Your Practical Guide to the EU AI Act

The EU AI Act is the world’s first comprehensive law on artificial intelligence. It establishes a legal framework for AI systems based on a straightforward principle: the greater the risk an AI system presents, the stricter the rules it must adhere to. The goal isn't to stifle innovation but to ensure that any AI developed, used, or sold in the European Union is safe, transparent, and respects fundamental human rights.

What Is the EU AI Act Really About?

It’s helpful to view the EU AI Act less as a restrictive legal document and more as a set of safety standards, similar to those we have for consumer products. A child's toy is not subject to the same level of scrutiny as a life-saving medical device because their potential for harm is vastly different.

The Act applies this same risk-based logic to AI. It recognizes that a recommendation engine suggesting a movie is fundamentally different from an AI system making hiring decisions or diagnosing a medical condition. By classifying AI applications into distinct risk categories, the law wisely concentrates the most stringent regulations on the areas where the stakes are highest.

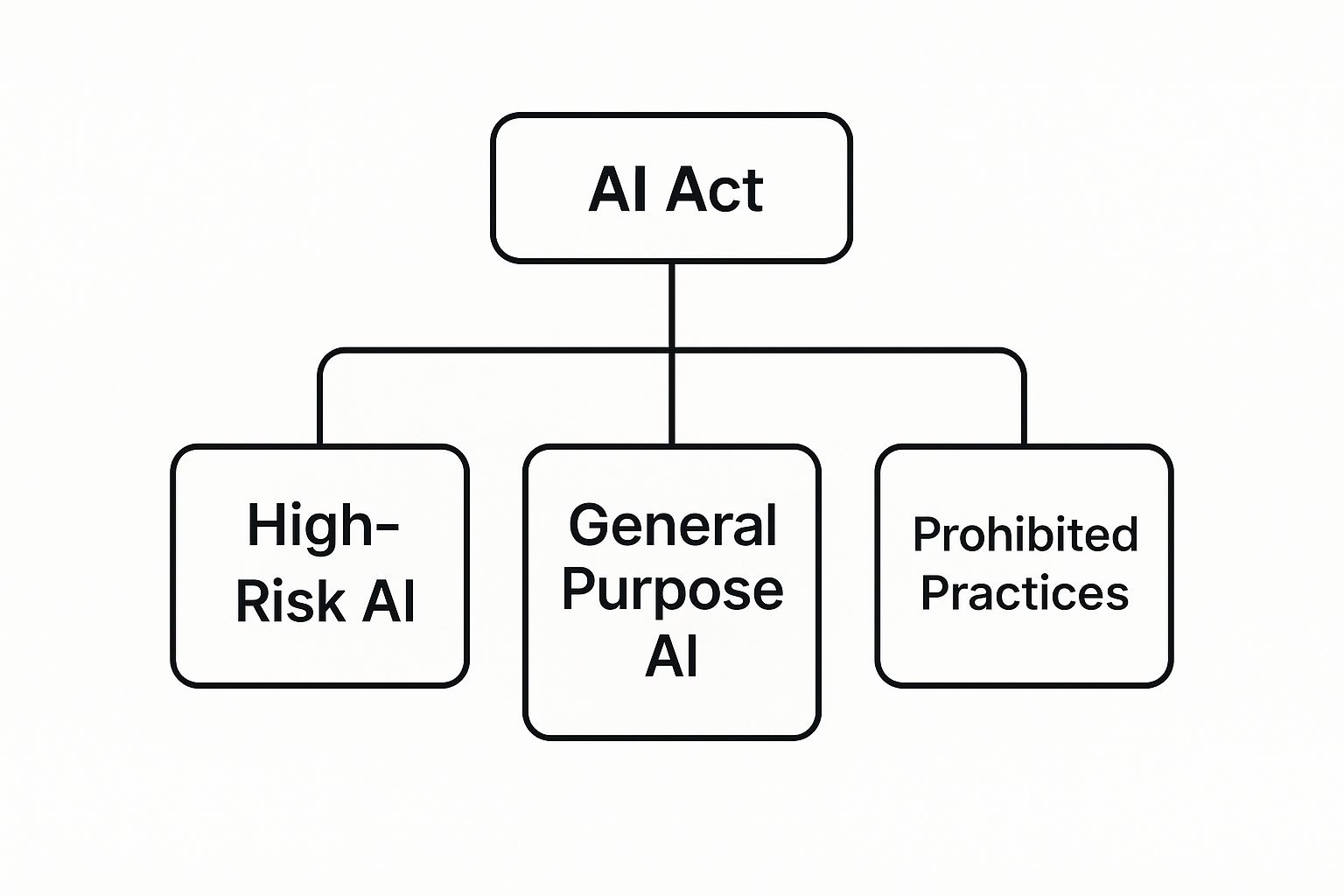

For a quick overview of the Act's main components, here's a look at its core structure.

The EU AI Act At a Glance

| Component | Description |
|---|---|
| Primary Objective | To ensure AI systems placed on the European market are safe and respect fundamental rights, while fostering investment and innovation. |
| Scope | Applies to providers, deployers (users), importers, and distributors of AI systems placed on the market or used in the EU, regardless of where these organizations are based. |
| Core Mechanism | A risk-based approach that classifies AI into four tiers, with obligations proportional to the level of potential risk. |

This tiered system is the foundation upon which the entire regulation is built.

The Core Goal: A Risk-Based Approach

With its official entry into force on 1 August 2024, the EU AI Act has set a global precedent. Its core mission is to regulate AI by categorizing systems according to risk, thereby creating a predictable and trustworthy environment for innovation across Europe. For details on how member states are adopting the framework, you can explore the national implementation plans.

This risk-based framework is the heart of the legislation. It creates four distinct levels to classify AI systems, making sure the regulatory burden fits the potential societal impact.

- Unacceptable Risk: These are AI practices considered a clear threat to people's safety, rights, and livelihoods. Examples include social scoring and AI that manipulates human behavior in harmful ways. These systems are banned.

- High-Risk: This critical category covers AI used in sensitive areas like healthcare, education, critical infrastructure, and law enforcement. These systems face stringent requirements, including rigorous assessments before they can be placed on the market.

- Limited Risk: Here, the primary obligation is transparency. People must be told when they are interacting with an AI system such as a chatbot, and AI-generated or manipulated content such as deepfakes must be labeled as such.

- Minimal Risk: This category includes the vast majority of AI systems, such as spam filters or AI in video games. These face no new legal obligations under the Act.

By focusing its regulatory power on high-risk applications, the AI Act aims to build public trust without stifling the development of low-risk technologies that drive progress and simplify daily life.

Ultimately, the purpose of the AI Act is to establish a stable and secure environment for both AI developers and citizens. It provides a clear legal roadmap that protects fundamental rights while giving businesses the confidence to invest in and deploy AI solutions responsibly within the vast European single market. With this foundation, we can now examine what these risk tiers mean for your business in practice.

Breaking Down the Four AI Risk Tiers

Think of the EU AI Act as a pyramid. At the very top, where the point is sharpest, you’ll find the AI systems that pose the greatest danger to our fundamental rights. As you move down toward the wide, stable base, the level of risk—and therefore the intensity of the rules—eases up considerably.

This risk-based structure is the single most important concept to grasp. Not all AI is created equal, and the law gets that. By sorting artificial intelligence into four distinct tiers, the AI Act focuses its strongest regulations only where they're absolutely necessary, letting innovation thrive in lower-risk areas.

Seen as a hierarchy, the structure makes one thing clear: prohibited practices and high-risk AI are the core focus of the regulation. Let's dig into what each of these tiers actually means for you.

Tier 1: Unacceptable Risk

This is the top of the pyramid, reserved for AI that represents a clear threat to people's safety, livelihoods, and basic rights. The EU has drawn a hard line here. Any AI system falling into this category is outright banned from the European market. Period.

Outside a few tightly defined exceptions, there are no workarounds. These are digital red lines that simply cannot be crossed. The ban list includes:

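- Social scoring: Systems that evaluate or rank people based on their social behavior or personal traits, leading to unjustified or disproportionate detrimental treatment, are strictly forbidden, whether they are run by public authorities or private companies.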

- Subliminal manipulation: AI that uses hidden techniques to warp a person's behavior in a way that could cause physical or psychological harm is out.

- Exploiting vulnerabilities: This includes AI designed to exploit the weaknesses of people due to their age, a disability, or a specific social or economic situation.

- Real-time remote biometric identification: The use of systems like live facial recognition in public spaces by law enforcement is banned, with only a few very narrow exceptions for serious crimes.

These prohibitions were among the first provisions of the AI Act to take effect, applying from 2 February 2025, which marked a significant step in the regulation's implementation.

Tier 2: High-Risk

This is probably the most complex and impactful category for businesses. High-risk AI systems aren’t banned, but they are subject to a tough set of rules both before and after they hit the market. We're talking about systems where a failure could have serious consequences for someone's health, safety, or fundamental rights.

The high-risk category is where the AI Act truly flexes its regulatory muscle. Compliance here isn't just about ticking boxes; it's about fundamentally rethinking how AI is designed, documented, and monitored for its entire lifespan.

You’ll find high-risk AI in critical sectors. Some clear-cut examples include:

- Medical devices: AI software used for diagnostics, like analyzing medical scans to spot diseases.

- Critical infrastructure: AI used as a safety component in managing essential utilities like water, gas, and electricity supply.

- Employment and recruitment: AI tools that screen résumés, filter job applicants, or influence promotion decisions.

- Education: Systems that determine access to schools or universities, or that grade student exams.

- Law enforcement: AI used to assess the reliability of evidence or predict the risk of someone reoffending.

Providers of these systems have a lot of homework to do. They must conduct conformity assessments, build robust risk management systems, ensure high-quality data governance, and maintain incredibly detailed technical documentation. To get a better handle on these applications, you can learn more about classifying various AI systems and what the new rules mean for them.

Tier 3: Limited Risk

Moving down the pyramid, we arrive at AI systems that pose a "limited risk." For this group, the name of the game is transparency. The goal is simple: make sure people know when they are interacting with an AI.

This is all about giving users enough information to make an informed choice about whether to keep engaging. Common examples include:

- Chatbots: Any bot talking to customers must clearly state that the user is communicating with an AI, not a person.

- Deepfakes and generated content: Any content that has been artificially created or manipulated—like images, audio, or video—must be labeled as such.

- Emotion recognition systems: If an AI is being used to identify or infer emotions, people have to be told that they're being exposed to such a system.

The rules here are pretty straightforward. There are no pre-market assessments or complex paperwork—just clear and timely disclosure to the user.
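For a customer-facing chatbot, the disclosure duty can be built straight into the conversation layer. The sketch below is a minimal illustration under our own assumptions: `ChatSession` and the `AI_DISCLOSURE` wording are hypothetical, and the Act requires that people be informed but does not prescribe this text or this design.

```python
from dataclasses import dataclass, field

# Hypothetical disclosure wording; the Act does not prescribe exact text.
AI_DISCLOSURE = "You are chatting with an AI system, not a human."


@dataclass
class ChatSession:
    """Hypothetical wrapper that shows the AI disclosure once, at the start."""
    disclosed: bool = False
    transcript: list[str] = field(default_factory=list)

    def reply(self, text: str) -> str:
        # Prepend the disclosure to the first bot message of the session only.
        if not self.disclosed:
            text = f"{AI_DISCLOSURE}\n{text}"
            self.disclosed = True
        self.transcript.append(text)
        return text


session = ChatSession()
print(session.reply("Hi! How can I help?"))
print(session.reply("Your order has shipped."))
```

Putting the notice in the very first reply, rather than in terms of service, reflects the idea of clear and timely disclosure described above.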

Tier 4: Minimal Risk

Finally, we hit the base of the pyramid: minimal or no-risk AI. This category covers the vast majority of AI systems we use every day. To avoid killing innovation, the AI Act deliberately takes a hands-off approach here.

These systems present little to no threat to our rights or safety. You see them everywhere:

- Spam filters in your email.

- Recommendation engines on Netflix or Spotify.

- AI-powered inventory management in a warehouse.

- AI features inside video games.

For these minimal-risk applications, there are no new legal obligations under the AI Act. Companies developing or using them can carry on as before. While some may choose to voluntarily adopt codes of conduct to build trust, it's not a legal requirement.

How to Prepare for AI Act Compliance

Knowing the AI Act’s risk categories is one thing, but actually turning that knowledge into a practical plan is a whole different ballgame. For any business building or using high-risk AI, getting to compliance means taking a structured and proactive approach. Simply waiting for the deadlines to hit is not an option.

Think of it like building a house. You wouldn’t just start throwing up walls without a solid foundation and a detailed blueprint. In the same way, AI Act compliance demands careful planning, starting with a full inventory of your AI systems so you can get a clear picture of your specific duties.

The stakes for getting this wrong are incredibly high. Non-compliance can lead to staggering penalties: for the most serious violations, fines can reach €35 million or 7% of your company's global annual turnover, whichever is higher. That’s not a slap on the wrist; it’s a clear signal that the EU is serious about enforcing these rules.

Begin with an AI System Inventory

Your first step on the path to compliance is simply knowing what you’re working with. You can’t assess the risk of AI systems you aren’t even aware of. This means you need to conduct a thorough inventory of every single AI tool your organization develops, deploys, or integrates into its products.

This audit needs to be detailed. Go beyond just the name of the system and capture its purpose, the data it processes, and exactly how it fits into your business operations. Once you have that complete list, you can move on to the crucial task of classifying each system by risk.

By mapping out your entire AI ecosystem, you lay the essential groundwork for your compliance strategy. This first step is all about prioritizing your efforts and focusing your resources on the systems that will fall into that all-important high-risk category.
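One way to keep such an inventory is as structured records rather than a loose spreadsheet, so it can be sorted and queried. The sketch below assumes hypothetical field names (`purpose`, `data_processed`, `business_role`) that mirror the details suggested above; the Act does not mandate any particular inventory format.

```python
from dataclasses import dataclass
from enum import IntEnum


class RiskTier(IntEnum):
    # Ordered so that a higher value means stricter rules.
    MINIMAL = 1
    LIMITED = 2
    HIGH = 3
    UNACCEPTABLE = 4


@dataclass
class AISystemRecord:
    """One row of a hypothetical AI inventory (field names are our own)."""
    name: str
    purpose: str
    data_processed: list[str]
    business_role: str  # e.g. "developed", "deployed", "integrated"
    risk_tier: RiskTier


def prioritize(inventory: list[AISystemRecord]) -> list[AISystemRecord]:
    """Order systems from strictest to lightest tier, so prohibited and
    high-risk systems are reviewed first."""
    return sorted(inventory, key=lambda r: r.risk_tier, reverse=True)


inventory = [
    AISystemRecord("spam-filter", "Filter inbound email", ["email text"], "deployed", RiskTier.MINIMAL),
    AISystemRecord("cv-screener", "Rank job applicants", ["CVs"], "developed", RiskTier.HIGH),
    AISystemRecord("support-bot", "Answer customer questions", ["chat messages"], "integrated", RiskTier.LIMITED),
]
for record in prioritize(inventory):
    print(record.risk_tier.name, record.name)
```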

The Core Duties for High-Risk AI

If your inventory uncovers a high-risk AI system, a specific and demanding set of obligations immediately kicks in. These requirements are the very heart of the AI Act’s framework, designed to ensure safety, transparency, and accountability from start to finish.

Your compliance checklist should include these key actions:

- Establish a Risk Management System: This isn’t a one-and-done check. You need to build and maintain an ongoing process to identify, evaluate, and mitigate any risks your AI might pose to health, safety, or fundamental rights. It’s a continuous cycle of assessment and adjustment.

- Ensure Robust Data Governance: The quality of the data you use is non-negotiable. High-risk systems must be trained, validated, and tested on datasets that are relevant, sufficiently representative, and as free of errors and as complete as possible. Those datasets must also be examined for possible biases. This demands meticulous oversight of how you collect and manage your data.

- Maintain Detailed Technical Documentation: You must prepare comprehensive technical documentation before your system ever hits the market. This file should include everything from the AI's intended purpose and architecture to its performance metrics and human oversight measures. This is what authorities will comb through during an audit. You can get a better sense of what's needed by exploring different types of software for compliance.

- Implement Human Oversight: High-risk AI can't be left to its own devices. Your systems must be designed to allow for real human oversight. This means a person must have the ability to intervene, shut down, or override the system if it starts acting unexpectedly or creating a risk.

- Conduct a Conformity Assessment: Before your high-risk AI can be sold or used in the EU, it has to pass a conformity assessment. This is where you prove that your system meets all the Act's requirements, demonstrating that you’ve done your homework and the system is ready for prime time.
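To make the human oversight duty concrete, here is one possible software pattern: a gate that lets a reviewer override any model output, halts the system on command, and logs every decision. It is a minimal sketch under our own assumptions; `OversightGate` and `toy_model` are hypothetical names, and the Act requires effective oversight without prescribing this or any other design.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class OversightGate:
    """Hypothetical wrapper giving a human reviewer stop and override controls."""
    model: Callable[[dict], str]
    stopped: bool = False
    audit_log: list[tuple[dict, str, str]] = field(default_factory=list)

    def stop(self) -> None:
        # The "stop button": once pressed, no further decisions are produced.
        self.stopped = True

    def decide(self, case: dict, human_override: Optional[str] = None) -> str:
        if self.stopped:
            raise RuntimeError("System halted by human overseer")
        proposal = self.model(case)
        # A human decision, when given, always takes precedence over the model.
        final = human_override if human_override is not None else proposal
        # Record the case, the model's proposal, and the final outcome.
        self.audit_log.append((case, proposal, final))
        return final


def toy_model(case: dict) -> str:
    # Placeholder scoring rule standing in for a real high-risk model.
    return "reject" if case.get("score", 0) < 50 else "shortlist"


gate = OversightGate(toy_model)
print(gate.decide({"score": 40}))                              # model's decision
print(gate.decide({"score": 40}, human_override="shortlist"))  # reviewer overrides
gate.stop()
```

Logging both the model's proposal and the final outcome also gives you evidence of oversight in action for your technical documentation.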

Post-Market Monitoring and Beyond

Compliance doesn't stop at launch. The AI Act also requires a post-market monitoring system to continuously collect and analyze data on how your high-risk AI performs out in the real world.

This system should be designed to proactively spot emerging risks or incidents. If a serious incident occurs, such as harm to someone's health or a breach of obligations that protect fundamental rights, you must report it to the market surveillance authority of the affected member state without delay, and in general no later than 15 days after becoming aware of it, with shorter limits for the most severe cases. This ongoing vigilance is what ensures AI systems remain safe and fair long after they've left the lab. Getting ready for these duties now isn't just about dodging fines; it’s about building trust and securing your technology’s future in the European market.
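Incident handling is easier to audit when the outer reporting deadline is computed automatically. The sketch below encodes the limits as we read Article 73 (15 days in general, 10 days where a person has died, 2 days for widespread infringements or serious disruption of critical infrastructure). It assumes plain calendar-day counting from the day you became aware, and it only gives the latest date: the Act still expects reporting as soon as a causal link is established or suspected.

```python
from datetime import date, timedelta
from enum import Enum


class IncidentKind(Enum):
    # Outer reporting limits in days, as we read Article 73.
    GENERAL = 15                # any other serious incident
    DEATH = 10                  # death of a person
    WIDESPREAD_OR_CRITICAL = 2  # widespread infringement or critical-infrastructure disruption


def latest_report_date(aware_on: date, kind: IncidentKind) -> date:
    """Latest permissible report date, assuming calendar days from awareness."""
    return aware_on + timedelta(days=kind.value)


print(latest_report_date(date(2026, 9, 1), IncidentKind.GENERAL))  # 2026-09-16
```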

Why the AI Act Matters Globally

Even though the AI Act was born in Europe, its impact is being felt thousands of miles away. Think back to what happened with the General Data Protection Regulation (GDPR). It started as an EU law but quickly became the gold standard for data privacy worldwide. The AI Act is on the same path.

This is what's known as the "Brussels Effect." The logic is simple. Any company, whether in Silicon Valley or Singapore, that wants to sell its AI-powered products to the EU’s massive consumer base has to play by EU rules.

It’s just bad business sense to create different versions of your product for every market. Instead, companies find it far more efficient to build one version that meets the EU's strict standards and then roll it out globally. In effect, EU regulations get exported, setting a new bar for everyone.

Getting ahead of this curve isn’t just about compliance; it's a smart strategic move for any company with global ambitions.

The Global Regulatory Patchwork

As countries around the world race to get a handle on AI, a jumble of different rules is starting to emerge. There's no single global consensus, which means organizations operating internationally need to pay close attention to the shifting landscape.

The EU's approach stands out. It's built on a foundation of protecting fundamental human rights, carefully balancing the drive for innovation against the potential for real-world harm. This isn't the case everywhere. Other major powers are charting their own courses, driven by different economic priorities and cultural values.

This creates a complicated map for businesses to navigate. An AI system that’s perfectly fine in one country could be heavily restricted—or even banned outright—in another. Understanding this regulatory patchwork has become a crucial part of doing business on the world stage.

The AI Act establishes a clear, predictable legal framework, which can actually foster trust and investment. By setting a high bar for safety and ethics, it gives businesses a clear target to aim for, reducing legal uncertainty.

The global push to regulate is undeniable. From 2023 to 2024 alone, legislative mentions of AI rose by 21.3% across 75 countries, and they have increased ninefold since 2016. It's clear that governments everywhere agree AI can't just operate in a legal Wild West. You can dive deeper into these global AI Act implementation trends to see how things are shaping up.

Comparing International Approaches

To really grasp why the AI Act is such a big deal, it helps to see how the EU’s strategy compares to what other global players are doing. Each region has its own flavor, shaped by its unique political and economic goals.

Global AI Regulatory Approaches Compared

The table below breaks down the different philosophies guiding AI governance in the EU, the United States, and China.

| Region | Regulatory Approach | Primary Focus |
|---|---|---|
| European Union | Comprehensive, horizontal, and risk-based legislation. | Protecting fundamental rights, safety, and establishing clear legal certainty for trustworthy AI. |
| United States | Sector-specific and market-driven, emphasizing innovation. | Fostering competitive advantage and technological leadership through voluntary frameworks and agency-specific rules. |
| China | State-driven and centrally controlled, with a focus on specific applications. | Promoting national strategic goals, social stability, and state oversight of AI development and deployment. |

This side-by-side comparison makes the EU's position crystal clear. While the U.S. opts for a lighter, more hands-off approach to spur innovation and China prioritizes state control, the EU has planted its flag firmly on the side of human rights and safety.

For any company serving customers across the globe, this makes the EU's framework the one to watch. It's fast becoming the de facto standard, ensuring that products are built to succeed in the world’s most demanding regulatory market.

Understanding Key Dates and Deadlines

Getting compliant with the AI Act isn't like flipping a switch. The rules don't all come online at once. Instead, they’re being rolled out in phases over three years, giving everyone a chance to get their house in order as different requirements become law.

This staggered timeline means you need to have a calendar handy. Knowing what's due and when is crucial for planning your compliance strategy and making sure you're not caught off guard. Some deadlines have already passed, and the rest arrive in stages through August 2027.
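A compliance calendar can be as simple as a dated list you can query. The dates below follow the application schedule in Article 113 of Regulation (EU) 2024/1689 as adopted; later amendments could shift them, so treat this as a sketch to check against the current official text. The milestone labels are our own summaries.

```python
from datetime import date
from typing import Optional

# Application dates from Article 113 (as adopted); labels are our own summaries.
MILESTONES = [
    (date(2024, 8, 1), "Act enters into force"),
    (date(2025, 2, 2), "Prohibited practices banned; AI literacy duty applies"),
    (date(2025, 8, 2), "Obligations for general-purpose AI models apply"),
    (date(2026, 8, 2), "Most remaining rules apply, incl. Annex III high-risk and transparency duties"),
    (date(2027, 8, 2), "High-risk rules for AI in products under Annex I legislation apply"),
]


def milestones_in_effect(on: date) -> list[str]:
    """Return every milestone that has already taken effect on the given date."""
    return [label for when, label in MILESTONES if when <= on]


def next_milestone(on: date) -> Optional[tuple[date, str]]:
    """Return the first milestone still in the future, or None if all have passed."""
    upcoming = [(when, label) for when, label in MILESTONES if when > on]
    return upcoming[0] if upcoming else None


today = date(2025, 3, 1)
print(milestones_in_effect(today))
print(next_milestone(today))
```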

The First Wave: Prohibited AI Practices

The first set of rules to take effect was the ban on AI systems that pose an "unacceptable risk," which has applied since 2 February 2025. These are the applications the EU considers a direct threat to our fundamental rights.

This was a big first step. It is now illegal to place on the EU market, put into service, or use any AI system that engages in practices like:

- Social scoring, whether by public authorities or private companies.

- AI designed to manipulate people into harmful behavior.

- Systems that exploit the vulnerabilities of certain groups.

- Most uses of real-time remote biometric identification by law enforcement in publicly accessible spaces.

A particularly relevant ban for businesses is the prohibition on using AI to infer emotions in the workplace and in educational institutions, except for medical or safety reasons. This first deadline sends a powerful message: the most dangerous uses of AI are off the table.

Key Milestones on the Horizon

Looking ahead, the timeline gets more complex, especially for anyone building or using powerful AI models. Each approaching date adds another layer of the AI Act, demanding new actions and adjustments.

For example, a new set of rules for General-Purpose AI (GPAI) models applies from 2 August 2025. If you provide a large language model, you now have a new list of responsibilities.

This is a major shift. We're moving from just banning specific use cases to regulating the core technology itself. The burden of responsibility now falls squarely on the model developers at the very top of the AI food chain.

These new GPAI duties include keeping extensive technical documentation, sharing key information with companies that build on your model, and publishing clear summaries of your training data. As you can imagine, actually doing all of this presents some real-world challenges.

Implementation Challenges and Your Strategy

The rollout of the AI Act hasn't been perfectly smooth. A good example is the potential delay in publishing guidance, such as a Code of Practice for General-Purpose AI (GPAI) models. Such delays can make it tricky for businesses to prepare for upcoming deadlines. For more on this, you can discover insights on potential EU AI Act delays and global regulation.

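Further down the road, on 2 August 2026 under the adopted timetable, another wave of rules activates transparency obligations for things like chatbots and deepfakes, along with most requirements for high-risk AI systems. Finally, on 2 August 2027, the high-risk requirements for AI built into products already covered by EU safety legislation, such as medical devices, become enforceable. This implementation roadmap is clear, but it's definitely a marathon, not a sprint.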

Common Questions About the EU AI Act

As the world’s first major law for artificial intelligence, the AI Act has stirred up a lot of questions. For businesses trying to make sense of their new responsibilities, a few key points of confusion seem to pop up again and again. Getting clear, straightforward answers is the first step toward building a compliance strategy you can feel confident in.

This section gets right to the point, tackling the most frequent and pressing questions we hear from organizations both inside and outside the European Union. From figuring out who the law actually applies to, to understanding the potential financial fallout of getting it wrong, these answers will help you navigate the realities of this landmark regulation.

Who Does the EU AI Act Apply To?

There's a common myth that the AI Act only matters for European companies. The truth is, its reach is global. The law has what’s called an "extraterritorial scope," which is a formal way of saying it applies to any organization that puts an AI system on the EU market, regardless of where that company is headquartered.

This means a software developer in the United States, a tech firm in Asia, or a service provider anywhere else in the world has to comply if their AI-powered products are available to customers in an EU member state. The rules are even triggered if the output of an AI system is simply used within the EU.
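A first-pass scope screen can capture the main triggers in Article 2(1): placing AI on the EU market, deploying AI while established or located in the EU, or having an AI system's output used in the EU. This is a simplified sketch, not legal advice; the `Organization` fields are hypothetical, and the Act's exclusions (for example, military uses or purely personal, non-professional use) are deliberately ignored here.

```python
from dataclasses import dataclass


@dataclass
class Organization:
    """Hypothetical, simplified facts about an organization's AI activity."""
    places_ai_on_eu_market: bool  # sells or puts into service an AI system in the EU
    deployer_located_in_eu: bool  # uses AI systems while established or located in the EU
    ai_output_used_in_eu: bool    # output of its AI system is used in the EU


def act_likely_applies(org: Organization) -> bool:
    """First-pass screen over the main Article 2(1) triggers. Headquarters
    location is deliberately not an input: it does not decide the question."""
    return (
        org.places_ai_on_eu_market
        or org.deployer_located_in_eu
        or org.ai_output_used_in_eu
    )


us_saas = Organization(places_ai_on_eu_market=False, deployer_located_in_eu=False, ai_output_used_in_eu=True)
print(act_likely_applies(us_saas))  # True
```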

This is a textbook example of the "Brussels Effect." Just as GDPR became the unofficial global standard for data privacy, the AI Act is quickly setting the international benchmark for AI safety and governance.

Because of this long reach, any company with an international footprint needs to take a hard look at its obligations under the Act. Assuming you're exempt just because you're not based in Europe is a risky and flawed bet.

What Are the Penalties for Non-Compliance?

Let's be blunt: the financial penalties for violating the AI Act are severe. They were designed that way to make sure organizations take their new duties seriously. The fines are tiered based on how serious the violation is, with the heaviest penalties reserved for the most flagrant offenses.

The potential fines can be absolutely staggering:

- Prohibited AI: Using an AI system that falls into the "unacceptable risk" category can lead to fines of up to €35 million or 7% of your company's total worldwide annual turnover—whichever is higher.

- Other Key Obligations: Failing to meet the requirements for high-risk AI systems carries penalties of up to €15 million or 3% of worldwide turnover.

- Supplying Incorrect Information: Even just providing incorrect, incomplete, or misleading information to the authorities can result in a fine of up to €7.5 million or 1% of turnover.
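Because each cap is "a fixed amount or a share of turnover," the ceiling you face depends on company size. The sketch below computes that upper bound from the Article 99 caps, including the rule that, for SMEs and start-ups, the lower of the two amounts applies. It is only a maximum: actual fines are set by authorities based on the circumstances of each case.

```python
from enum import Enum


class Violation(Enum):
    # (fixed cap in EUR, percent of total worldwide annual turnover), per Article 99
    PROHIBITED_PRACTICE = (35_000_000, 7)
    OTHER_OBLIGATION = (15_000_000, 3)
    INCORRECT_INFORMATION = (7_500_000, 1)


def max_fine(violation: Violation, annual_turnover_eur: float, is_sme: bool = False) -> float:
    """Upper bound of the fine: the higher of the two caps for most companies,
    the lower of the two for SMEs and start-ups."""
    fixed_cap, percent = violation.value
    turnover_cap = annual_turnover_eur * percent / 100
    return min(fixed_cap, turnover_cap) if is_sme else max(fixed_cap, turnover_cap)


print(max_fine(Violation.PROHIBITED_PRACTICE, 1_000_000_000))  # 70000000.0
```

For a company with €1 billion in turnover, 7% (€70 million) exceeds the €35 million fixed cap, so the higher figure sets the ceiling.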

These numbers make it crystal clear that non-compliance isn’t just a legal headache; it's a massive financial risk that could seriously impact a company's bottom line and public reputation.

What Is a General-Purpose AI Model?

The AI Act creates a special bucket for what it calls General-Purpose AI (GPAI) models. The easiest way to think of these is as powerful, foundational AI—like the models that generate text, create images, or write code. They aren't built for just one narrow task but can be adapted for a huge range of different applications.

The Act places specific transparency rules on all GPAI models. This includes keeping technical documentation up-to-date and providing clear information to the downstream developers who build applications on top of them.

But it goes a step further. The law also identifies a subset of GPAI models that pose "systemic risk." These are the ultra-powerful models: a model is presumed to fall into this group when the cumulative computing power used to train it exceeds 10^25 floating-point operations (FLOPs). These specific models face much tougher rules, including mandatory model evaluations, assessing and mitigating systemic risks, and ensuring top-tier cybersecurity.
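The compute threshold lends itself to a quick back-of-the-envelope check. The sketch below compares training compute against the 10^25 FLOP presumption in Article 51(2). To estimate compute, it swaps in the widely used "6 × parameters × training tokens" rule of thumb for dense transformers, which is an engineering heuristic and not part of the Act. The Commission can also designate models as systemic-risk on other grounds, so falling below the line is not the end of the analysis.

```python
# Presumption threshold from Article 51(2): training compute greater than 10^25 FLOPs.
SYSTEMIC_RISK_FLOP_THRESHOLD = 1e25


def estimate_training_flops(parameters: float, training_tokens: float) -> float:
    """Rough estimate for dense transformers using the common ~6 * N * D
    heuristic (an assumption, not a method defined by the Act)."""
    return 6 * parameters * training_tokens


def presumed_systemic_risk(training_flops: float) -> bool:
    # "Greater than" the threshold, so exactly 10^25 does not trigger it.
    return training_flops > SYSTEMIC_RISK_FLOP_THRESHOLD


flops = estimate_training_flops(parameters=70e9, training_tokens=15e12)
print(f"{flops:.2e}", presumed_systemic_risk(flops))  # 6.30e+24 False
```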

How Can My Business Start Preparing Now?

Just because the AI Act is rolling out in phases doesn't mean you can afford to wait. The time to start preparing is now, not when the deadlines are looming. A proactive approach is the only way to ensure you hit every milestone and weave compliance smoothly into your daily operations.

Here are four practical steps you can take today:

- Conduct an AI Inventory: Start by making a complete list of every AI system your business develops, deploys, or simply uses. You can't comply with the law if you don't know what you have. This inventory is your foundation.

- Perform a Risk Classification: Go through your list and assign a preliminary risk classification to each system based on the Act's four tiers. This will immediately show you which systems are considered high-risk and need the most attention.

- Assign Internal Responsibility: Designate a person or a team to spearhead your AI Act compliance efforts. It’s also time to start educating key departments—from legal and IT to product development—on what's coming.

- Develop a Formal Strategy: Keep an eye on official updates from the EU AI Office and think about working with experts to build a formal compliance roadmap. For complex cases, it often helps to get specialized advice to make sure your strategy is solid. If you have specific questions about your obligations, you can contact us for guidance on the AI Act.
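For the risk classification step, a simple keyword triage can give you a first-cut tier for each inventory entry before legal review. The sketch below is hypothetical throughout: the tag vocabulary is drawn only from the examples in this guide, it is not exhaustive, and it is no substitute for a proper assessment against the Act's annexes.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical tags based on this guide's examples, listed strictest tier first.
TRIAGE_RULES = [
    (RiskTier.UNACCEPTABLE, {"social scoring", "subliminal manipulation",
                             "exploiting vulnerabilities", "workplace emotion recognition"}),
    (RiskTier.HIGH, {"medical diagnostics", "critical infrastructure", "recruitment",
                     "education access", "exam grading", "reoffending risk"}),
    (RiskTier.LIMITED, {"chatbot", "deepfake", "emotion recognition"}),
]


def preliminary_tier(use_case_tags: set[str]) -> RiskTier:
    """Return the strictest tier whose tags match; because rules are checked
    strictest first, the first match wins. Defaults to MINIMAL."""
    for tier, keywords in TRIAGE_RULES:
        if use_case_tags & keywords:
            return tier
    return RiskTier.MINIMAL


print(preliminary_tier({"chatbot", "recruitment"}).value)  # high
```

Obligations can stack: a high-risk recruitment chatbot still has to disclose that it is an AI. Treat the result as the headline tier only, not the full list of duties.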

By taking these steps now, you can turn compliance from a source of anxiety into a manageable, strategic part of your business.

Ready to stop worrying about compliance and start focusing on innovation? ComplyACT AI guarantees your organization can meet the EU AI Act's requirements in just 30 minutes. Our platform automates AI system classification, generates audit-ready technical documentation, and provides continuous monitoring to keep you compliant. Avoid the risk of massive fines and join leaders like DeepMind and Siemens who trust us to make compliance simple. Get started with ComplyACT AI today.