A Guide to AI Compliance and the EU AI Act

At its core, AI compliance is all about following the rules—the laws, regulations, and ethical standards that govern how artificial intelligence is built and used. This isn't just about best practices anymore. With major legislation like the EU AI Act leading the charge, what was once a set of guidelines is now a framework of legal obligations for businesses.

The New Reality of AI Compliance

We've entered a new era where AI innovation and regulation are two sides of the same coin. The days of treating AI ethics as a philosophical debate are gone. Today, AI compliance is a concrete, bottom-line business requirement. Around the world, governments are rushing to put legal guardrails in place to manage both the promise and the perils of AI, with the EU AI Act setting the global pace.

This isn't about slowing down progress; it's about building trust from the ground up. Think of it like this: building codes don't stop us from constructing skyscrapers, they make sure those skyscrapers are safe. AI compliance does the same thing, providing a blueprint for building AI that is reliable, fair, and trustworthy. These rules are there to make sure AI systems don't harm people, amplify bias, or run wild without human oversight.

Why Compliance Is Now a Strategic Priority

So, what's driving this global push? It's the simple recognition that AI's influence is too massive to be left to chance. From the algorithms that decide who gets a job interview to the tools that help doctors diagnose diseases, AI is making calls that deeply impact our lives. Without clear rules of the road, the risk of things going wrong is just too high.

The EU AI Act is a perfect example of this new reality in action. It’s a landmark law that sets a global benchmark by sorting AI systems based on their potential for harm. The higher the risk, the stricter the rules. This forces companies to stop treating safety and fairness as afterthoughts and start baking them into their AI from day one.

AI compliance has officially moved out of the legal department's basement and into the boardroom. It's now a core part of corporate strategy that shapes product development, market access, and brand reputation—turning what seems like a hurdle into a real competitive edge.

Bridging the Gap Between Ambition and Readiness

It's clear that businesses are getting the message. The drive to adopt AI is intense, but so is the need to proceed with caution. A recent survey revealed that while 72.5% of companies are planning to weave AI into their compliance work soon, a massive 95.6% admit they aren't very confident they can manage it effectively. For more on this, check out the 2025 State of AI in Compliance Report.

This data shines a spotlight on a critical disconnect between ambition and actual preparedness. Many organizations are racing to implement AI without having the right governance in place. Building a solid AI compliance program, with a clear focus on regulations like the EU AI Act, is the only way to close that gap and ensure that as your AI capabilities expand, your control over them does too.

Decoding the EU AI Act: A Global Benchmark

As artificial intelligence weaves its way into every corner of our lives, the world has been asking a big question: how do we govern it? The European Union has provided a decisive answer with the EU AI Act. This isn't just some regional directive; it’s the world's first comprehensive legal rulebook for AI, and it’s setting a standard that businesses everywhere need to understand.

At its core, the Act is a balancing act. It's designed to spark AI innovation while making sure these powerful technologies respect our fundamental rights, safety, and democratic values.

Think of it as the GDPR, but for the AI era. The same way GDPR rewrote the rules for data privacy globally, the EU AI Act is creating a new gold standard for AI compliance. The goal is to build a world where AI systems are transparent, traceable, and always have a human in the loop, creating a bedrock of trust between us and the tech we use.

The Act's Global Reach Explained

Don't make the mistake of thinking this only applies to companies based in Europe. The EU AI Act has what's called extraterritorial scope, and it's a game-changer. Simply put, the law follows the AI system, not the company.

This means if you put an AI system on the market in the EU, or if its output is used by someone in the EU, you're on the hook. An American software company selling an AI diagnostic tool to a hospital in Berlin? They have to comply. A startup in Canada whose customer service chatbot is used by shoppers in Paris? They fall under the Act's rules, too. This massive reach makes understanding the EU AI Act an absolute must for any global business working with AI.

The European Union is effectively setting the pace for global AI regulation. This landmark law uses a risk-based approach to get ahead of harmful AI practices, banning the most dangerous applications like social scoring, manipulative tech, and real-time biometric surveillance. As it applies to anyone whose AI affects EU residents, its global impact is undeniable. You can dive deeper into 2025 global privacy and AI security regulations to see how this fits into the bigger picture.

What Does the AI Act Cover?

The Act defines an "AI system" very broadly to keep it from becoming outdated. It essentially covers any machine-based system that operates with some degree of autonomy and infers from the inputs it receives how to generate outputs, such as predictions, content, recommendations, or decisions, that can influence physical or virtual environments.

But it's not a one-size-fits-all law. The Act is smart about what it doesn't cover, carving out exceptions to avoid crushing innovation where the risks are low.

- Excluded Systems: AI systems built exclusively for military, defense, or national security purposes are out of scope.

- Research and Development: If a system is used purely for scientific R&D, it’s not subject to the rules. This protects the space for experimentation.

- Personal Use: An individual using AI for their own personal, non-professional reasons won't have to worry about compliance.

The EU AI Act’s approach is not to regulate AI technology itself, but rather its specific applications. This crucial distinction allows for a flexible, future-proof framework that targets real-world harm without unnecessarily burdening low-risk innovation.

Key Dates and Implementation Timeline

Getting compliant isn't a flip of a switch, and the EU AI Act is being rolled out in stages to give everyone time to prepare. Knowing this timeline is crucial for planning your compliance strategy. While the Act is officially law, its rules will become enforceable in waves.

Here are the key dates every business leader should have circled on their calendar, counted from the Act's entry into force on 1 August 2024:

- February 2025 (6 months in): The ban on "unacceptable risk" AI, such as social scoring or manipulative systems, kicks in.

- August 2025 (12 months in): Rules governing general-purpose AI models, which power many of today's most popular tools, become active.

- August 2026 (24 months in): The full suite of rules for "high-risk" AI systems becomes mandatory for most applications.

- August 2027 (36 months in): All remaining obligations, including for certain high-risk systems already covered by other EU laws, are enforced.

This phased rollout is a clear signal: the time to start your AI compliance journey is now. The first deadlines are right around the corner, and preparing for the high-risk requirements takes a serious head start.
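To make the month offsets in the timeline concrete, here is a minimal sketch that turns them into calendar months. It assumes an entry-into-force date of 1 August 2024; the names `MILESTONES`, `milestone_month`, and `months_remaining` are illustrative, and the result is only month-level, so check the Act itself for exact application dates.

```python
from datetime import date

# Assumption: the Act entered into force on 1 August 2024.
ENTRY_INTO_FORCE = date(2024, 8, 1)

# Month offsets taken from the phased rollout described above.
MILESTONES = {
    "Prohibited-practice ban": 6,
    "General-purpose AI model rules": 12,
    "High-risk system rules": 24,
    "Remaining obligations": 36,
}


def milestone_month(start: date, months: int) -> tuple[int, int]:
    """Return the (year, month) that falls `months` months after `start`."""
    total = (start.year * 12 + start.month - 1) + months
    return total // 12, total % 12 + 1


def months_remaining(today: date, target: tuple[int, int]) -> int:
    """Whole calendar months from `today` to the target month (0 if reached)."""
    diff = (target[0] * 12 + target[1]) - (today.year * 12 + today.month)
    return max(diff, 0)


for name, offset in MILESTONES.items():
    year, month = milestone_month(ENTRY_INTO_FORCE, offset)
    print(f"{name}: {year}-{month:02d}")
```

Calling `months_remaining(date.today(), milestone_month(ENTRY_INTO_FORCE, 24))` gives a rough planning horizon for the high-risk requirements.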

Navigating the Four AI Risk Categories

The EU AI Act is built on a simple but powerful idea: not all AI is created equal. Instead of a one-size-fits-all rulebook, the Act creates a risk-based pyramid. Think of it like regulating vehicles—a skateboard, a family car, and a freight train all have wheels, but they need vastly different safety rules based on their potential for harm.

This is exactly how the Act approaches AI. It sorts AI systems into four distinct tiers, each with its own set of rules and obligations. Figuring out where your AI fits into this structure is the first and most important step on your AI compliance journey under the EU AI Act.

Unacceptable Risk: The Red Line

At the very top of the pyramid, you'll find systems that pose an unacceptable risk. These are AI applications considered a clear threat to people's safety, livelihoods, and fundamental rights. The EU AI Act doesn't just regulate them; it bans them completely.

This category is reserved for AI that crosses a bright ethical line. A few examples of prohibited systems include:

- Social scoring: Any system, whether run by public authorities or private organizations, that classifies people based on their social behavior or personal traits and then leads to unjustified or disproportionate treatment of them.

- Manipulative techniques: AI that uses hidden or deceptive tactics to warp a person's behavior in a way that could cause physical or psychological harm.

- Exploitation of vulnerabilities: AI built to take advantage of a specific group's age, physical disability, or tough economic situation.

- Real-time remote biometric identification: The use of things like live facial recognition in public spaces by law enforcement, except for in a few very specific, narrowly defined emergencies.

For any business, the message here couldn't be clearer: if your AI falls into this category, it has no legal path to the EU market. Period.

High-Risk AI: The Zone of Intense Scrutiny

Right below the banned tier is the high-risk category. These AI systems don't pose a threat to our way of life, but they could still have a major negative impact on someone's safety or fundamental rights. This is where the bulk of the EU AI Act's compliance work really kicks in.

We're talking about AI used in critical areas where a mistake could have serious consequences. The Act treats two groups of systems as high-risk: AI systems that are safety components of products already regulated under EU law, and AI systems used for the specific use cases listed in Annex III.

A key thing to remember is that for an AI to be classified as high-risk, it must fall into a pre-defined category and pose a significant risk of harm to health, safety, or fundamental rights. This two-part test makes sure the rules are targeted and don't bog down AI with less potential for harm.

Common examples of high-risk AI applications are:

- Medical Devices: Software that helps doctors diagnose diseases or recommends treatments.

- Critical Infrastructure: Systems that manage our water, gas, or electricity grids.

- Education and Vocational Training: AI that decides who gets into a school or grades student exams.

- Employment: Algorithms used to screen job applications or decide who gets a promotion.

- Law Enforcement: Tools used to evaluate the reliability of evidence or predict criminal activity.

Any organization developing or using these systems faces the most serious AI compliance duties. They must carry out conformity assessments, set up solid risk management processes, guarantee data quality, and keep extensive technical documentation ready for review.

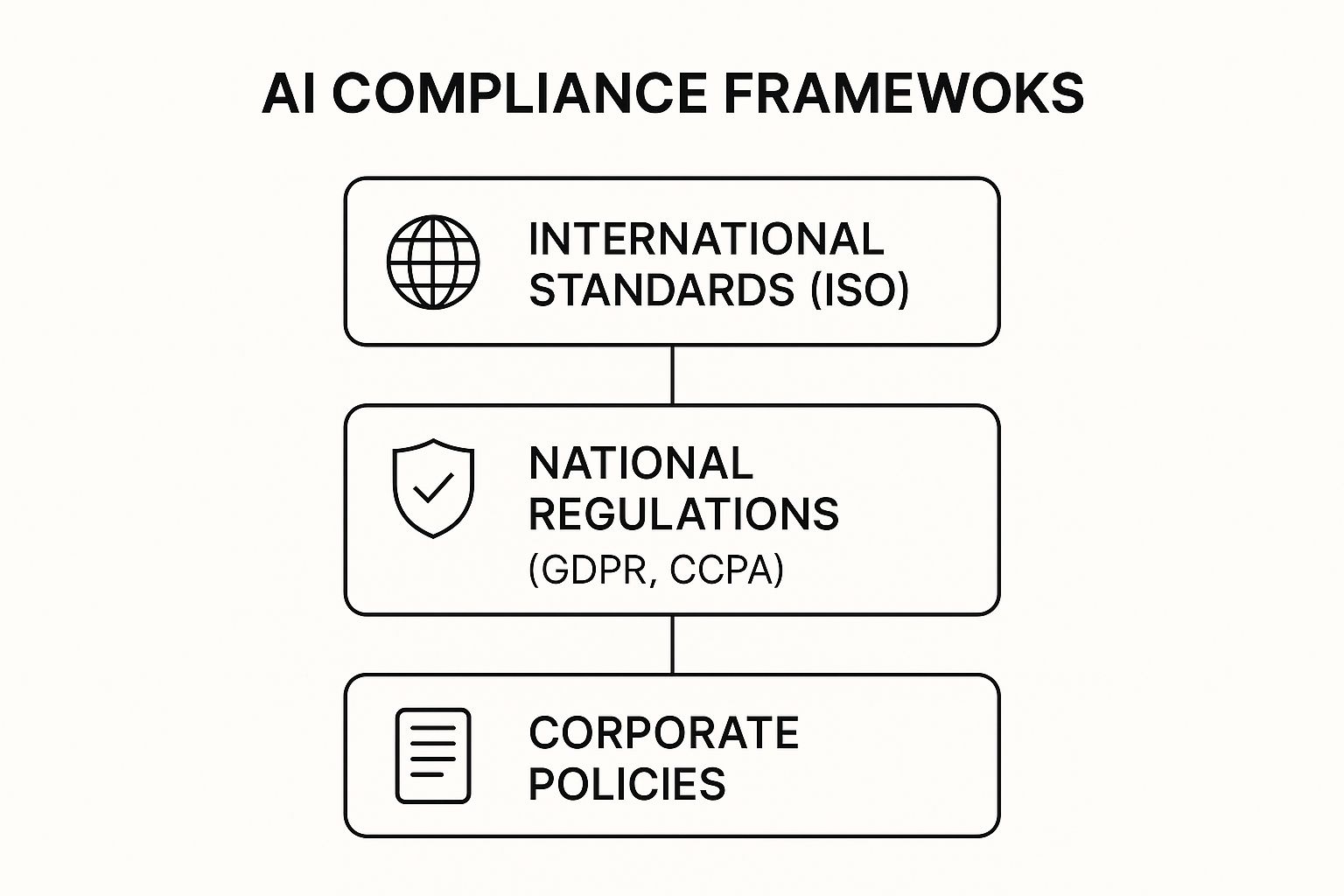

The image above shows how these regulatory frameworks, from global standards down to your own company's policies, create a layered approach to managing AI.

This hierarchy makes it clear that good AI compliance isn't about following just one regulation—it's about weaving these rules into every level of your operation.

To help you get a clearer picture of how these categories stack up, here’s a quick overview.

EU AI Act Risk Categories At a Glance

| Risk Category | Definition and Core Principle | Real-World Examples | Key Compliance Obligation |
|---|---|---|---|
| Unacceptable Risk | Poses a clear threat to fundamental rights and safety. The principle is prohibition. | Social scoring by governments, manipulative subliminal AI, real-time public biometric surveillance. | Outright ban. These systems cannot be placed on the market or used in the EU. |
| High-Risk | Could adversely impact safety or fundamental rights. The principle is strict oversight. | AI in medical devices, critical infrastructure, employment (hiring), and law enforcement. | Mandatory compliance with rigorous requirements for risk management, data governance, and documentation. |
| Limited Risk | AI that interacts with humans. The core principle is transparency. | Chatbots, deepfakes, and other AI-generated content. | Transparency obligation. Users must be informed they are interacting with an AI system. |
| Minimal Risk | All other AI systems with low potential for harm. The principle is innovation. | AI-powered spam filters, inventory management systems, and video game recommendation engines. | No mandatory legal obligations, but voluntary codes of conduct are encouraged. |

This table shows the "sliding scale" approach—the higher the risk, the stricter the rules.

Limited and Minimal Risk: The Lighter Touch

The final two categories come with much lighter requirements, which makes sense given their lower potential for harm.

Limited-risk AI systems are those that interact with people, and the main rule is just to be upfront about it. The whole idea here is disclosure. People have a right to know when they're talking to an AI, not a person. This bucket includes:

- Chatbots: Customer service bots must clearly identify themselves as automated.

- Deepfakes: Any AI-generated audio, image, or video content has to be labeled as artificial.

The compliance work here is simple: be transparent. There are no heavy documentation or risk management hoops to jump through.

Finally, we have the minimal-risk category, which covers the vast majority of AI systems out there today. These are applications like AI-powered spam filters, video game recommendation engines, or inventory management tools. The EU AI Act places no new legal burdens on these systems. While businesses are encouraged to adopt voluntary codes of conduct, it’s not a requirement. This "free pass" for minimal-risk AI is essential for letting innovation thrive where the stakes are low.
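The four tiers and their headline obligations can be captured in a small lookup, which is handy once you start tagging systems internally. This is only a sketch: the `RiskTier` enum, the `OBLIGATIONS` table, and the wording of each entry are illustrative summaries of this guide, not legal text, and deciding which tier a system belongs to remains a legal judgment.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Illustrative summary of the obligations described in this guide.
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["Do not place on the EU market or use in the EU"],
    RiskTier.HIGH: [
        "Risk management system",
        "Conformity assessment",
        "Data governance",
        "Technical documentation",
    ],
    RiskTier.LIMITED: [
        "Tell users they are interacting with an AI system",
        "Label AI-generated content",
    ],
    RiskTier.MINIMAL: [],  # voluntary codes of conduct only
}


def obligations_for(tier: RiskTier) -> list[str]:
    """Return a copy of the headline obligations for a risk tier."""
    return list(OBLIGATIONS[tier])


for tier in RiskTier:
    print(tier.value, "->", obligations_for(tier) or "no mandatory obligations")
```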

Your Action Plan for High-Risk AI Compliance

When an AI system is flagged as ‘High-Risk’ under the EU AI Act, the compliance game changes completely. The requirements become far more rigorous, moving beyond simple transparency into a world of deep, provable commitments to safety, fairness, and accountability. To get this right, you need a clear, structured game plan.

Think of it like preparing a commercial aircraft for flight. You don't just kick the tires and hope for the best. Instead, pilots follow a meticulous, documented checklist covering everything from engine performance to navigation systems. Your AI compliance plan for a high-risk system demands that same methodical approach—one that leaves nothing to chance.

This action plan breaks down the core pillars required by the EU AI Act. Consider it an essential checklist for any compliance officer or tech leader building a program that can withstand regulatory scrutiny.

Establish a Robust Risk Management System

First things first: you need a continuous risk management system. This isn't a one-time audit you can check off a list. It’s a living, breathing process that evolves with your AI system, from the initial design all the way to its eventual retirement. The goal here is to get ahead of potential harms by identifying, evaluating, and mitigating them proactively.

Your system needs to systematically analyze risks tied to the AI's intended purpose and even its potential misuse. This entire process must be documented and updated religiously, especially whenever the AI system is modified in any significant way.

Under the EU AI Act, risk management isn't just a good idea—it's a legal mandate. The system must be "a continuous iterative process" that runs throughout the entire lifecycle of a high-risk AI system, ensuring that risks are constantly monitored and addressed.

This constant vigilance is what keeps your compliance efforts strong, even as the tech and the world around it continue to change.

Conduct a Rigorous Conformity Assessment

Before your high-risk AI system can even touch the market, it has to pass a conformity assessment. This is the official verification process that proves your system meets every single requirement of the EU AI Act. It’s basically the AI equivalent of getting a new car safety-certified.

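This assessment is a top-to-bottom review of your entire compliance framework. Depending on the type of system, it is carried out either through the provider's own internal controls or with an independent notified body, and it puts your risk management processes, data governance quality, and technical documentation under a microscope. A successful assessment is your ticket to market access.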

Ensure High-Quality Data Governance

The performance of any AI is only as good as the data used to train, validate, and test it. For high-risk AI, the EU AI Act sets an incredibly high bar for data governance. You have to prove that your datasets are relevant, representative, and as free from errors and biases as you can possibly make them.

This boils down to a few critical actions:

- Data Examination: Dig into your datasets to find potential biases that could lead to discriminatory outcomes.

- Data Appropriateness: Make sure the data is directly relevant to what the AI is supposed to do.

- Data Quality: Set up and document clear procedures for how you collect, label, and clean your data.

Weak data governance is one of the fastest ways to find yourself in hot water. Biased or flawed data directly creates a high-risk, non-compliant AI system.
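As a starting point for the data examination step, a simple check of group representation and missing values can surface obvious problems early. The sketch below is illustrative only: the field names (`age_band`, `income`), the sample records, and the 20% threshold are all assumptions, and a real bias review goes well beyond counting rows.

```python
from collections import Counter


def group_shares(records, attribute):
    """Share of records per value of `attribute` (absent values count as None)."""
    if not records:
        raise ValueError("records must not be empty")
    counts = Counter(r.get(attribute) for r in records)
    return {value: n / len(records) for value, n in counts.items()}


def missing_rate(records, field):
    """Fraction of records where `field` is absent or None."""
    if not records:
        raise ValueError("records must not be empty")
    return sum(1 for r in records if r.get(field) is None) / len(records)


def underrepresented(shares, min_share):
    """Groups whose share falls below `min_share` (an assumed threshold)."""
    return sorted(v for v, s in shares.items() if v is not None and s < min_share)


# Hypothetical training records for a loan-scoring model.
records = [
    {"age_band": "18-30", "income": 32000},
    {"age_band": "18-30", "income": 41000},
    {"age_band": "18-30", "income": None},
    {"age_band": "18-30", "income": 38000},
    {"age_band": "18-30", "income": 29000},
    {"age_band": "31-50", "income": 52000},
    {"age_band": "31-50"},
    {"age_band": "51+", "income": 47000},
]

shares = group_shares(records, "age_band")
print("Underrepresented groups:", underrepresented(shares, min_share=0.2))
print("Missing income rate:", missing_rate(records, "income"))
```

Logging the output of checks like these alongside your data procedures also gives you evidence to point to in your technical documentation.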

Prepare Meticulous Technical Documentation

Finally, you must create and maintain comprehensive technical documentation. This is your official record, detailing every nut and bolt of your AI system for regulators. It has to be ready before the system goes live and kept current throughout its lifecycle. Think of it as the AI’s detailed blueprint and operational logbook all in one.

Regulators will be looking for this documentation to include:

1. General Description: A clear, plain-language overview of the AI system, its purpose, and its capabilities.

2. System Architecture: Detailed information on the models, algorithms, and data you're using.

3. Risk Management: The complete records of your risk assessments and the steps you've taken to mitigate them.

4. Conformity Assessment: All paperwork related to your successful conformity assessment.

5. Instructions for Use: Clear guidance for users to ensure they operate the system safely and as intended.

Trying to manage all this documentation can feel overwhelming. For more insight, you might find it helpful to read our guide on choosing the right software for compliance to help automate and simplify this critical task.

A Global View: AI Compliance Beyond the EU

While the EU AI Act is grabbing most of the headlines, it’s a mistake to think of it as a standalone event. The push for AI compliance is happening everywhere. Countries and industries across the globe are all wrestling with the same core question: how do we govern AI safely and ethically?

Think of the EU AI Act as the first major skyscraper in a city that’s still under construction. Its design—its principles and its structure—is going to influence every other building that goes up around it. This ripple effect means that preparing for the EU AI Act isn't just about doing business in Europe. It's about getting your entire organization ready for a world where AI regulation is the standard.

The Financial Sector: A Canary in the Coal Mine

If you want to see this global trend in action, look no further than financial services. In a field that’s already heavily regulated, AI has become a fundamental tool for everything from algorithmic trading to loan approvals. This has put AI governance front and center for regulators and compliance officers.

The concerns they face are very real. How do you prove an AI-powered loan algorithm isn't biased? How can you be sure an AI trading model won't create systemic risk? These questions are forcing financial firms to get serious about AI compliance right now, giving the rest of us a preview of what’s coming to other industries.

According to a recent Investment Management Compliance Testing (IMCT) Survey, AI is now the number one compliance concern for investment management professionals. The survey revealed that 57% of respondents now prioritize monitoring AI usage. At the same time, a worrying 44% of firms already using AI tools have no formal compliance policies or testing procedures in place. You can read more about this in the full survey results on AI in investment compliance.

A Patchwork of Global Regulations is Taking Shape

Beyond just finance, countries are building their own approaches to AI governance, and many are taking cues from the EU's risk-based model. This is creating a complex, but interconnected, web of global regulations.

- United States: The U.S. is tackling this on a sector-by-sector basis. Federal agencies like the SEC and NIST are releasing guidelines, while states like California and Colorado are passing their own AI laws focused on things like automated decision-making and algorithmic bias.

- Canada: The proposed Artificial Intelligence and Data Act (AIDA) in Canada looks a lot like the EU's framework. It focuses on high-impact systems and requires businesses to take steps to identify and reduce potential harms.

- Brazil: Brazil's AI bill also uses a risk-based classification system and sets out rights for people affected by AI, showing a clear echo of the principles seen in the EU.

Building a compliance strategy for the EU AI Act gives you a solid foundation that you can adapt for these emerging global rules. The core requirements—like risk assessments, data governance, and solid technical documentation—are becoming the universal building blocks of trustworthy AI, no matter where you operate.

This growing consensus means that the effort you put into complying with the EU AI Act is a smart investment. It positions your organization as a leader in responsible AI, ready to navigate the evolving global rules with confidence. Meeting these standards isn’t just about avoiding fines anymore; it’s about building a trusted, sustainable AI practice for the future.

Your AI Compliance Questions, Answered

Jumping into AI compliance can feel like trying to read a map in a new language, especially with game-changing rules like the EU AI Act setting new standards for businesses everywhere. Let's clear up some of the most common questions and give you a practical sense of what this all means for you.

Who Does the EU AI Act Actually Apply To?

This is the big one, and the answer surprises a lot of people. The EU AI Act has what's called extraterritorial scope, which is a formal way of saying its rules have very long arms. It doesn't matter where your company is based; if you put an AI system on the market in the EU, you're on the hook.

So, if you're a startup in Silicon Valley or a tech firm in Tokyo selling an AI-powered tool to customers in Germany or France, you have to follow the Act's rules. The same goes for any company located inside the EU that is using an AI system.

What Are the First Steps My Company Should Take?

First things first: you can't manage what you don't know you have. Start by taking a full inventory of every AI system you're developing, using, or selling. Get it all down on paper.

With that list in hand, your next job is to sort each system into one of the EU AI Act's risk categories: Unacceptable, High, Limited, or Minimal.

Think of this risk classification as the bedrock of your entire compliance plan. It dictates everything that comes next, from needing to scrap a system entirely to simply adding a transparency notice. If you've got high-risk systems, your immediate priority is to run a gap analysis to see how your current practices stack up against the Act's tough requirements.

This initial assessment will give you a clear roadmap. If you want to dig deeper into building out your compliance strategy, you can find a ton of great resources on our AI compliance blog.
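The inventory and gap analysis described above can start as something very small. Here is a minimal sketch, assuming each system has already been assigned a tier by your legal team; the `AISystem` class, the control names, and the sample inventory are hypothetical.

```python
from dataclasses import dataclass, field

# The four high-risk pillars covered in this guide, as illustrative labels.
HIGH_RISK_CONTROLS = {
    "risk_management",
    "conformity_assessment",
    "data_governance",
    "technical_documentation",
}


@dataclass
class AISystem:
    name: str
    tier: str  # "unacceptable", "high", "limited", or "minimal"
    controls_in_place: set = field(default_factory=set)


def gap_analysis(inventory):
    """Map each high-risk system to the sorted list of controls it still lacks."""
    return {
        s.name: sorted(HIGH_RISK_CONTROLS - s.controls_in_place)
        for s in inventory
        if s.tier == "high"
    }


def systems_to_withdraw(inventory):
    """Names of systems in the prohibited tier, which cannot stay on the market."""
    return [s.name for s in inventory if s.tier == "unacceptable"]


# Hypothetical inventory.
inventory = [
    AISystem("cv-screener", "high", {"risk_management"}),
    AISystem("support-chatbot", "limited"),
    AISystem("spam-filter", "minimal"),
]

print(gap_analysis(inventory))
print(systems_to_withdraw(inventory))
```

Even a spreadsheet-level model like this makes it obvious which high-risk systems need attention first.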

What Are the Penalties for Non-Compliance?

The EU isn't messing around here. The penalties for non-compliance are steep enough to get everyone's attention, and they're structured to fit the crime.

- Using AI for prohibited practices is the most serious offense. This can land you a fine of up to €35 million or 7% of your company's total worldwide annual turnover—whichever number is bigger.

- Failing to meet the rules for high-risk systems, among other violations, can result in penalties up to €15 million or 3% of your global turnover.

These aren't just slaps on the wrist; they're fines that make compliance a core financial priority for any business in the Act's orbit.
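To see how the two caps play out, here is a small worked calculation. It is a sketch that assumes the "whichever is bigger" rule applies to both tiers and uses whole euros; the function name and the turnover figures are illustrative.

```python
def max_fine_eur(turnover_eur: int, fixed_cap_eur: int, turnover_pct: int) -> int:
    """Upper bound of the fine: the larger of the fixed cap and a share of turnover."""
    return max(fixed_cap_eur, turnover_eur * turnover_pct // 100)


# (fixed cap in EUR, percentage of worldwide annual turnover)
PROHIBITED_PRACTICES = (35_000_000, 7)
HIGH_RISK_VIOLATIONS = (15_000_000, 3)

# Hypothetical companies with EUR 2 billion and EUR 100 million in turnover.
for turnover in (2_000_000_000, 100_000_000):
    print(
        f"Turnover {turnover:,}:",
        f"prohibited up to {max_fine_eur(turnover, *PROHIBITED_PRACTICES):,},",
        f"high-risk up to {max_fine_eur(turnover, *HIGH_RISK_VIOLATIONS):,}",
    )
```

For the larger company the percentage dominates (7% of €2 billion is €140 million), while for the smaller one the fixed cap of €35 million is the higher figure.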

Does the Act Apply to Open-Source AI Models?

The EU has taken a pretty reasonable stance on open-source AI. For the most part, free and open-source AI components are exempt from the Act.

The big exceptions are if those components are being used in a high-risk system or fall into one of the prohibited AI categories. Additionally, developers of "general-purpose AI models" who release their work under an open-source license still have some homework to do. They're required to provide detailed summaries of the content they used to train their models, which maintains a level of transparency for everyone.
