Navigating the EU AI Act: Your Practical Guide

The new EU artificial intelligence regulation, formally known as the AI Act, is the world's first comprehensive law for AI. It sets a global precedent for how to manage the rapid pace of AI development while safeguarding fundamental human rights.

A New Global Standard for AI Governance

Remember when the General Data Protection Regulation (GDPR) arrived? It completely changed the conversation around data privacy, not just in Europe, but worldwide. The AI Act is on track to do the exact same thing for artificial intelligence. This isn't just a rulebook for EU-based companies; its ripple effects are already being felt globally.

The legislation’s goal is to create a solid framework for trustworthy AI. It’s a delicate balancing act, really. On one side, it aims to boost innovation and let the AI sector flourish. On the other, it works to ensure the AI we use every day is safe, transparent, and respects our rights as individuals. The result is a more predictable legal environment where businesses can build with confidence and people can trust the technology that's increasingly a part of their lives.

Who Needs to Pay Attention

The Act’s authority doesn't stop at the EU’s borders. Its rules apply to any organization, anywhere in the world, that puts an AI system on the market in the EU or whose AI system impacts people within the EU. So, if your company offers an AI-powered product or service to European customers, you're on the hook for compliance.

This global reach, or "extraterritorial effect," makes getting to grips with the EU AI Act a top priority for businesses everywhere. The law uses a risk-based approach, sorting AI systems into different tiers, each with its own set of rules. Some applications are banned outright, while others simply need to meet transparency requirements. To dig deeper into these specifics, check out our comprehensive overview of the EU AI Act.

The EU AI Act is not just a regional policy but a blueprint for global AI governance. Its risk-based framework forces companies everywhere to re-evaluate how they design, deploy, and monitor AI to ensure safety and fairness.

The Legislative Journey

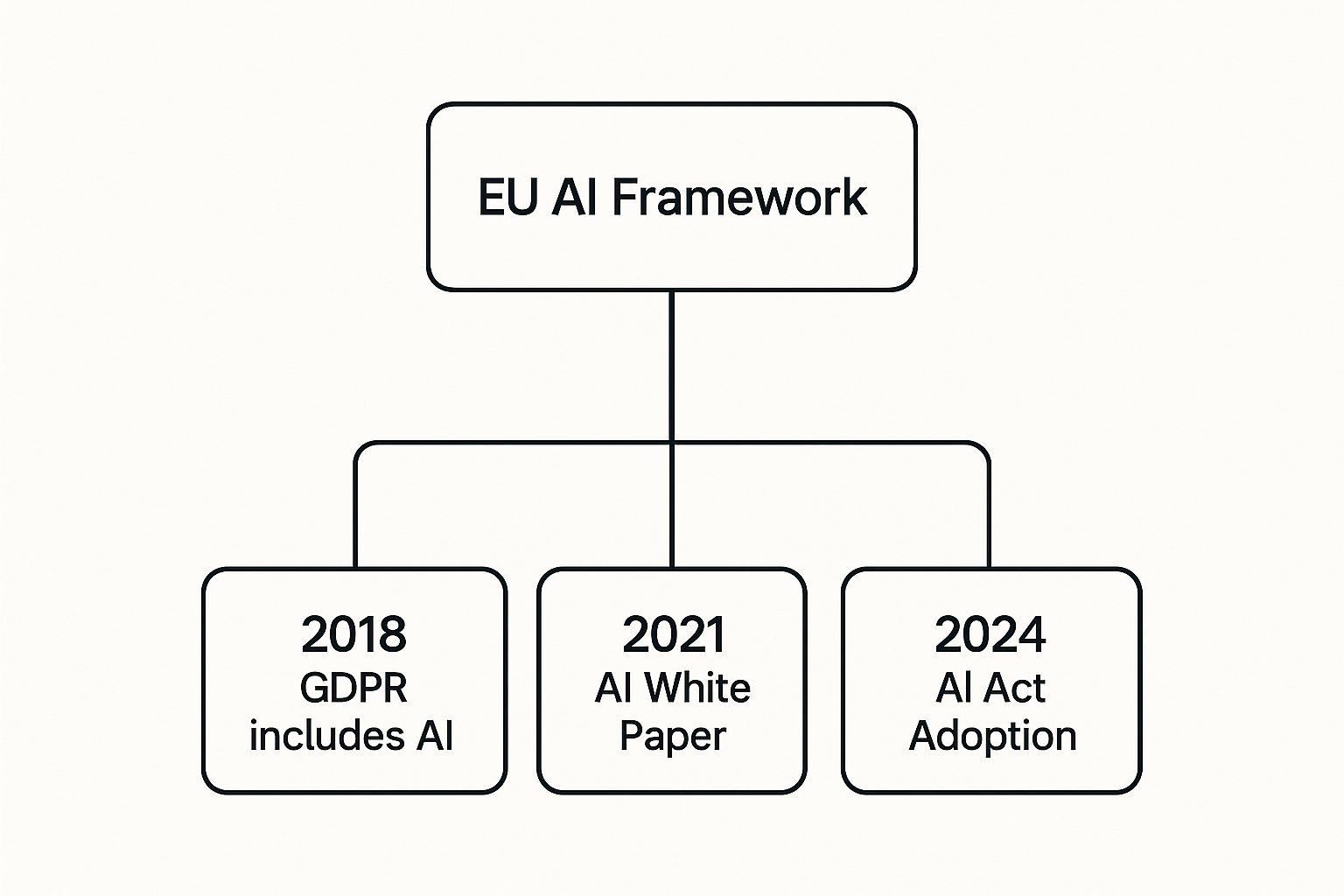

This regulation wasn't rushed. The path from proposal to law was a long and careful one. The European Commission first put forward the idea on April 21, 2021. After a period of intense negotiation and debate, the European Parliament gave its final approval on March 13, 2024.

Following the EU Council's endorsement, the Act was published in the Official Journal of the EU on July 12, 2024, and entered into force 20 days later, on August 1, 2024. However, the rules won't all hit at once. They're being phased in through 2027, giving organizations a runway to prepare and adapt.

How the EU AI Act Classifies Risk

At the heart of the EU's new AI law is an intuitive, risk-based approach. The easiest way to think about it is like a pyramid. Instead of slapping the same heavy-handed rules on every type of AI, the Act wisely sorts them into four distinct risk tiers.

This tiered system ensures the regulations are proportional. The strictest, most demanding rules are aimed squarely at AI that poses a real threat to our safety and fundamental rights. Meanwhile, it gives innovative, low-risk AI the breathing room it needs to grow. Getting a handle on which category your AI system fits into is the absolute first step toward compliance.

Unacceptable Risk: The Red Line

At the top of the pyramid are AI practices considered an unacceptable risk. These are so fundamentally at odds with EU values that they are banned outright, with only a few narrowly defined exceptions. The law draws a very clear red line against any AI designed to manipulate human behavior or exploit vulnerabilities.

Some examples of what's completely off-limits include:

- Government-led social scoring: Systems that let public authorities grade people based on their behavior or characteristics are forbidden.

- Real-time remote biometric identification: Using live facial recognition in public spaces by law enforcement is banned, with very narrow exceptions for serious crimes.

- Subliminal manipulation: AI that alters someone's behavior in a way that could cause them or someone else physical or psychological harm.

These prohibitions are all about preventing a slide into a surveillance state and protecting our ability to think and act for ourselves, free from technological manipulation.

High-Risk AI: Strict Requirements

This is the category that gets the most regulatory attention. High-risk AI systems aren't banned, but they must comply with strict requirements to prove they are safe, transparent, and accountable before they can be sold or used in the EU.

These are the kinds of AI applications used in critical areas where a mistake or biased result could have devastating consequences for someone's life, livelihood, or safety.

We're talking about AI used in things like:

- Medical devices that help diagnose diseases or plan surgeries.

- Critical infrastructure, such as systems managing our water or power grids.

- Hiring and employee management, where algorithms screen résumés or decide who gets a promotion.

- Education, like AI that grades exams or determines who gets into a university.

The journey to this point has been a long one, as the EU has been methodically building its regulatory framework for years.

That history shows the AI Act wasn't a knee-jerk reaction. It's the result of a deliberate, multi-year effort to get this right. The risk-based classification is the core principle that holds the entire regulation together.

The AI Act is serious business. The bans on 'unacceptable risk' AI will take effect just six months after the law enters into force. For 'high-risk' systems, the compliance runway is longer—up to 36 months—but the requirements for rigorous assessments, transparency, and human oversight are substantial. And the penalties for getting it wrong? They're staggering, with fines reaching up to €35 million or 7% of a company's global annual turnover.

Limited and Minimal Risk: Lighter Touch

Finally, we get to the base of the pyramid, which is where the vast majority of AI systems will land. These categories get a green light for innovation, facing much lighter regulatory burdens.

Limited-risk AI covers systems that interact directly with people, like a customer service chatbot or an app that generates deepfakes. The main rule here is just simple transparency. You have to make it crystal clear to users that they are talking to an AI or that the content they're seeing is artificially generated.

Then there's minimal or no-risk AI. This includes things like AI-powered video games or the spam filter in your email. These applications are free to operate without any new legal strings attached under the AI Act because they pose virtually no threat to people's rights or safety.

To make this all a bit easier to digest, here's a quick summary of the four risk categories.

The EU AI Act Risk Categories at a Glance

| Risk Level | Description & Examples | Primary Obligation |
|---|---|---|
| Unacceptable | AI systems that violate fundamental rights and EU values. Examples: Social scoring by governments, real-time biometric surveillance in public spaces. | Banned |
| High | AI in critical sectors with significant potential for harm. Examples: Medical devices, hiring algorithms, critical infrastructure management. | Strict Compliance (e.g., risk assessments, data governance, human oversight) |
| Limited | AI systems that interact with humans and carry a risk of deception. Examples: Chatbots, deepfake generators. | Transparency (Users must be informed they are interacting with an AI) |
| Minimal | AI with little to no risk to citizens' rights or safety. Examples: AI in video games, spam filters. | No new obligations (Voluntary codes of conduct are encouraged) |

This table neatly lays out how the Act tailors its rules to the actual danger an AI system poses, ensuring that the burden always matches the risk.

Meeting the Demands for High-Risk AI Systems

If your AI system lands in the ‘High-Risk’ category, this is where the EU AI Act really sinks its teeth in. These systems aren’t outright banned, but they come with a strict set of rules. The goal is to make sure they're safe, transparent, and fair from the drawing board to deployment and beyond.

Think of it like getting approval for a new medical device. You can't just put a pacemaker on the market because you think it works. You need to prove it through rigorous testing, documentation, and quality control. The same logic applies here. High-risk AI requires a structured, evidence-based approach before it can legally touch the EU market.

The Blueprint for Compliance

Getting a high-risk system to the finish line is a methodical journey. It starts long before a single user interacts with it and continues with ongoing monitoring once it's live. These core requirements are essentially a series of non-negotiable checks and balances.

These aren't just friendly suggestions; they're mandatory hurdles. Getting over them means translating dense legal text into a clear, actionable plan for your development and governance teams.

Under the EU AI Act, providers of high-risk systems must establish a robust quality management system. This is your operational backbone for compliance, covering everything from risk management procedures and data governance to technical documentation and post-market surveillance. It’s the documented proof that you have control over your AI’s lifecycle.

Core Pillars of High-Risk AI Compliance

The regulation builds compliance on several key pillars. Each one is designed to address a potential point of failure, whether it's biased training data or the lack of a human "off switch."

- Risk Management System: This isn't a one-and-done task. You must constantly identify, evaluate, and mitigate risks throughout the AI's life. For example, a hiring AI needs regular audits to ensure new data isn't introducing discriminatory patterns.

- Data Governance and Quality: The data you use to train your model has to be relevant, representative, and as clean from errors and biases as you can get it. Building a diagnostic tool with data from only one demographic could have disastrous consequences for everyone else.

- Technical Documentation: You’ll need to create and maintain the system’s "blueprint." This document must detail the AI's purpose, how it works, its limitations, and why certain design choices were made. This is what regulators will be looking at.

- Record-Keeping and Logging: Your system must be able to automatically log events as it operates. This traceability is absolutely critical for figuring out what went wrong if an incident occurs and for understanding the "why" behind an AI's decision.
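To make the record-keeping pillar more concrete, here is a minimal Python sketch of what an automatically logged event might look like. The field names (`system_id`, `model_version`, `input_ref`, `decision`, `human_override`) and the example values are illustrative assumptions; the Act does not prescribe this schema.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass
class DecisionEvent:
    """One traceable event emitted by a hypothetical high-risk AI system."""
    system_id: str
    model_version: str
    input_ref: str        # pointer to the stored input, not the raw data itself
    decision: str
    human_override: bool
    timestamp: str = ""

    def __post_init__(self):
        # Stamp the event in UTC if the caller did not supply a time.
        if not self.timestamp:
            self.timestamp = datetime.now(timezone.utc).isoformat()


def to_log_line(event: DecisionEvent) -> str:
    """Serialize an event as one JSON line, suitable for an append-only log."""
    return json.dumps(asdict(event), sort_keys=True)


event = DecisionEvent(
    system_id="cv-screener",
    model_version="1.4.2",
    input_ref="application/8841",
    decision="advance_to_interview",
    human_override=False,
)
log_line = to_log_line(event)
print(log_line)
```

Writing one self-describing JSON line per decision keeps the log easy to search when someone later has to reconstruct why a particular outcome occurred.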

Wrangling all these requirements is a major task, and many organizations are finding they need specialized tools for the job. You can learn more about how to manage compliance with risk control software that helps automate a lot of this heavy lifting.

Human Oversight and Final Assessments

Beyond the technical nuts and bolts, the Act insists on keeping people in the loop. Effective human oversight means giving people the ability to monitor, understand, and, when necessary, step in and override an AI's decision. Think of a doctor who has the final say on a diagnosis suggested by an AI, or a factory worker with an emergency stop button for an autonomous robot.
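One way to picture this in software is a gate where the AI only suggests and a person always decides. The sketch below is a hypothetical illustration, not a pattern mandated by the Act; `Suggestion` and `final_decision` are invented names.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Suggestion:
    """Output of a hypothetical AI model: a proposed label and its confidence."""
    label: str
    confidence: float


def final_decision(suggestion: Suggestion, reviewer_label: Optional[str]) -> Tuple[str, bool]:
    """Return (decision, overridden). The human reviewer's input always wins."""
    if reviewer_label is None:
        # No decision is released until a person has confirmed or overridden it.
        raise ValueError("a human reviewer must confirm or override the suggestion")
    return reviewer_label, reviewer_label != suggestion.label


ai_output = Suggestion(label="benign", confidence=0.91)
decision, overridden = final_decision(ai_output, reviewer_label="malignant")
print(decision, overridden)
```

Returning the `overridden` flag alongside the decision makes it easy to record how often reviewers disagree with the model.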

Finally, before any high-risk system can go to market, it has to pass a conformity assessment. This is the final exam. It’s a formal verification that you’ve met all the legal requirements. Passing gets you a CE marking—the official stamp of approval that your system complies with EU standards and is ready for prime time.

A New Rulebook for General-Purpose AI Models

While the EU AI Act's risk pyramid neatly categorizes specific AI applications, the explosion of powerful, multi-talented models like those powering ChatGPT demanded a different approach. These General-Purpose AI (GPAI) models—often called foundation models—aren't built for a single job. They are chameleons, capable of being adapted for a staggering number of uses.

This incredible flexibility is their superpower, but it's also a regulatory headache.

Recognizing this, EU lawmakers created a separate, two-tiered framework just for GPAI. They knew a one-size-fits-all rule was a non-starter for a technology that could be used for anything from writing poetry to guiding a surgical robot. The focus here is on the original creators of the model, holding them accountable for their technology's capabilities, data, and limitations.

The Ground Rules for All GPAI Models

No matter how big or small, every GPAI model provider has to play by a core set of transparency rules. Think of this as the basic standard of care, ensuring that developers who build on top of these models have the information they need to do so safely and responsibly.

These aren't just suggestions; they are non-negotiable requirements for any GPAI model on the EU market.

The main obligations are:

- Detailed Technical Documentation: Providers have to create and maintain a comprehensive "biography" of their model. This includes how it was trained, what data it learned from, and the results of its testing and evaluation. It’s the AI equivalent of an ingredient list and instruction manual.

- Clear Information for Downstream Developers: They must give companies using their model the necessary instructions to comply with their own EU AI Act duties. It’s about passing the baton of responsibility correctly.

- Compliance with EU Copyright Law: Providers need a clear policy to show they respect EU copyright law—a hot-button issue given the vast amounts of web-scraped data used to train most large models.

This baseline ensures that even smaller, open-source models can't be a complete "black box." It creates a foundational layer of transparency across the board.

The EU’s strategy for GPAI is quite clever. It doesn't automatically label the model itself as high-risk. Instead, it places transparency duties on the provider, acknowledging that the real risk comes from how the model is actually applied in the real world.

Raising the Bar for Models with "Systemic Risk"

The regulation saves its strictest rules for the titans of the AI world: the most powerful foundation models that are deemed to pose “systemic risks.” A model is presumed to earn this label if the cumulative compute used to train it exceeds 10^25 floating-point operations (FLOPs). This number is essentially a proxy for a model's potential power and societal influence.

For the providers of these high-impact models, the compliance checklist gets a lot longer. This reflects their potential to shape economies, influence public opinion, and introduce new, large-scale risks.
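For a rough sense of where a model sits relative to that compute line, the sketch below compares an estimate against the Act's presumption threshold of 10^25 training FLOPs. The estimate uses the widely cited rule of thumb of about 6 FLOPs per parameter per training token, which is an assumption of this example and not a method defined in the Act. The parameter and token counts are made up.

```python
SYSTEMIC_RISK_THRESHOLD_FLOPS = 1e25  # presumption threshold for systemic risk


def estimate_training_flops(n_parameters: float, n_tokens: float) -> float:
    """Approximate training compute with the 6 * N * D heuristic (an assumption)."""
    return 6.0 * n_parameters * n_tokens


def presumed_systemic_risk(training_flops: float) -> bool:
    """True if the estimated compute exceeds the presumption threshold."""
    return training_flops > SYSTEMIC_RISK_THRESHOLD_FLOPS


# Hypothetical models: 7 billion parameters on 2 trillion tokens,
# and 1 trillion parameters on 10 trillion tokens.
small_model = estimate_training_flops(7e9, 2e12)
large_model = estimate_training_flops(1e12, 1e13)

print(presumed_systemic_risk(small_model))
print(presumed_systemic_risk(large_model))
```

A provider's real figure would come from its own training records; the heuristic is only useful for an early sanity check.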

These extra duties are all about getting ahead of potential problems. They include:

- Conducting Model Evaluations: Running the model through standardized tests to identify and mitigate any systemic risks it might pose.

- Performing Adversarial Testing: This is "red-teaming," where experts are hired to actively try and break the model or make it do harmful things. The goal is to find these flaws before bad actors do.

- Reporting Serious Incidents: If something goes wrong, they must report it to the European Commission's new AI Office.

- Bolstering Cybersecurity: Implementing top-tier security measures to protect these incredibly valuable and powerful models from being stolen or tampered with.

This two-tier system is the EU's pragmatic answer to a complex problem. It makes sure every foundation model comes with a basic level of transparency, while rightly placing the heaviest burden on the few massive models that could cause the most disruption.

How the EU AI Act Is Enforced

Let's be honest: a regulation is only as good as its enforcement. The EU artificial intelligence regulation gets this, and it’s backed by a powerful, multi-layered system designed to ensure companies actually follow the rules. Compliance is not optional; ignoring the Act comes with serious consequences.

Think of the enforcement structure like a team of referees in a high-stakes game. You have a central authority setting the standard, local officials in each country watching the play on the ground, and everyone is working from the same rulebook.

This coordinated approach is crucial. It ensures the Act is applied the same way across all 27 EU member states, so companies can’t just shop around for a country with weaker oversight to find a loophole.

The Key Enforcement Players

Enforcing the EU AI Act isn't a one-person job. Instead, it’s a network of different bodies, each with its own role, creating a solid system of checks and balances.

Here are the main players you need to know:

- The European AI Office: This is the new command center, operating from within the European Commission. Its main job is to oversee the rules for general-purpose AI models, coordinate actions across the EU, and publish clear guidelines to help everyone get on the same page.

- National Competent Authorities: Every EU member state will appoint its own "boots on the ground." These are the national bodies that will conduct market surveillance, investigate potential violations, and hand out penalties within their borders.

- The European Artificial Intelligence Board: Think of this board as the bridge connecting the national authorities and the central AI Office. It’s made up of representatives from each country (plus the European Data Protection Supervisor) and its role is to advise the AI Office and make sure the rules are being applied consistently everywhere.

The High Cost of Non-Compliance

The financial penalties for getting it wrong are steep. And that’s by design. The fines are structured to hit where it hurts, making compliance a much smarter financial decision than taking a risk.

The most serious violations, meaning the use of AI practices prohibited under Article 5, can result in fines up to €35 million or 7% of a company's total worldwide annual turnover, whichever is higher.

If that sounds familiar, it should. This penalty structure is even tougher than GDPR’s, whose top tier is €20 million or 4% of worldwide annual turnover, which is a clear signal of just how seriously the EU is taking AI safety and the protection of fundamental rights.

The fines are broken down into tiers:

| Violation Type | Maximum Fine |
|---|---|
| Prohibited AI Practices (Article 5) | €35 million or 7% of global annual turnover |
| Non-compliance for High-Risk AI | €15 million or 3% of global annual turnover |
| Supplying Incorrect or Misleading Information | €7.5 million or 1% of global annual turnover |

These are eye-watering numbers. They make it pretty obvious that the cost of ignoring the rules will far outweigh the investment required to comply. And that’s before you even consider the reputational fallout. Being publicly shamed for breaching the world's most comprehensive AI regulation could be just as damaging to a business as the fine itself.
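The "whichever is higher" rule is simple arithmetic, and a short Python sketch shows how the cap scales with company size. The function name and the turnover figures are hypothetical; the two tiers shown are the €35 million / 7% and €15 million / 3% ceilings described above.

```python
def max_fine_eur(fixed_cap_eur: int, turnover_percent: int, annual_turnover_eur: int) -> int:
    """Upper bound of a fine: the higher of the fixed cap and the turnover share."""
    turnover_based = annual_turnover_eur * turnover_percent // 100
    return max(fixed_cap_eur, turnover_based)


# Hypothetical company with EUR 2 billion in worldwide annual turnover.
big_prohibited = max_fine_eur(35_000_000, 7, 2_000_000_000)
big_high_risk = max_fine_eur(15_000_000, 3, 2_000_000_000)

# Hypothetical company with EUR 100 million in worldwide annual turnover.
small_prohibited = max_fine_eur(35_000_000, 7, 100_000_000)

print(big_prohibited, big_high_risk, small_prohibited)
```

For the larger company the percentage drives the ceiling, while for the smaller one the fixed amount does. These are maximums, not the amounts a regulator would necessarily impose.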

Your Compliance Timeline and Key Deadlines

Knowing what the EU AI Act says is one thing, but getting ready for its phased rollout is the real challenge. The law doesn't just flip on like a switch. Instead, its rules are staggered over 36 months, giving everyone a roadmap to follow as they get their systems and processes in order.

This rollout is intentional. It gets the most urgent rules in place first, while giving businesses a realistic amount of time to tackle the more complex requirements, especially those for high-risk AI. If you want to avoid a last-minute panic, you need to know these dates.

The First Six Months

The clock is officially ticking. The very first deadline comes up fast, and it’s focused squarely on AI systems that pose the biggest threats to our fundamental rights.

By 6 months after entry into force, the ban on unacceptable-risk AI systems becomes fully enforceable. This means any tech used for things like government-run social scoring or other manipulative practices outlawed by Article 5 has to be shut down. It's a clear signal from the EU: some lines just can't be crossed.

The One-Year Mark

Once the outright bans are in place, the focus pivots to the powerful models that are the engines behind so many of today's AI tools.

By 12 months after entry into force, the rules for General-Purpose AI (GPAI) models kick in. This is a big one. It brings in key transparency duties, like creating detailed technical documentation and proving you're respecting EU copyright law. This deadline goes right to the heart of the foundation models that have quickly become central to the entire AI ecosystem.

The EU AI Act’s timeline isn't just a list of dates; it's a strategic plan. It tackles the biggest risks first, with prohibitions taking effect in just six months, while giving organizations two to three years to meet the comprehensive demands for high-risk AI.

The Two-Year and Three-Year Deadlines

The final stages of the rollout are when the rest of the regulation comes online, building up to the comprehensive framework for high-risk systems.

By 24 months after entry into force, most of the EU AI Act will be in full swing, including the obligations for the high-risk use cases listed in Annex III. But there's a crucial extension for one group: high-risk AI that acts as a safety component of a product already covered by EU product legislation has until 36 months after entry into force. Either way, the preparation covers all the heavy lifting, like conformity assessments, solid risk management, and ensuring meaningful human oversight is in place.

The EU AI Act’s implementation is structured as a multi-stage process. To help you visualize the journey ahead, here’s a breakdown of the key milestones and what they mean for different types of AI systems.

EU AI Act Implementation Timeline

| Time After Entry into Force | Key Provisions Taking Effect | Applies To |
|---|---|---|
| 6 Months | Ban on prohibited AI practices (Article 5) | Systems with unacceptable risks |
| 12 Months | Obligations for GPAI models | Providers of General-Purpose AI models |
| 24 Months | General application of the Act, including high-risk obligations for Annex III use cases | Most AI systems, including Annex III high-risk systems |
| 36 Months | High-risk obligations for AI embedded in regulated products | High-risk systems that are safety components of products under existing EU product legislation |

This timeline gives every organization a clear, step-by-step path to compliance. It ensures the most immediate dangers are addressed quickly while providing the necessary time to adapt to the more intricate requirements.
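For planning purposes, the month offsets can be turned into calendar dates with a few lines of Python. This sketch uses August 1, 2024 as the entry-into-force date; the `add_months` helper and the milestone labels are illustrative. The Act fixes its own application dates in the legal text, and those can differ by a day from a plain month offset, so treat the output as an approximation to check against the official dates.

```python
import calendar
from datetime import date

ENTRY_INTO_FORCE = date(2024, 8, 1)

# Month offsets from the timeline; labels are shorthand for this example.
MILESTONES = {
    6: "prohibitions",
    12: "gpai_obligations",
    24: "general_application",
    36: "extended_high_risk_deadline",
}


def add_months(start: date, months: int) -> date:
    """Shift a date forward by whole months, clamping the day to the month's end."""
    total = start.month - 1 + months
    year = start.year + total // 12
    month = total % 12 + 1
    day = min(start.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


schedule = {label: add_months(ENTRY_INTO_FORCE, m) for m, label in MILESTONES.items()}
for label, when in schedule.items():
    print(f"{label}: {when.isoformat()}")
```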

Answering Your Questions About the EU AI Act

As the EU's landmark AI regulation moves from a concept to a concrete reality, it’s natural to have questions. This law is a big deal, and its rules will be felt far beyond Europe’s borders, so it's smart to get a handle on what it means for your business.

Let's dive into some of the most common questions we're hearing from companies and developers. The goal here is to give you clear, straightforward answers to help you figure out where you stand and what to do next.

Does This Law Affect My Company if We're Not in the EU?

Almost certainly, yes. The EU AI Act was written with a very long reach, what lawyers call extraterritorial effect. This basically means its rules apply beyond the EU’s physical borders.

If you put an AI system on the market anywhere in the EU, or if people inside the EU are using the output of your AI, you're on the hook. For example, a US-based company selling an AI-powered hiring tool to a client in Germany has to play by the same rules as a European company. This global reach makes the AI Act required reading for any business with an international footprint.

We Need to Comply. Where Do We Even Start?

The very first thing you need to do is a simple inventory. Make a comprehensive list of every single AI system your organization builds, buys, or sells. This is the absolute foundation for figuring out your obligations under the new EU artificial intelligence regulation.

With that list in hand, you can start sorting each system into one of the Act’s four risk buckets:

- Unacceptable Risk: These are the systems that will be flat-out banned.

- High-Risk: These face the toughest and most demanding rules.

- Limited Risk: These just need to be transparent with users.

- Minimal Risk: These are largely free of any new legal requirements.

This internal audit is your roadmap. It tells you exactly where to focus your compliance efforts so you're not wasting time and resources.
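A first pass at that inventory can be as simple as a small script. The Python sketch below is a hypothetical triage aid: the use-case tags, the `AISystem` record, and the example systems are all invented for illustration, and a real classification needs legal review against the Act's text.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Illustrative tags drawn from the examples in this guide, not an official list.
PROHIBITED_USES = {"social_scoring", "subliminal_manipulation"}
HIGH_RISK_USES = {"recruitment", "education_access", "credit_scoring",
                  "critical_infrastructure", "law_enforcement", "medical_device"}
TRANSPARENCY_USES = {"chatbot", "deepfake_generation"}


@dataclass
class AISystem:
    name: str
    use_case: str


def triage(system: AISystem) -> RiskTier:
    """Assign a provisional tier, checking the strictest categories first."""
    if system.use_case in PROHIBITED_USES:
        return RiskTier.UNACCEPTABLE
    if system.use_case in HIGH_RISK_USES:
        return RiskTier.HIGH
    if system.use_case in TRANSPARENCY_USES:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


inventory = [
    AISystem("resume-ranker", "recruitment"),
    AISystem("support-bot", "chatbot"),
    AISystem("inbox-filter", "spam_filtering"),
]
tiers = {s.name: triage(s) for s in inventory}
summary = Counter(tier.value for tier in tiers.values())
print(summary)
```

Checking the strictest tier first means a system that matches more than one category is always treated under the heavier obligations.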

It’s a huge misconception that this is only a "big tech" problem. The reality is that any organization using AI in a critical function—from a small startup doing credit scoring to a manufacturer with AI-driven machinery—needs to get a clear picture of what the EU AI Act requires of them.

How Do I Know if My AI Is Considered High-Risk?

This is the million-dollar question for most companies. Pinpointing whether your system is high-risk is probably the most critical step you'll take. Generally, an AI system gets this label if it's used as a safety component in a product that's already regulated under EU law—think medical devices, elevators, or even toys.

The Act also provides a specific list of high-risk use cases in what's called Annex III. If your AI is used for any of the following, it’s presumed to be high-risk unless a narrow exemption applies:

- Recruitment, hiring, and managing employees.

- Determining access to education or vocational training.

- Credit scoring or assessing someone's creditworthiness.

- Managing critical infrastructure like water or electricity grids.

- Use in law enforcement or the justice system.

If your AI does any of these things, get ready for the Act’s strictest requirements. We're talking about intensive documentation, continuous risk management, and mandatory human oversight.