Your Guide to the EU AI Act and Compliance

The EU AI Act is a landmark piece of legislation. Think of it like GDPR, but for artificial intelligence. It's the world's first comprehensive legal framework for AI, setting the rules for anyone creating, selling, or using AI systems that impact people within the European Union. At its core, the Act uses a risk-based approach to balance innovation with protecting our fundamental rights.

Why the EU AI Act Changes Everything for Global Tech

With the EU AI Act, we're moving past abstract ethical guidelines and into the world of concrete legal duties. This isn't just another box to tick for European companies; it's a global blueprint that will reshape how AI is governed everywhere. If your organization touches AI in any way, getting to grips with this legislation is critical for your future.

The Act’s main goal is to build trust. It wants to make sure AI systems are safe, transparent, and don't trample on human rights. It does this by sorting AI applications into different risk levels and applying the toughest rules to the ones that pose the biggest threat. This approach is designed to encourage responsible innovation while shutting down AI practices that are simply too dangerous.

A New Global Standard for AI

Don't let the "EU" in the name fool you; this law's impact is global. The legislative journey started with a proposal way back in April 2021 and finally became official with its publication on July 12, 2024. Now, the EU AI Act is effectively setting the international benchmark. Companies all over the world will need to get on board if they want to operate in the massive EU market. You can track the entire legislative journey by checking out the detailed timeline of the EU AI Act.

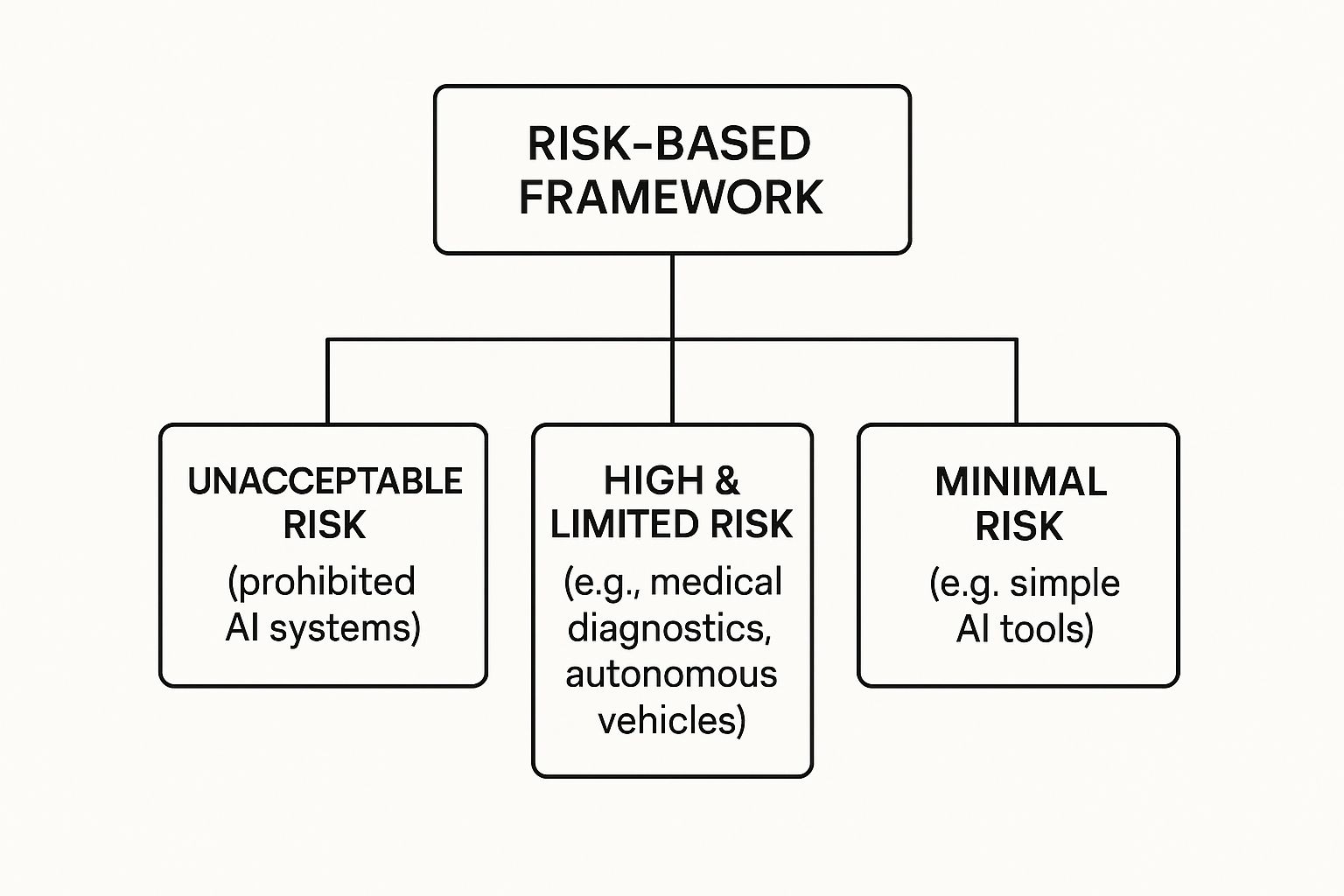

This visual from the European Commission’s own page captures the essence of the AI Act’s strategy.

As you can see, the aim is twofold: drive AI adoption while managing the associated risks. It’s all about creating an AI ecosystem that people can actually trust.

What This Guide Will Cover

Feeling a bit overwhelmed? Don't be. We've built this guide to give you a clear, practical roadmap for navigating the EU AI Act. We'll walk through every important piece, step-by-step, turning complex rules into actionable insights.

Here's what we're going to unpack:

- The Risk-Based Framework: We’ll break down the different risk categories and show you how to figure out where your AI systems fit.

- Critical Deadlines: You'll get a simple timeline showing when different parts of the law kick in, so you can plan ahead.

- Your Core Obligations: We'll dive into the specific rules you need to follow, especially for high-risk AI.

- The Cost of Non-Compliance: A look at the steep penalties for getting it wrong, which can reach €35 million or 7% of worldwide annual turnover, whichever is higher.

Understanding the AI Act's Risk-Based Framework

The EU AI Act is built on a simple, powerful idea: not all AI is created equal. The regulation recognizes that an AI-powered spam filter and an AI system used in medical diagnostics pose vastly different levels of risk. So, instead of a one-size-fits-all approach, it creates a tiered framework that scales the rules to match the potential harm.

Think of it like a pyramid. The most dangerous, society-altering AI applications sit at the very top and face the strictest rules, including outright bans. As you move down the pyramid, the obligations become lighter. Getting this classification right is the absolute first step in your compliance journey. Misclassify your system, and you could be facing some serious penalties for non-compliance down the road.

The Four Tiers of AI Risk

The AI Act sorts AI into four distinct categories. It's a bit like a traffic light system: red means stop (unacceptable risk, banned), yellow means proceed with extreme caution (high risk), and green means you're largely good to go, with light transparency duties for limited-risk systems and no new obligations for minimal-risk ones.

This structure is designed to focus the regulatory firepower where it's needed most—on the systems that could genuinely impact people's safety, livelihoods, and fundamental rights.

The vast majority of AI systems will fall into the lower-risk categories, leaving innovators free to experiment. But for those at the top, the rules are non-negotiable. Let’s break down what each tier really means.

EU AI Act Risk Tiers at a Glance

To make sense of the AI Act's structure, it helps to see the categories side-by-side. The table below provides a quick summary of the four risk tiers, what they cover, and the main regulatory expectations for each.

| Risk Level | Description & Examples | Regulatory Action |
|---|---|---|
| Unacceptable | AI systems that are considered a clear threat to people's safety, livelihoods, and rights. Examples: social scoring, manipulative subliminal techniques, untargeted scraping of facial images from the internet. | Banned. These systems are prohibited from being used in the EU. |
| High | AI with the potential to significantly harm health, safety, or fundamental rights. Examples: AI in medical devices, resume-screening tools, credit scoring systems, and critical infrastructure management. | Strict compliance. These systems require rigorous testing, clear documentation, risk management, and human oversight before they can enter the market. |
| Limited | AI systems that pose a transparency risk. Users need to know they are interacting with an AI. Examples: chatbots, deepfakes, and other AI-generated content. | Transparency obligations. Providers must ensure users are aware they are dealing with an AI or that content is artificially generated. |
| Minimal/No | The vast majority of AI systems that pose little to no risk. Examples: AI-enabled video games, spam filters, and inventory management systems. | No new legal obligations. These systems can be developed and used freely, though voluntary codes of conduct are encouraged. |

This tiered approach ensures the regulation is targeted and proportionate, applying the brakes where necessary while allowing low-risk innovation to flourish.
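To make the tiering concrete, here is a minimal Python sketch of a first-pass triage helper. It assumes you have already tagged each system with hypothetical use-case labels (names like `resume_screening` are illustrative, not terms from the Act) and simply returns the most severe tier that any tag triggers. Treat it as a screening aid under those assumptions, not a legal classification.

```python
from enum import Enum


class RiskTier(Enum):
    """The four tiers of the EU AI Act's risk pyramid."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical use-case tags for illustration only; the Act's real criteria
# are far more detailed than a keyword lookup.
PROHIBITED_USES = {"social_scoring", "subliminal_manipulation", "untargeted_face_scraping"}
HIGH_RISK_USES = {"medical_device", "resume_screening", "credit_scoring", "critical_infrastructure"}
TRANSPARENCY_USES = {"chatbot", "deepfake_generation"}


def triage(use_case_tags: set[str]) -> RiskTier:
    """Return the most severe tier triggered by any of the given tags."""
    if use_case_tags & PROHIBITED_USES:
        return RiskTier.UNACCEPTABLE
    if use_case_tags & HIGH_RISK_USES:
        return RiskTier.HIGH
    if use_case_tags & TRANSPARENCY_USES:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


print(triage({"chatbot"}))                      # RiskTier.LIMITED
print(triage({"chatbot", "resume_screening"}))  # RiskTier.HIGH
print(triage({"spam_filter"}))                  # RiskTier.MINIMAL
```

Checking the most severe tier first mirrors the pyramid: a chatbot that also screens resumes is treated as high-risk. In practice the tiers are not fully exclusive, since a high-risk system can still carry transparency duties, so a real tool would report every applicable obligation rather than a single label.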

Unacceptable Risk: The Red Lines

At the very top of the pyramid is the Unacceptable Risk category. These are the AI practices that the EU has deemed a fundamental threat to human rights and democratic values. As a result, they are completely banned.

The goal here is to prevent technology that is inherently manipulative, discriminatory, or invasive. Some of the most prominent examples include:

- Social scoring systems, whether run by public authorities or private companies, that judge people based on their behavior or personal traits in ways that lead to unfair treatment.

- Emotion recognition tools used in the workplace or at schools, with very few exceptions.

- AI that uses subliminal techniques to manipulate people into actions that could cause them harm.

These prohibitions are the first part of the Act to kick in, sending a clear message about the ethical lines the EU is not willing to cross. For a deeper dive, check out our guide on the full list of prohibited AI practices.

High-Risk AI: Proceed with Caution

The next level down is for High-Risk AI Systems. These aren't banned, but they are subject to the Act's most demanding rules. This is where the bulk of the compliance work really lies. A system is considered "high-risk" if its failure could seriously impact someone's health, safety, or basic rights.

The EU AI Act places the heaviest compliance burden on high-risk systems, requiring robust risk management, high-quality data governance, detailed technical documentation, and meaningful human oversight throughout the system's lifecycle.

Generally, high-risk AI falls into one of two buckets:

1. AI that is a safety component of a regulated product. Think AI systems used in cars, elevators, or medical devices that are already governed by EU safety laws.

2. Standalone AI systems used in sensitive areas. This is a specific list of use cases, including AI for biometric identification, managing critical infrastructure, making hiring decisions, or determining access to education.

Limited and Minimal Risk: The Green Light

Finally, we have the two largest categories at the base of the pyramid: Limited Risk and Minimal Risk.

Limited-risk systems, like a customer service chatbot or a tool that generates deepfakes, simply need to be transparent. You just have to make it clear to people that they're interacting with an AI or viewing AI-generated content. No complex compliance frameworks are needed.
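One simple way to handle the chatbot disclosure is to attach a plain-language notice to the first reply in each conversation and keep a machine-readable flag on generated content. The sketch below assumes exactly that design; the notice wording, the hypothetical `ChatReply` type, and the first-turn rule are illustrative choices, not text prescribed by the Act.

```python
from dataclasses import dataclass

# Hypothetical notice text; the Act requires that people be informed,
# but it does not prescribe this exact wording.
AI_DISCLOSURE = "You are chatting with an AI assistant, not a human."


@dataclass
class ChatReply:
    text: str
    ai_generated: bool = True  # machine-readable flag for downstream systems


def with_disclosure(reply: ChatReply, first_turn: bool) -> str:
    """Prepend the AI notice to the first AI-generated reply of a conversation."""
    if first_turn and reply.ai_generated:
        return f"{AI_DISCLOSURE}\n\n{reply.text}"
    return reply.text


print(with_disclosure(ChatReply("How can I help?"), first_turn=True))
```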

The Minimal Risk category covers almost everything else—from AI-powered spam filters to inventory management software. For these countless applications, the EU AI Act imposes no new rules. The idea is to let innovation thrive where the risks are practically non-existent.

Navigating Critical Compliance Deadlines

Getting compliant with the EU AI Act isn't a single event with one big deadline. It’s a multi-year rollout, with critical milestones spread out over time. Think of it less like a sprint to a finish line and more like a carefully planned road trip with several essential stops along the way.

This staggered approach is by design. The EU is tackling the most urgent issues first while giving everyone else enough runway to prepare for the more complex obligations. The clock is already ticking, and understanding this roadmap is the first step toward a compliance strategy that doesn't end in a last-minute panic.

The Phased Rollout Explained

The logic behind the timeline is pretty simple: deal with the biggest risks first. The very first rules to kick in are for "unacceptable risk" AI systems—the stuff that’s now flat-out banned. This sends a loud and clear message about the ethical lines the EU is drawing in the sand.

After that, the focus shifts to the powerful, general-purpose models that are the foundation for so many other AI tools. Finally, the full, comprehensive set of rules for all other high-risk systems comes into play. This progression gives everyone in the supply chain, from the developers building the core models to the companies deploying them, time to get their house in order.

The EU AI Act's staggered timeline isn't just a list of dates; it’s a strategic rollout designed to tackle the most severe risks first, giving organizations a clear, albeit challenging, path toward full compliance. Waiting is not a viable strategy.

This tiered rollout means different teams in your organization might be on different schedules. For instance, a team working with a new General-Purpose AI (GPAI) model has a much earlier deadline than one using a more established, specialized high-risk system.

Your Actionable Compliance Timeline

To get ready, you need to know exactly which dates apply to you. The Act entered into force on August 1, 2024, and its obligations phase in over roughly three years. The prohibitions apply from February 2, 2025. From August 2, 2025, providers of new general-purpose AI (GPAI) models must comply. From August 2, 2026, the rules apply to most high-risk AI systems, including standalone use cases such as hiring and credit scoring. August 2, 2027, is the deadline both for high-risk AI that is a safety component of a regulated product and for GPAI models that were already on the market before August 2, 2025. For a deeper dive, you can explore a detailed breakdown of the EU AI Act timeline on dataguard.com.

Here’s a simplified breakdown of the major milestones you should have circled on your calendar:

- Phase 1: Prohibitions on Unacceptable-Risk AI (February 2, 2025). These rules are already in force, banning systems like social scoring or manipulative AI. If you were doing anything in this category, you should have stopped already to avoid the harshest penalties.

- Phase 2: Rules for GPAI Models (August 2, 2025). This next wave targets the providers of large, versatile AI models. It brings in requirements for technical documentation, transparency, and respecting copyright law during training.

- Phase 3: Full High-Risk System Compliance (August 2, 2026, and August 2, 2027). This is the big one. The complete set of obligations for high-risk AI systems comes into force, from 2026 for standalone high-risk use cases and from 2027 for AI that is a safety component of a regulated product. It covers everything from risk management and data governance to human oversight and conformity checks.
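Because different obligations switch on at different dates, it can help to encode the milestones and ask which ones already apply on a given day. This minimal Python sketch hard-codes the main application dates of the Act as published in the Official Journal; the labels are simplified, so check the regulation itself (and any later amendments) before relying on them for a specific system.

```python
from datetime import date
from typing import Optional

# Main application dates of the EU AI Act (labels simplified for illustration).
MILESTONES = [
    (date(2025, 2, 2), "Prohibitions on unacceptable-risk AI"),
    (date(2025, 8, 2), "Obligations for new general-purpose AI (GPAI) models"),
    (date(2026, 8, 2), "Obligations for standalone high-risk AI systems"),
    (date(2027, 8, 2), "Obligations for high-risk AI in regulated products"),
    (date(2027, 8, 2), "Deadline for GPAI models placed on the market before August 2, 2025"),
]


def milestones_in_force(on: date) -> list[str]:
    """Labels of every milestone whose date is on or before `on`."""
    return [label for start, label in MILESTONES if start <= on]


def next_milestone(on: date) -> Optional[tuple[date, str]]:
    """The earliest milestone strictly after `on`, or None once all have passed."""
    upcoming = [m for m in MILESTONES if m[0] > on]
    return min(upcoming) if upcoming else None


today = date(2025, 9, 1)
for label in milestones_in_force(today):
    print("In force:", label)
print("Next up:", next_milestone(today))
```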

Why You Cannot Afford to Wait

With some of these dates still a couple of years out, it’s tempting to put this on the back burner. That would be a huge mistake.

Becoming fully compliant, especially for high-risk systems, is a heavy lift. It means running detailed risk assessments, overhauling how you handle data, creating enormous amounts of technical documentation, and building new processes for human oversight. These aren't things you can knock out in a few weeks. Starting now gives you the time to find the gaps, assign the right people, and weave compliance into your development cycle—instead of trying to bolt it on at the eleventh hour. Being proactive is the only way you'll meet every deadline with confidence.

Meeting Your Obligations for High-Risk AI Systems

When your AI system gets flagged as high-risk under the EU AI Act, the compliance journey kicks into high gear. This is where the law gets serious about protecting fundamental rights and safety, laying down a set of strict, non-negotiable obligations. For these systems, it’s not enough to just be on the market; you have to prove they are safe, reliable, and fair across their entire lifecycle.

These requirements aren't just legal hoops to jump through. They're operational mandates that will reshape how your development, data science, and compliance teams work together. Think of it like building a house in an earthquake zone—you can’t just use standard materials and cross your fingers. You need a reinforced foundation, detailed architectural plans, and a rigorous inspection process before anyone can move in. The same principle applies here.

Let's break down the Act's complex legal language into the practical, day-to-day tasks you'll need to master.

Unpacking the Core Requirements

For high-risk systems, the EU AI Act is built on several foundational pillars. These aren't suggestions; they are mandatory components that have to be woven into your system from the very beginning. Getting these right is absolutely essential.

Here’s a look at the key obligations you must meet:

- Robust Risk Management: You need a continuous risk management system to identify, analyze, and mitigate potential harms from the moment you start building to the day the AI is retired.

- Transparent Data Governance: The datasets you use for training, validation, and testing must be high-quality and relevant, and they must be examined for possible biases, with measures in place to detect, prevent, and mitigate them.

- Detailed Technical Documentation: You’ll need to create and maintain a comprehensive paper trail that proves your system meets every requirement, ready for authorities to inspect at a moment's notice.

- Meaningful Human Oversight: The system has to be designed so that a human can effectively step in to prevent or minimize risks.

- High Levels of Accuracy and Security: Your AI must perform reliably and be tough enough to withstand attempts to compromise its security or skew its performance.

Drop the ball on any one of these, and your entire compliance effort could be in jeopardy.

From Vague Concepts to Concrete Actions

The language in the EU AI Act can feel a bit abstract. What does "meaningful human oversight" actually look like in practice? It’s far more than just having someone watch a screen; it means building specific, functional controls into your AI.

For instance, it might be an interface that lets a human operator instantly override a decision made by an AI in a critical manufacturing process. It could also be as simple—yet crucial—as a big red "stop" button that can immediately halt the system if it starts behaving unexpectedly. These aren't add-ons; they are core design features.
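Here is a minimal sketch of what those controls can look like in code, assuming a simple decision function wrapped by a hypothetical `OverseenModel` class: a human can override any individual decision, a stop control refuses all further automated decisions, and every action lands in an audit log. It illustrates the design idea only; the class, its methods, and the toy decision rule are invented for this example.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class OverseenModel:
    """Hypothetical wrapper that routes every AI decision through human controls."""

    predict: Callable[[dict], str]  # the underlying AI decision function
    stopped: bool = False           # set to True by the "stop" control
    audit_log: list = field(default_factory=list)

    def stop(self, operator: str) -> None:
        """The 'big red button': refuse all further automated decisions."""
        self.stopped = True
        self.audit_log.append(("stop", operator))

    def decide(self, case: dict, override: Optional[str] = None, operator: str = "") -> str:
        """Return the AI's proposal unless a human operator overrides it."""
        if self.stopped:
            raise RuntimeError("System halted by a human operator.")
        proposal = self.predict(case)
        if override is not None:
            # The human decision wins; both it and the AI proposal are logged.
            self.audit_log.append(("override", operator, proposal, override))
            return override
        self.audit_log.append(("auto", proposal))
        return proposal


# Usage with a toy rule standing in for a real model.
model = OverseenModel(predict=lambda case: "reject" if case["defect_score"] > 0.8 else "accept")
print(model.decide({"defect_score": 0.9}))                                     # reject
print(model.decide({"defect_score": 0.9}, override="accept", operator="ana"))  # accept
model.stop(operator="ana")
```

Keeping the AI proposal next to the human decision in the log matters: it lets you show later how often operators intervened and why.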

Likewise, "transparent data governance" isn't just about using clean data. It means keeping a detailed log of where your data came from, how you curated it, and what steps you took to find and fix biases. This process is critical for ensuring your AI doesn't amplify existing societal inequalities, which is a huge concern for regulators.
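A provenance log can be as simple as one structured record per dataset. The sketch below assumes a hypothetical `DatasetRecord` type that captures source, collection period, curation steps, and bias checks, plus a SHA-256 fingerprint so that later edits to a record are easy to detect. The field names and example values are illustrative, not a schema defined by the Act.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


@dataclass
class DatasetRecord:
    """Hypothetical provenance entry for one training, validation, or test dataset."""
    name: str
    source: str     # where the data came from
    collected: str  # collection period or date
    curation_steps: list[str] = field(default_factory=list)
    bias_checks: dict[str, str] = field(default_factory=dict)  # check -> outcome/action

    def fingerprint(self) -> str:
        """Stable hash of the record, so later edits to the log are detectable."""
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()


# Invented example values, for illustration only.
record = DatasetRecord(
    name="loan_applications_v3",
    source="internal CRM export",
    collected="2019-2023",
    curation_steps=["removed duplicates", "dropped rows with missing income"],
    bias_checks={"approval rate by gender": "compared across groups; mitigation recorded"},
)
print(record.fingerprint())
```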

The EU AI Act demands that high-risk systems are not 'black boxes.' Providers must be able to explain how their systems work, the data they were trained on, and the measures in place to ensure human control, turning abstract principles into verifiable, operational realities.

The Role of Conformity Assessments

Before any high-risk AI system can be sold in the EU, it must pass a conformity assessment. This is essentially a formal audit to verify that your system ticks all the legal boxes. Think of it as getting a safety certification for an electrical appliance—it’s proof that the product has been properly tested and is safe to use.

You generally have two ways to get this done:

- Internal Control: In many cases, you can perform the assessment yourself by following the detailed criteria laid out in the Act. This means meticulously checking every requirement against your system and compiling all the technical documentation to prove it.

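- Third-Party Assessment: For some systems, you have no choice. This applies to certain biometric systems, such as remote biometric identification, when the provider has not fully applied the relevant harmonised standards, and to AI embedded in products whose own EU safety legislation already requires outside checks. In those cases, an independent organization known as a Notified Body has to conduct the assessment.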
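The choice between the two routes can be sketched as a small decision function. This is a deliberately simplified Python sketch under stated assumptions: the system is already classified as high-risk, and only two factors matter (whether it is a biometric system for which harmonised standards were not fully applied, and whether it sits in a product whose own EU legislation already calls for a Notified Body). Real cases turn on Article 43 of the Act and the relevant sectoral law.

```python
def conformity_route(
    is_biometric: bool,
    harmonised_standards_fully_applied: bool,
    sectoral_law_requires_notified_body: bool,
) -> str:
    """Simplified sketch of picking a conformity assessment route for a high-risk system."""
    if sectoral_law_requires_notified_body:
        # AI embedded in a regulated product follows that product's existing procedure.
        return "notified body (via the product's sectoral procedure)"
    if is_biometric and not harmonised_standards_fully_applied:
        return "notified body"
    # Internal control is available here; a biometric provider may still opt for a Notified Body.
    return "internal control"


print(conformity_route(is_biometric=True, harmonised_standards_fully_applied=False,
                       sectoral_law_requires_notified_body=False))  # notified body
```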

Whichever path you take, the goal is the same: a formal declaration that your AI system is compliant. But it doesn't stop there. You must also set up a post-market monitoring system to continuously collect data on the AI’s performance after it’s been deployed. This ongoing vigilance ensures it remains safe and effective over time and is a cornerstone of the Act's entire approach.
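Post-market monitoring comes down to collecting real-world performance data and acting on it. As one hypothetical example, the sketch below tracks rolling accuracy over the most recent confirmed outcomes and flags the system for review when it drops below a floor. The `AccuracyMonitor` class, the window size, and the threshold are assumptions you would set from your own risk assessment; the Act does not prescribe them.

```python
from collections import deque


class AccuracyMonitor:
    """Hypothetical post-market check: flag the system when rolling accuracy drops."""

    def __init__(self, window: int = 500, floor: float = 0.9):
        # True when a prediction was later confirmed correct; old outcomes fall off.
        self.outcomes = deque(maxlen=window)
        self.floor = floor

    def record(self, prediction, actual) -> None:
        self.outcomes.append(prediction == actual)

    def accuracy(self) -> float:
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else 1.0

    def needs_review(self) -> bool:
        # Only alert once the window is full, to avoid noise from tiny samples.
        return len(self.outcomes) == self.outcomes.maxlen and self.accuracy() < self.floor


monitor = AccuracyMonitor(window=4, floor=0.75)
for prediction, actual in [(1, 1), (1, 0), (0, 0), (1, 0)]:
    monitor.record(prediction, actual)
print(monitor.accuracy(), monitor.needs_review())  # 0.5 True
```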

This is where tools like ComplyAct AI come in, helping to auto-generate the extensive technical documentation needed for these assessments and get you ready for audit season.

The Real Cost of Getting Compliance Wrong

The stakes of the EU AI Act are incredibly high, and they're not just about ticking a few technical boxes. This regulation elevates compliance from a back-office task to a critical business priority. Ignoring these rules isn't just a simple mistake—it's a direct threat to your revenue, your reputation, and your future. The penalties are deliberately severe to make sure every organization takes this seriously from the very beginning.

We’re not talking about a small slap on the wrist here. The regulation lays out a tiered fine structure that can reach truly devastating levels, making proactive compliance an essential survival strategy.

Fines That Can Cripple a Business

Let’s be clear: the EU AI Act’s penalties are designed to hurt. They intentionally echo the massive financial hits we've seen under GDPR, with fines directly tied to how serious the violation is. The worst offenses, naturally, draw the biggest penalties.

For the most serious breaches, namely engaging in a prohibited AI practice, the fines are staggering. A company could be on the hook for up to €35 million or 7% of its total worldwide annual turnover for the preceding financial year, whichever is higher. That figure alone should tell you everything you need to know. Taking a gamble on non-compliance is a risk very few businesses can afford.

The EU AI Act frames compliance not as an administrative burden, but as a critical shield against catastrophic financial and reputational damage. The potential fines rival those of GDPR, making inaction an existential risk.

Even what might seem like smaller mistakes carry a heavy price. For instance, giving regulators incorrect or misleading information can trigger a fine of up to €7.5 million or 1% of global turnover. This layered approach ensures accountability everywhere, from the most serious violations down to procedural errors.

Understanding the Tiered Penalty System

Not all violations are created equal. The Act’s tiered penalty system links the fine directly to the offense, reserving the harshest consequences for actions that put people's fundamental rights and safety at risk. For SMEs, including startups, each fine is capped at whichever of the two amounts is lower.

Here’s a quick look at the fine tiers:

- Tier 1 (Highest Fines): Up to €35 million or 7% of global turnover. This is reserved for the most serious offense: using an AI practice that the Act prohibits outright.

- Tier 2 (Mid-Level Fines): Up to €15 million or 3% of global turnover. This covers other major obligations, like failing to carry out a proper conformity assessment on your AI.

- Tier 3 (Lowest Fines): Up to €7.5 million or 1% of global turnover. This tier applies when you provide incorrect, incomplete, or misleading information to the authorities.
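The "whichever is higher" rule is simple arithmetic, and it is worth running for your own turnover figure. This minimal sketch encodes the three caps in the Act's final text (€35 million or 7%, €15 million or 3%, and €7.5 million or 1%) and the rule that, for SMEs and startups, the lower of the two amounts applies instead. The tier keys are simplified labels invented for this example, and the result is only a legal maximum; actual fines are set case by case by the authorities.

```python
# Fine caps as (fixed amount in EUR, percent of worldwide annual turnover).
FINE_TIERS = {
    "prohibited_practice": (35_000_000, 7),
    "other_obligation": (15_000_000, 3),
    "incorrect_information": (7_500_000, 1),
}


def max_fine(tier: str, worldwide_turnover_eur: int, is_sme: bool = False) -> int:
    """Upper bound of the fine: the higher of the two caps, or the lower one for SMEs."""
    fixed_cap, percent = FINE_TIERS[tier]
    turnover_cap = worldwide_turnover_eur * percent // 100  # integer euros
    return min(fixed_cap, turnover_cap) if is_sme else max(fixed_cap, turnover_cap)


print(max_fine("prohibited_practice", 1_000_000_000))                 # 70000000
print(max_fine("prohibited_practice", 100_000_000))                   # 35000000
print(max_fine("incorrect_information", 20_000_000, is_sme=True))     # 200000
```

For a company with €1 billion in turnover, 7% (€70 million) exceeds the €35 million figure, so the percentage sets the ceiling; for a smaller firm, the fixed amount dominates unless it qualifies as an SME.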

These penalties hammer home the need for a solid approach to information technology risk management, where identifying and neutralizing regulatory threats is central to your strategy. For any company trying to find its footing in this new environment, the only smart move is to see compliance as an investment, not an expense. Because the real cost isn't in getting it right; it's in the permanent damage of getting it wrong.

Building Your Practical Path to Compliance

Knowing the rules of the EU AI Act is one thing. Actually putting them into practice is a whole different ball game. This is where theory hits the road, and the real work of building a compliance program begins. It can feel like a massive undertaking, but you can break it down into a straightforward, step-by-step playbook.

By tackling these tasks one by one, you’ll build a solid, proactive compliance program that has your organization ready for every deadline the EU AI Act throws at you.

Start with a Complete AI Inventory

First things first: you can't govern what you can't see. Your initial move must be to create a complete inventory of every single AI system your organization develops, deploys, or even just uses. And I mean everything.

This goes way beyond your main products. Think about the AI tucked away in third-party software you license, the models your marketing team uses for customer analytics, or the AI features in your HR platform that screen resumes. Every single one needs to be on the list.

For each system, you'll want to log what it does, the data it feeds on, and who supplies it. This inventory is the foundational map for your entire compliance journey.
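Even a spreadsheet works for this, but a typed record keeps the inventory consistent. The sketch below assumes a hypothetical `AISystemEntry` with the fields suggested above (purpose, data inputs, supplier) plus an accountable owner and a risk tier that stays empty until classification. The example entries are invented for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AISystemEntry:
    """One row of a hypothetical AI inventory."""
    name: str
    purpose: str                     # what the system does
    data_inputs: list[str]           # what data it feeds on
    supplier: str                    # "in-house" or the third-party vendor
    owner: str                       # internal team accountable for it
    risk_tier: Optional[str] = None  # filled in during classification


inventory = [
    AISystemEntry("Resume screener", "ranks job applicants", ["CVs"], "HR platform vendor", "HR"),
    AISystemEntry("Churn model", "predicts customer churn", ["CRM data"], "in-house", "Marketing"),
    AISystemEntry("Spam filter", "filters inbound email", ["emails"], "email provider", "IT", "minimal"),
]


def unclassified(entries: list[AISystemEntry]) -> list[str]:
    """Names of systems that still need a risk classification."""
    return [e.name for e in entries if e.risk_tier is None]


print(unclassified(inventory))  # ['Resume screener', 'Churn model']
```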

Classify Each System within the Risk Framework

With your list in hand, the next critical task is to slot each system into the EU AI Act’s risk pyramid. This is probably the most important decision you'll make, as it dictates exactly which rules you need to follow.

Is a system prohibited, high-risk, limited-risk, or minimal? Don't guess. Be thorough and objective here, documenting precisely why you've classified a system a certain way by referencing the Act's specific criteria. A mistake here could mean wasting resources on unnecessary work or—much worse—facing massive fines for non-compliance.

Perform a Gap Analysis for High-Risk Systems

For any system you've flagged as high-risk, it's time for a gap analysis. This is simply a methodical process of comparing what you're doing now against the tough requirements laid out in the regulation.

The point of a gap analysis is to get an honest, clear-eyed view of where you are today versus where the EU AI Act demands you be. It shines a spotlight on your compliance weaknesses and becomes the blueprint for fixing them.

Your analysis needs to dig into several key areas:

- Risk Management: Do you have a process for continuously assessing and managing risks?

- Data Governance: Are your training datasets well-documented, relevant, and free from inappropriate biases?

- Human Oversight: Are there clear, effective ways for a human to step in and take control?

- Technical Documentation: What documents do you have, and what crucial pieces are missing?
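The gap analysis itself can start as a simple checklist. This hypothetical sketch keys one yes/no answer to each of the four areas above and treats any unanswered area as a gap, so nothing slips through by omission. The requirement descriptions are paraphrases for illustration, not the Act's wording.

```python
# Hypothetical gap-analysis checklist for one high-risk system.
REQUIREMENTS = {
    "Risk Management": "continuous risk assessment process in place",
    "Data Governance": "training datasets documented and checked for bias",
    "Human Oversight": "operators can intervene and stop the system",
    "Technical Documentation": "documentation complete and current",
}


def find_gaps(status: dict[str, bool]) -> list[str]:
    """Return the requirement areas not yet met (missing answers count as gaps)."""
    return [area for area in REQUIREMENTS if not status.get(area, False)]


current_status = {
    "Risk Management": True,
    "Data Governance": False,
    "Human Oversight": True,
    # "Technical Documentation" has not been assessed yet.
}
for area in find_gaps(current_status):
    print(f"GAP - {area}: {REQUIREMENTS[area]}")
```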

Develop a Robust Governance Structure

Compliance isn’t a solo mission. It demands a strong internal governance structure where everyone knows their role. Who’s the ultimate owner of EU AI Act compliance? Who is tasked with managing the technical documentation, running the risk assessments, or handling incident reports?

Setting up this framework makes compliance a core part of how you operate, not just a frantic, one-time project. It’s also fundamental for implementing effective risk control software and building processes that keep your program humming along. A good structure gives people the authority and resources they need to enforce policies across the entire company.

Finally, you need to pull together all the required technical documentation. For high-risk AI, this is a heavy lift, but it’s absolutely non-negotiable. Following these steps gives you a clear, manageable path from simply understanding the law to confidently demonstrating your compliance.

Your EU AI Act Questions, Answered

As the EU AI Act starts to become a reality, it’s natural to have questions about what this all means for your business. Let's break down some of the most common ones we're hearing.

Does the EU AI Act apply to my business if we’re not based in Europe?

Yes, it almost certainly does. The EU AI Act was designed with a global reach, much like the GDPR.

The key question isn’t where your company is located, but where your AI is used. If you place an AI system on the EU market, or if its output is used within the EU, you need to comply. This makes it a critical piece of legislation for any tech company with a global footprint.

The Act’s global reach means compliance isn't just a European issue—it's the price of admission to one of the world's largest single markets. Non-EU companies will have to play by the rules or risk being locked out.

Are small businesses and startups exempt?

No, there’s no blanket exemption for small and medium-sized enterprises (SMEs). However, the regulators understand that smaller companies have fewer resources.

To help, the Act establishes AI regulatory sandboxes. Think of these as controlled testing grounds where startups and SMEs can experiment with their AI systems under the guidance of authorities, working out the compliance kinks before a full public launch. The idea is to lower the barrier to entry without sacrificing safety.

What’s the deal with open-source AI models?

For the most part, the EU AI Act doesn't apply to AI released under free and open-source licences, and research and development carried out before a system is placed on the market is excluded altogether. The goal here is to avoid stifling the innovation and collaboration that makes the open-source community so powerful.

But there are important exceptions. If an open-source AI system is placed on the market as part of a high-risk system, is used for a prohibited purpose, or falls under the transparency rules for limited-risk AI such as chatbots, it falls under the Act's rules.

Providers of general-purpose AI (GPAI) models also have some transparency homework to do, even if their model is open-source. This includes publishing a sufficiently detailed summary of the content used to train the model and putting in place a policy to comply with EU copyright law. Open-source GPAI models that pose systemic risk get no exemption at all.

Ready to make sure you’re prepared for every deadline and requirement? ComplyAct AI can auto-classify your AI systems, generate audit-ready technical documents, and get you compliant in under 30 minutes. Don't wait—see how we make it simple to avoid the risks of non-compliance.