EU AI Act High-Risk Systems Explained

When we talk about high-risk AI under the EU AI Act, it’s not about the technology itself being inherently dangerous. It's all about the context—how and where it’s used. This category is reserved for AI systems where a malfunction or a bad decision could seriously impact someone's health, safety, or fundamental rights.

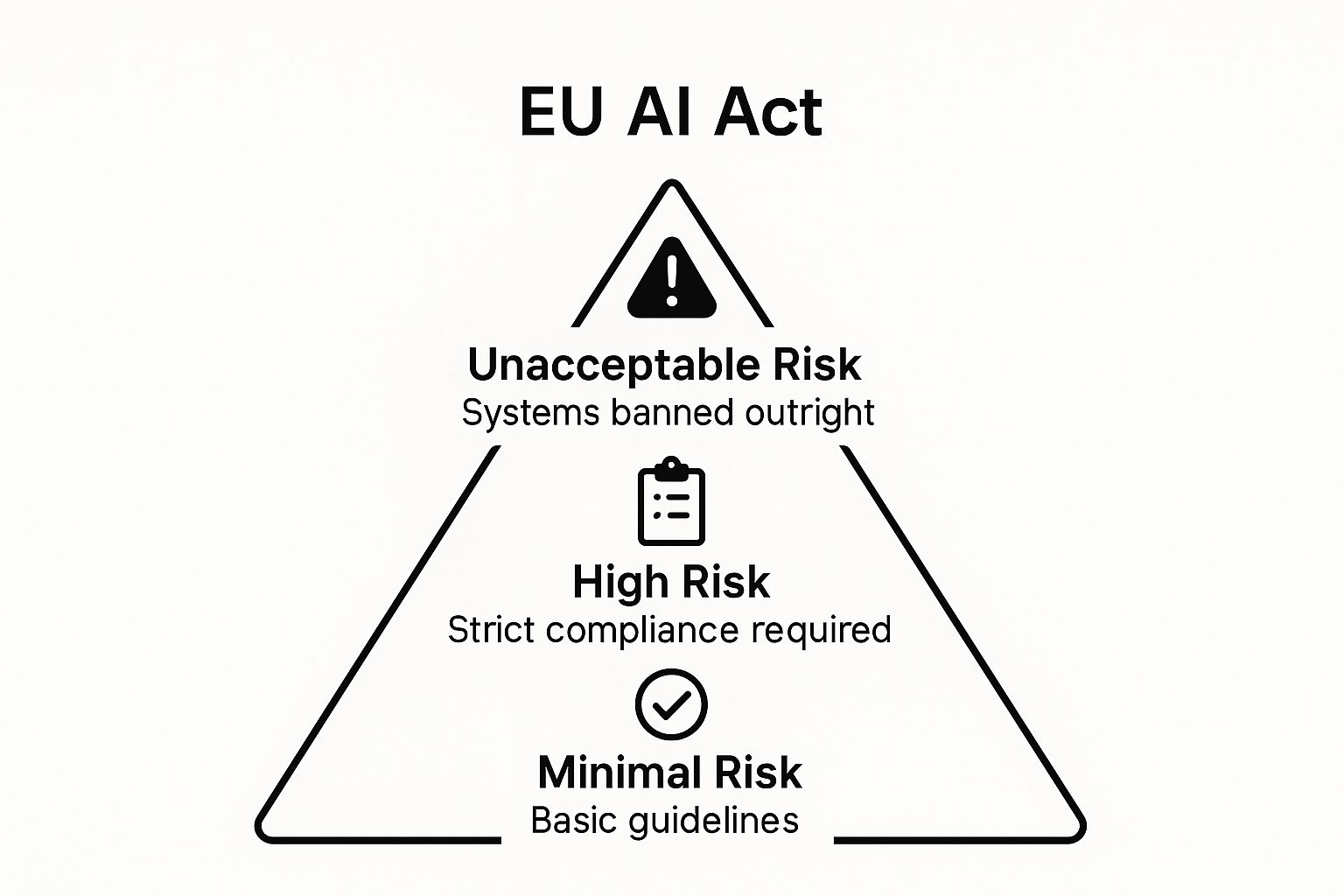

Understanding The EU AI Act’s Risk-Based Framework

The EU AI Act isn't a one-size-fits-all sledgehammer for regulating artificial intelligence. Think of it more like modern traffic laws. We don't ban cars, but we have different rules for different situations to keep everyone safe.

Certain actions, like driving the wrong way down a motorway, are flat-out banned because the risk is unacceptable. This is the very top of the EU AI Act’s risk pyramid.

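At the very bottom, the massive base of the pyramid, you have minimal-risk systems like the spam filter in your email or the AI in a video game. These are like driving around in an empty car park: the Act places no specific obligations on them, although companies can voluntarily adopt codes of conduct. Just above them sit limited-risk systems such as chatbots, which mainly carry transparency duties.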

The most important layer for businesses to understand sits just below the top of the pyramid: the high-risk category. This is where the regulatory spotlight shines brightest. Consider these systems the commercial trucks and public buses of the AI world. They're incredibly useful, but because of the potential harm they could cause, they need a special license, strict safety checks, and regular inspections. This is the tier that demands your full attention.

The Four Tiers of AI Risk

The EU’s Artificial Intelligence Act sorts AI systems into four clear risk buckets: unacceptable, high, limited, and minimal. Picture them as a pyramid, with the strictest rules at the narrow top and the lightest at the broad base.

Systems with unacceptable risk are banned entirely. But it's the crucial high-risk category where the toughest compliance work is concentrated.

The whole idea behind this framework is proportionality. The rules get stricter as the stakes get higher—especially when it comes to people's livelihoods, safety, and basic rights.

To make this even clearer, here's a quick summary of the different risk tiers.

The EU AI Act Risk Tiers at a Glance

| Risk Tier | Description & Examples | Regulatory Consequence |
|---|---|---|
| Unacceptable | AI systems that manipulate human behavior, exploit vulnerabilities, or enable social scoring. | Outright Ban. These systems are considered a clear threat to fundamental rights and are prohibited. |
| High | AI used in critical infrastructure, medical devices, recruitment, law enforcement, or credit scoring. | Strict Obligations. Requires conformity assessments, risk management, human oversight, and registration. |
| Limited | AI systems that interact with humans, like chatbots or deepfakes. | Transparency Rules. Users must be informed that they are interacting with an AI or viewing AI-generated content. |
| Minimal | The vast majority of AI applications, such as AI-powered spam filters or video games. | No Specific Obligations. Companies can voluntarily adopt codes of conduct, but it's not required. |

This tiered approach ensures that the most rigorous oversight is applied exactly where it's needed most, without stifling innovation in lower-risk areas.

Why High-Risk Demands Your Focus

High-risk AI systems, such as those used in critical infrastructure, healthcare, or law enforcement, face a mountain of rules, from mandatory risk assessments to robust human oversight. They must meet detailed requirements and pass a conformity assessment before they can be placed on the market, and those listed in Annex III must also be registered in an EU-wide database.

The European Commission estimates this high-risk category will capture around 5% to 15% of all AI applications.

Getting your head around this framework is the absolute first step toward compliance. The Act isn't trying to stop progress; it's about making sure it happens responsibly. By figuring out where your AI systems land on this pyramid, you can prepare for the specific rules that apply to you, protecting safety and fundamental rights without getting bogged down by rules meant for much riskier tools. To get the bigger picture, you can read our complete overview of the EU AI Act.

What Qualifies as a High-Risk AI System

When the EU AI Act talks about "high-risk," it isn't judging how complex or sophisticated your algorithm is. The real focus is on the AI's intended purpose—what it’s actually doing in the real world and what could go wrong. The technology itself isn't on trial; its application is.

This classification boils down to two main pathways. Nailing down which path, if any, your system falls under is the most critical first step you can take. Get this right, and you'll save yourself a world of headaches and wasted resources later on.

The first route is fairly clear-cut. If your AI system is a safety component of a product already regulated by other EU laws, or is itself such a product, and that product must undergo a third-party conformity assessment, the AI system is classed as high-risk.

In short: if a product already needs an independent safety check to get to market, and you embed an AI to handle a safety function, that AI gets the same high-risk label.

Pathway 1: Safety Components in Regulated Products

Think about all the products we use daily that are designed with safety as their number one job. Many of these fall under existing EU legislation, which the AI Act references in its Annex I (previously Annex II in earlier drafts).

Here are a few concrete examples of where this applies:

- Medical Devices: An AI that analyzes CT scans to help a radiologist spot tumors.

- Automobiles: The AI system that triggers a car’s automatic emergency braking.

- Elevators: An AI that monitors and manages an elevator’s safety mechanisms to prevent accidents.

- Toys: An interactive toy whose AI component has to meet strict child safety standards.

In each of these cases, the AI is essential for the product to operate safely. If it fails, someone's health, or even their life, could be on the line. Imagine an AI-powered sensor in a smart oven designed to prevent fires. The oven may fall under EU product legislation such as the Radio Equipment Directive, but its AI safety system is high-risk only if that legislation requires the oven to undergo a third-party conformity assessment. If the manufacturer can self-certify, this pathway does not apply.
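The two conditions behind this pathway can be written as a simple check. This is a minimal sketch, not a legal test: the `Product` fields and the `is_high_risk_pathway_1` function are hypothetical names invented for illustration, and the inputs (is the law listed in Annex I, does it require a third-party assessment) still have to come from a careful reading of the sector legislation.

```python
from dataclasses import dataclass


@dataclass
class Product:
    """Hypothetical record describing a product with an embedded AI system."""
    name: str
    ai_is_safety_component: bool        # AI performs a safety function, or is itself the product
    covered_by_annex_i_law: bool        # product falls under EU legislation listed in Annex I
    needs_third_party_assessment: bool  # that legislation requires an independent (notified body) check


def is_high_risk_pathway_1(product: Product) -> bool:
    """Both conditions must hold: a safety role under Annex I legislation
    AND a mandatory third-party conformity assessment under that legislation."""
    return (
        product.ai_is_safety_component
        and product.covered_by_annex_i_law
        and product.needs_third_party_assessment
    )


# Illustrative inputs only; real answers come from the sector legislation.
ct_scan_ai = Product("CT tumor detection", True, True, True)
self_certified = Product("Hypothetical self-certified appliance", True, True, False)

print(is_high_risk_pathway_1(ct_scan_ai))      # True
print(is_high_risk_pathway_1(self_certified))  # False
```

The second product shows why the third-party condition matters: a safety role alone is not enough to trigger this pathway.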

Pathway 2: Specific High-Stakes Applications

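The second pathway is less about the physical product and all about the context of its use. Annex III of the Act lists specific high-stakes situations where AI could drastically affect people's lives and fundamental rights. If your AI's intended purpose is on that list, it is presumed high-risk. Article 6(3) carves out a narrow exception for systems that don't pose a significant risk of harm, for example because they only perform a narrow procedural or preparatory task. However, any Annex III system that profiles individuals is always high-risk, and providers relying on the exception must document their assessment.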

This list isn't set in stone—it can be updated. For now, it targets critical areas where a biased or faulty AI could do some serious damage.

The guiding principle is simple: when AI is making pivotal decisions about people's opportunities, safety, or access to essential services, it demands the highest level of oversight.

The categories spelled out in Annex III are broad, covering areas where fairness and accuracy are absolutely non-negotiable.

Key High-Risk Areas Listed in Annex III:

- Biometric Identification: Systems for real-time and post-remote biometric identification.

- Critical Infrastructure Management: AI that controls the flow of water, gas, heat, or electricity.

- Education and Vocational Training: Systems that decide who gets into a program or how exams are graded.

- Employment and HR: AI tools that screen résumés, shortlist job candidates, or influence promotion decisions.

- Access to Essential Services: Algorithms that generate credit scores or determine who qualifies for public benefits.

- Law Enforcement: AI used for individual risk assessments or as so-called "lie detectors."

- Migration and Border Control: Systems that assess the security risk of people entering the EU.

- Administration of Justice: AI used to help judges research or interpret the law.

Ultimately, context is everything. An AI that recommends movies on a streaming service is minimal risk. But if you take that exact same algorithm and use it to recommend prison sentences, it lands squarely in the high-risk zone. You can learn more about how to classify the different AI systems in our detailed guide. This fundamental distinction is the very foundation of the EU AI Act.
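Because this pathway turns on intended purpose, a first-pass screen can key off the Annex III area alone. The sketch below assumes a hypothetical `classify_pathway_2` helper and collapses the Article 6(3) exception into a single `meets_6_3_condition` flag; in practice each condition needs its own documented reasoning. It reflects two points from the Act: an Annex III system that profiles people stays high-risk, and one that only performs a narrow or preparatory task may fall outside the category.

```python
from enum import Enum, auto
from typing import Optional


class AnnexIIIArea(Enum):
    """Areas listed in Annex III (labels shortened for this sketch)."""
    BIOMETRICS = auto()
    CRITICAL_INFRASTRUCTURE = auto()
    EDUCATION = auto()
    EMPLOYMENT = auto()
    ESSENTIAL_SERVICES = auto()
    LAW_ENFORCEMENT = auto()
    MIGRATION_BORDER = auto()
    JUSTICE = auto()


def classify_pathway_2(area: Optional[AnnexIIIArea],
                       profiles_people: bool = False,
                       meets_6_3_condition: bool = False) -> str:
    """Rough first-pass label for Pathway 2; not a substitute for legal review."""
    if area is None:
        return "not high-risk under Annex III"
    if profiles_people:
        # Profiling natural persons keeps an Annex III system high-risk.
        return "high-risk"
    if meets_6_3_condition:
        # E.g. a narrow procedural or preparatory task that doesn't
        # materially influence the outcome; the assessment must be documented.
        return "possibly exempt under Article 6(3): document and review"
    return "high-risk"


# Same algorithm, different intended purpose.
print(classify_pathway_2(None))                  # movie recommendations
print(classify_pathway_2(AnnexIIIArea.JUSTICE))  # sentencing recommendations
```

The two calls mirror the example above: the same model falls outside Annex III as a movie recommender but lands in the high-risk zone once it feeds sentencing decisions.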

Your Core Obligations for High-Risk AI Systems

So, your AI system falls into the EU AI Act high-risk category. What now? This is where a whole new set of rules kicks in, and they're not just friendly suggestions. These are mandatory obligations designed to weave safety, fairness, and accountability into the very fabric of your technology.

Think of these requirements as the essential blueprints for building a trustworthy AI. It’s about moving beyond legal jargon and translating each rule into concrete, practical steps. This is your playbook for building compliance into your AI's DNA from day one.

Building a Robust Risk Management System

First things first: you need a continuous risk management system. This isn't a one-and-done audit. It's a living process that has to run for the entire lifecycle of your AI.

Imagine you're building a bridge. You don't just check the plans once and call it a day. You inspect the materials, stress-test the structure, and constantly monitor it for wear and tear after it opens to traffic. The same logic applies here. You need to identify any foreseeable risks, figure out their potential impact, and have solid measures in place to keep them in check.

This whole process has to be meticulously documented and revisited regularly, especially when the system gets an update or new real-world data uncovers a vulnerability you hadn't planned for.
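One way to keep risk management a living process is a register that is re-checked whenever the system changes. This is a sketch under stated assumptions: the Act requires identifying, estimating, and mitigating risks, but it does not prescribe likelihood-times-severity scoring, the 1-to-5 scales, or the acceptance threshold used here. Those are common risk-management conventions picked for illustration, and all class names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Risk:
    description: str
    likelihood: int  # hypothetical 1 (rare) to 5 (almost certain) scale, after mitigation
    severity: int    # hypothetical 1 (negligible) to 5 (critical) scale
    mitigation: str = ""

    @property
    def score(self) -> int:
        return self.likelihood * self.severity


@dataclass
class RiskRegister:
    system_version: str                # version the last review covered
    acceptable_score: int = 6          # hypothetical residual-risk threshold
    risks: List[Risk] = field(default_factory=list)

    def open_risks(self) -> List[Risk]:
        """Risks whose residual score is still above the accepted level."""
        return [r for r in self.risks if r.score > self.acceptable_score]

    def needs_review(self, deployed_version: str) -> bool:
        """A new release, or any unresolved risk, should trigger another review."""
        return deployed_version != self.system_version or bool(self.open_risks())


register = RiskRegister("1.0", risks=[
    Risk("Misses tumors on low-contrast scans", likelihood=2, severity=5,
         mitigation="Radiologist reviews every negative result"),
    Risk("Slow response during peak hours", likelihood=2, severity=2),
])
print([r.description for r in register.open_risks()])  # ['Misses tumors on low-contrast scans']
print(register.needs_review("1.1"))                      # True: new version deployed
```

Tying the review trigger to the deployed version is one simple way to make sure an update never ships without the register being revisited.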

Ensuring High-Quality and Unbiased Data

An AI system is only as good as the data it learns from. That's why the AI Act puts a massive emphasis on data governance, demanding that any datasets used for training, validating, and testing are top-notch.

So, what does that actually mean?

- Relevance and Representation: Your data has to be right for the job and diverse enough to avoid discriminatory results. A facial recognition tool trained mostly on one demographic is a recipe for a system that’s unfair and inaccurate for everyone else.

- Error-Free and Complete: Your data needs to be clean. The Act asks for datasets that are, to the best extent possible, free of errors and complete, so you must have a process to spot and handle biases, gaps, and other inaccuracies.

- Appropriate Data Practices: You need to be able to show your work. That means documenting where your datasets came from, what they cover, and their main characteristics to prove you’ve done your due diligence.

This is about more than just cleaning a spreadsheet. It’s about thoughtfully designing a data pipeline that promotes fairness and accuracy from the very beginning.

The AI Act doesn't see biased data as an unfortunate side effect; it sees it as a critical design flaw. Your data governance strategy is your first and best defense against building a system that accidentally hard-codes societal inequality.
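A simple starting point for the relevance-and-representation check is to measure how each group is represented in a training set. The sketch below is illustrative only: `underrepresented_groups` is a hypothetical helper, and the 10% `min_share` threshold is an assumption, since the Act sets no numeric target. Which groups matter depends on the system's intended purpose.

```python
from collections import Counter
from typing import Iterable, List


def underrepresented_groups(group_labels: Iterable[str],
                            expected_groups: Iterable[str] = (),
                            min_share: float = 0.10) -> List[str]:
    """Return groups whose share of the dataset falls below min_share.

    min_share is a hypothetical threshold; the Act sets no numeric target.
    Groups listed in expected_groups but absent from the data are flagged too.
    """
    counts = Counter(group_labels)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("dataset is empty")
    groups = set(counts) | set(expected_groups)
    # Counter returns 0 for groups that never appear, so they get a 0.0 share.
    return sorted(g for g in groups if counts[g] / total < min_share)


labels = ["group_a"] * 80 + ["group_b"] * 15 + ["group_c"] * 5
print(underrepresented_groups(labels, expected_groups=["group_d"]))
# ['group_c', 'group_d']
```

Passing `expected_groups` matters: a group missing from the data entirely never shows up in the counts, so it would otherwise go unnoticed.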

Creating Detailed Technical Documentation

You have to be able to explain exactly how your AI works. The Act mandates that you create and maintain detailed technical documentation that proves your system meets all the high-risk requirements.

Think of this documentation as the full set of architectural plans and engineering reports for a skyscraper. It has to be clear enough for national authorities to pop the hood and audit your system to verify its safety and compliance. It should cover everything from the system's capabilities and limitations to its algorithms, data sources, testing procedures, and the risk management steps you've taken.

For many teams, this will be one of the most demanding tasks. The goal is simple but challenging: get rid of the "black box" problem and make your AI’s inner workings transparent and accountable to regulators.

Guaranteeing Meaningful Human Oversight

Finally, every EU AI Act high-risk system has to be designed to allow for effective human oversight. This is way more than just having a person in the room. It means building real, functional controls that let a human being step in and take charge when needed.

This oversight isn't a single feature but a philosophy that can take a few forms:

- The Power to Intervene: A person must be able to jump in and override a decision made by the AI.

- The Power to Disregard: Users need the ability to simply ignore or reverse an output from the system if it doesn't make sense.

- The "Stop Button": Most importantly, there has to be a big red button—a way to immediately and safely shut the system down if it starts acting in unintended or dangerous ways.

For example, an AI used to screen résumés can't be the final judge. A human recruiter must be able to review the AI's shortlist, question its logic, and overrule its rejections. This ensures a person, not a program, is the ultimate gatekeeper to opportunity.
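The three oversight powers described above can be sketched as a thin wrapper around a model. This is a hypothetical design, not an API from the Act or any library: `HumanOversightWrapper`, `screen_cv`, and `recruiter` are invented names. The model only proposes; a human reviewer returns the final decision, every step is logged, and `stop()` halts the system.

```python
from typing import Any, Callable, List, Tuple


class SystemHaltedError(RuntimeError):
    """Raised when someone tries to use the system after it was stopped."""


class HumanOversightWrapper:
    """Hypothetical wrapper: the model only proposes, a human makes the final call."""

    def __init__(self, model: Callable[[Any], Any]):
        self.model = model
        self.halted = False
        self.audit_log: List[Tuple[Any, Any, Any]] = []  # (case, proposal, final decision)

    def stop(self) -> None:
        """The 'stop button': no further proposals are produced after this."""
        self.halted = True

    def decide(self, case: Any, reviewer: Callable[[Any, Any], Any]) -> Any:
        if self.halted:
            raise SystemHaltedError("system was stopped by a human operator")
        proposal = self.model(case)
        # The reviewer sees the proposal and returns the final decision:
        # accepting it, overriding it, or disregarding it entirely.
        final = reviewer(case, proposal)
        self.audit_log.append((case, proposal, final))
        return final


def screen_cv(cv):  # toy model: rejects anyone with under two years' experience
    return "shortlist" if cv["years_experience"] >= 2 else "reject"


def recruiter(cv, proposal):  # a recruiter overrules rejections of strong portfolios
    return "shortlist" if cv["strong_portfolio"] else proposal


screener = HumanOversightWrapper(screen_cv)
print(screener.decide({"years_experience": 1, "strong_portfolio": True}, recruiter))  # shortlist
screener.stop()
```

Logging both the proposal and the final decision makes every override visible later, which is exactly the kind of evidence an auditor would ask for.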

Real-World Scenarios of High-Risk AI in Action

The theory behind the EU AI Act high-risk category is important, but it's in the real world where the rules truly come to life. These aren't just abstract concepts for a far-off future; they're about systems already shaping our daily lives, often in ways we never see.

Let's ground this discussion in reality. By looking at a few concrete examples, you can start to see how the Act’s criteria apply in practice. It's the best way to spot similar high-risk systems in your own operations and understand why getting compliance right is so critical.

AI in Healthcare Deciding Patient Pathways

Picture an AI tool used in a hectic hospital emergency room. It's designed to analyze X-rays and MRIs, quickly flagging signs of conditions like cancer or neurological damage. A doctor then uses that output to decide on a patient's immediate treatment.

This is a textbook high-risk AI system. Why? The stakes couldn't be higher. A glitch, a biased algorithm, or a simple misinterpretation could delay a life-saving diagnosis or point to the wrong treatment, putting a person's health in direct jeopardy. This is exactly the kind of physical harm the Act is designed to prevent.

High-risk applications are especially common in sensitive fields like healthcare and finance. Think about patient triage systems, credit scoring models, and recruitment software: they all have the power to fundamentally alter someone's life. The Act's requirement to register Annex III systems like these in an EU database is all about pulling them out of the shadows.

If you're curious about your own tools, the EU AI Act Compliance Checker is a great starting point for an initial assessment.

Finance Algorithms Determining Access to Credit

Now, let's step into a bank. A complex AI algorithm sifts through thousands of data points to produce credit scores, which in turn decide who gets a mortgage, a car loan, or even just a basic credit card.

This is another clear-cut high-risk application. The system's decision has an immediate and profound impact on a person's financial life and their ability to achieve major life goals.

If the algorithm is flawed or trained on biased historical data, it could unfairly deny opportunities to entire demographic groups, perpetuating systemic inequalities and infringing on fundamental rights.

This is precisely what the EU AI Act targets: situations where an AI's judgment can either open or slam shut a critical door in someone's life.

HR Software Screening Job Applicants

Finally, think about the hiring process at a large corporation. An AI tool automatically scans thousands of resumes, looking for specific keywords, education, and past experience to create a shortlist for human recruiters.

This system is high-risk because it's a gatekeeper to employment. Its automated decisions directly affect a person's career and livelihood.

What if the AI was trained on the company's hiring data from the last decade? It might inadvertently learn to prefer candidates from specific backgrounds or universities, systematically filtering out equally qualified people without a human ever seeing their resume. The Act’s tough rules on data quality, transparency, and human oversight exist to stop these scenarios, making sure AI is a fair assistant, not an automated barrier.

Ultimately, these examples show that the EU AI Act high-risk label isn't just about the technology itself—it's all about context and consequence.

Navigating the Compliance and Enforcement Timeline

https://www.youtube.com/embed/tvSE7UjAuNw

The EU AI Act isn't a switch that gets flipped overnight. Instead, it’s a phased rollout, with different rules clicking into place over the next few years. For any organization working with EU AI Act high-risk systems, getting a handle on this staggered timeline is absolutely critical.

Think of it as a strategic roadmap. Knowing what's coming and when allows you to prioritize your compliance efforts instead of scrambling at the last minute. The clock is already ticking, and some of the most critical provisions are set to activate sooner than many realize.

Waiting until the final deadline is a dangerous game. The smart move is to use this period to get your house in order. That means starting now with the basics: taking stock of all your AI systems and running initial risk assessments. This isn't just about dodging fines; it's about building a solid, sustainable compliance framework from the ground up.

Key Deadlines and What They Mean for You

The enforcement dates are tiered, meaning different parts of the Act come into force at different times. The Act entered into force on 1 August 2024, and the prohibitions on banned AI practices were among the first rules to apply, from 2 February 2025.

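By 2 August 2026, most of the legislation will apply. This includes the heavy-duty requirements for the high-risk systems listed in Annex III, which must be fully compliant by that date. High-risk AI embedded in products covered by Annex I legislation, such as medical devices, has until 2 August 2027.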

For developers and providers, this timeline creates a clear window to get systems aligned. It means diving deep into the conformity assessment process, making sure your technical documentation is impeccable, and proving that your human oversight mechanisms are not just present but genuinely effective.

Regulators are sending a clear signal: compliance isn't a last-minute sprint. It's a marathon that starts today with a thorough audit and a concrete action plan.

The penalties for falling behind are designed to be a powerful motivator.

EU AI Act Enforcement Timeline Highlights

The journey to full compliance is broken down into several key milestones. This timeline provides a simplified overview of when major provisions of the Act will start applying, helping you map out your internal deadlines and allocate resources effectively.

| Compliance Deadline | Provisions Taking Effect | Action Required by Organizations |
|---|---|---|
| 2 February 2025 | Rules on prohibited AI practices apply. | Immediately cease development and use of any AI systems falling under the "unacceptable risk" category. |
| 2 August 2025 | Obligations for general-purpose AI (GPAI) models begin to apply, with additional duties for models with systemic risk. | GPAI providers must meet documentation and transparency duties; providers of models with systemic risk must also implement model evaluation, risk assessment, and cybersecurity measures. |
| 2 August 2026 | Most remaining provisions apply, including the rules for high-risk systems listed in Annex III. | Ensure these high-risk systems have completed conformity assessments, established risk management, and have full technical documentation. |
| 2 August 2027 | The AI Act applies to high-risk systems that are safety components of regulated products covered by Annex I (e.g., medical devices). | Align AI components with both the AI Act and existing product safety regulations (like MDR), ensuring seamless compliance. |

This timeline highlights the staggered nature of the rollout. While the bulk of the high-risk obligations lands in August 2026 (August 2027 for AI in Annex I products), the groundwork needs to be laid well in advance to meet those deadlines without a crisis.
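For internal planning, the milestones can also be encoded so that checklists and dashboards show what applies on a given day. The dates below are the application dates set in Article 113 of the Act (Regulation (EU) 2024/1689) as adopted; later amendments could move them, and the labels are simplified summaries. `provisions_in_force` and `next_milestone` are hypothetical helper names.

```python
from datetime import date
from typing import List, Optional, Tuple

# Application dates as set in Article 113 of Regulation (EU) 2024/1689.
MILESTONES: List[Tuple[date, str]] = [
    (date(2025, 2, 2), "Prohibited AI practices"),
    (date(2025, 8, 2), "General-purpose AI model obligations"),
    (date(2026, 8, 2), "Most remaining provisions, incl. Annex III high-risk systems"),
    (date(2027, 8, 2), "High-risk AI in Annex I regulated products (Article 6(1))"),
]


def provisions_in_force(on: date) -> List[str]:
    """List the milestones whose application date has been reached on a given day."""
    return [label for start, label in MILESTONES if on >= start]


def next_milestone(on: date) -> Optional[Tuple[date, str]]:
    """Return the first upcoming (date, label) pair, or None if all apply already."""
    upcoming = [m for m in MILESTONES if m[0] > on]
    return upcoming[0] if upcoming else None


print(provisions_in_force(date(2025, 9, 1)))
# ['Prohibited AI practices', 'General-purpose AI model obligations']
```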

The Steep Cost of Non-Compliance

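Let's be blunt: ignoring the Act will be incredibly expensive. The steepest fines, reserved for prohibited AI practices, reach €35 million or 7% of a company’s total worldwide annual turnover, whichever is higher. Breaching the obligations for high-risk systems can cost up to €15 million or 3%, and supplying incorrect or misleading information to authorities up to €7.5 million or 1%. For SMEs and start-ups, the lower of the two amounts applies. These are not slaps on the wrist; they are penalties that could seriously threaten a company's bottom line.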

The EU AI Act high-risk classification also covers AI used in products already governed by strict EU safety laws, such as medical devices or critical car safety components. These systems must already comply with rules like the Medical Device Regulation (MDR), and failing to meet the AI Act's additional requirements on top of that can still trigger substantial fines. You can learn more about the EU's detailed approach to high-risk AI systems in healthcare and life sciences.
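The fine arithmetic is easy to get wrong, so here it is as a short sketch. It encodes the three caps in Article 99 and the rule that SMEs and start-ups face the lower of the two amounts. It computes only the legal maximum, since authorities set actual fines case by case; `FINE_CAPS` and `max_fine_eur` are hypothetical names.

```python
# Maximum fines under Article 99: (fixed cap in EUR, percentage of worldwide annual turnover)
FINE_CAPS = {
    "prohibited_practice": (35_000_000, 7),
    "other_obligation": (15_000_000, 3),     # covers breaches of the high-risk requirements
    "incorrect_information": (7_500_000, 1),
}


def max_fine_eur(tier: str, worldwide_turnover_eur: int, is_sme: bool = False) -> int:
    """Upper bound of a fine; actual amounts are set case by case by authorities."""
    fixed_cap, percent = FINE_CAPS[tier]
    turnover_cap = worldwide_turnover_eur * percent // 100  # integer euros, avoids float rounding
    # Larger companies face whichever cap is higher; SMEs and start-ups whichever is lower.
    return min(fixed_cap, turnover_cap) if is_sme else max(fixed_cap, turnover_cap)


print(max_fine_eur("prohibited_practice", 1_000_000_000))         # 70000000
print(max_fine_eur("other_obligation", 1_000_000_000))            # 30000000
print(max_fine_eur("other_obligation", 10_000_000, is_sme=True))  # 300000
```

For a company with €1 billion in turnover, the percentage cap dominates; for a small firm, the SME rule keeps the ceiling proportionate to its size.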

This level of financial risk really drives home the need to start your compliance journey now. The longer you put it off, the more rushed and chaotic your efforts will be, which is a perfect recipe for expensive mistakes. By breaking the timeline into manageable steps and mapping your obligations to key dates, you can turn this overwhelming challenge into a planned, methodical process.

Your Action Plan for AI Act Readiness

Knowing the theory behind the EU's high-risk AI category is one thing, but putting that knowledge into practice is what really counts. It's time to shift from learning the rules to actually preparing for them. This is where you build a real-world plan to get your organization ready before the deadlines hit.

Think of compliance not as a bureaucratic checkbox, but as a blueprint for building AI that people can trust. Getting this right doesn't just keep you out of trouble with regulators—it gives you a serious edge. You'll be building a reputation based on responsible innovation, which is exactly what customers are looking for. Let’s get you started.

Kickstart Your Compliance Journey

The idea of starting can feel overwhelming, but it really just boils down to a few smart, initial moves. This isn't about solving everything at once; it's about taking the first critical steps to understand where you stand.

Appoint an AI Compliance Lead: First things first, someone needs to own this. Designate a person or a small team to be in charge of AI Act compliance. This gives you a clear point of accountability and ensures the project has a captain steering the ship.

Conduct a Comprehensive AI Audit: You can't manage what you don't know you have. Your next step is to create a complete inventory of every single AI system your organization uses, builds, or sells. Document exactly what each system does and why.

Perform an Initial Risk Classification: Go through your new inventory and screen each system against the Act's high-risk criteria. This is a preliminary check—the goal is to simply flag any system that might fall into the high-risk category for a much closer look later.
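The audit and initial classification steps can start as a simple structured record per system. This is a minimal sketch with hypothetical field and function names; the screening flags are filled in by the people doing the review, and anything flagged still needs the closer look described above.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class AISystemRecord:
    name: str
    role: str                               # e.g. "provider" (we build or sell it) or "deployer" (we use it)
    intended_purpose: str                   # what the system does, in plain language
    annex_iii_area: Optional[str] = None    # set during screening if the purpose matches Annex III
    annex_i_safety_component: bool = False  # set if it is a safety component of an Annex I product


def flag_for_review(inventory: List[AISystemRecord]) -> List[str]:
    """Preliminary screen: anything touching Annex I or Annex III gets a closer look."""
    return [r.name for r in inventory
            if r.annex_i_safety_component or r.annex_iii_area is not None]


inventory = [
    AISystemRecord("CV ranker", "deployer", "Shortlists job applicants", annex_iii_area="employment"),
    AISystemRecord("Stock forecaster", "provider", "Predicts warehouse inventory levels"),
    AISystemRecord("Braking assist", "provider", "Triggers emergency braking",
                   annex_i_safety_component=True),
]
print(flag_for_review(inventory))  # ['CV ranker', 'Braking assist']
```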

If there's one thing to take away, it's this: you need to start now. The deadlines are real, and the penalties for being caught unprepared are no joke. That initial audit and classification are the bedrock of your entire compliance strategy.

Build a Framework for the Future

With a clear inventory in hand, you can move on to the next phase: weaving compliance into the very fabric of how you operate. This goes way beyond just paperwork. It’s about creating a culture of proactive governance where responsible AI is the default, not an afterthought.

Your action plan from here should include:

- Assess Data Governance Practices: Take a hard look at the data you're using to train your models, especially for those systems you flagged as potentially high-risk. Is it high-quality? Is it relevant? Most importantly, where might bias be hiding?

- Develop a Documentation Strategy: The Act demands extensive technical documentation for high-risk systems. Start figuring out your process for creating and maintaining it. To make this less painful, you can look into specialized tools like risk control software that help automate much of the work.

- Train Your Teams: Everyone needs to be on the same page. Make sure your developers, product managers, and legal teams all understand their specific responsibilities under the Act. A well-informed team is your best defense against accidental compliance slip-ups.

Frequently Asked Questions

Got questions about the EU AI Act's high-risk category? You're not alone. Let's break down some of the most common sticking points to give you a clearer picture of what these rules actually mean for your business.

Is My AI System High-Risk Just Because It Uses Machine Learning?

Not necessarily. What matters isn't the technology itself, but how you plan to use it. Think of it this way: the AI's intended purpose is the deciding factor.

An AI system only gets the high-risk label if it's used as a safety feature in a regulated product (like a sensor that stops a factory robot from causing harm) or if it's used for one of the specific purposes listed in Annex III—things like sorting job applications or deciding who gets a loan. A machine learning model that just optimizes warehouse inventory, on the other hand, would almost certainly be considered minimal risk.

What's the Very First Thing My Company Should Do to Get Ready?

Start by taking stock. Your immediate priority should be to create a detailed inventory of every single AI system you're involved with, whether you're building it, using it, or selling it.

For each system on your list, you need to clearly define and document its intended purpose. This internal audit is the bedrock of your compliance strategy—it will tell you which systems might fall into the high-risk bucket and guide every step you take from here on out.

Can We Change a High-Risk System to Make It Low-Risk?

Yes, in some cases, you can. Since the classification is all about the AI's intended use, changing its function or how it's deployed can sometimes shift its risk level.

Take an AI tool that automatically screens and shortlists job candidates: that's a classic high-risk scenario. But what if you tweaked it? Instead of making decisions, it now only suggests relevant keywords for recruiters to use in their search, and a human makes all the critical calls. Under Article 6(3), this kind of change could take the system out of the high-risk category, but only if it no longer materially influences the outcome and doesn't profile candidates. You'll need to document that assessment carefully, and the Act still requires you to register the system in the EU database.

Does the AI Act Affect Companies Outside the EU?

Absolutely. This is a crucial point many non-EU companies miss. The EU AI Act has a long reach, extending far beyond European borders.

The Act applies to any provider who places an AI system on the EU market or puts one into service within the Union, regardless of where that provider is based. It also covers deployers (the Act's term for organizations using AI systems) located inside the EU.

Bottom line: if your AI system is placed on the EU market, used in the EU, or produces outputs that are used in the EU, you need to comply. It doesn't matter if your company is headquartered in New York, Tokyo, or anywhere else.

Feeling overwhelmed by the EU AI Act? We get it. ComplyACT AI is designed to guarantee compliance in just 30 minutes. Our platform helps you auto-classify your systems, generate the required technical documentation, and stay audit-ready. You can stop worrying about fines and get back to building great products. Learn how ComplyACT AI can protect your business.