Your Guide to the EU Artificial Intelligence Act

The EU Artificial Intelligence Act is a landmark piece of legislation—the world's first comprehensive law dedicated entirely to AI. Its goal is to make sure AI systems are safe, transparent, and don't trample on fundamental human rights. Essentially, it’s about building public trust so that artificial intelligence can grow in a healthy, responsible way.

Unpacking the EU Artificial Intelligence Act

AI is no longer a sci-fi concept; it's woven into the fabric of our lives. It helps decide who gets a loan, what medical diagnosis is likely, and even who gets a job interview. With stakes this high, the need for clear ground rules has become undeniable. The EU AI Act is Europe's answer.

This isn't about putting the brakes on innovation. Far from it. The real aim is to create a secure, ethical playing field where AI can thrive without putting people at risk.

Think of it like the safety regulations that govern cars. Before seatbelts, airbags, and crash tests became standard, the auto industry was still innovating—but the human cost was huge. The EU AI Act applies that same logic to AI, building a framework that protects citizens while nudging developers to build safer, more reliable technology. It's about getting ahead of the risks before they become widespread problems.

Core Mission of the Regulation

At its core, the EU Artificial Intelligence Act is trying to do two things at once: encourage the development of trustworthy AI while fiercely protecting the rights of EU citizens. It does this by creating a single, harmonized set of rules for all member states. This consistency is a huge deal—it simplifies the market for businesses and gives developers the legal clarity they need to invest and build with confidence.

The Act’s main objectives are pretty straightforward:

- Ensure Safety and Rights: To shield people from the potential harm that could come from a poorly built or maliciously used AI system.

- Build Public Trust: To foster widespread confidence in AI by making systems more transparent and accountable.

- Promote Innovation: To give developers clear, predictable rules so they can create new AI tools without legal uncertainty.

- Strengthen Governance: To establish a solid enforcement system that makes sure everyone follows the rules across the entire EU.

By setting these clear standards, the EU is effectively positioning itself as a global leader in ethical tech. The Act isn't just a set of rules; it's a blueprint for how to balance technological progress with human-centric values. The whole point is to ensure AI serves people, not the other way around.

How the Risk-Based Approach Works

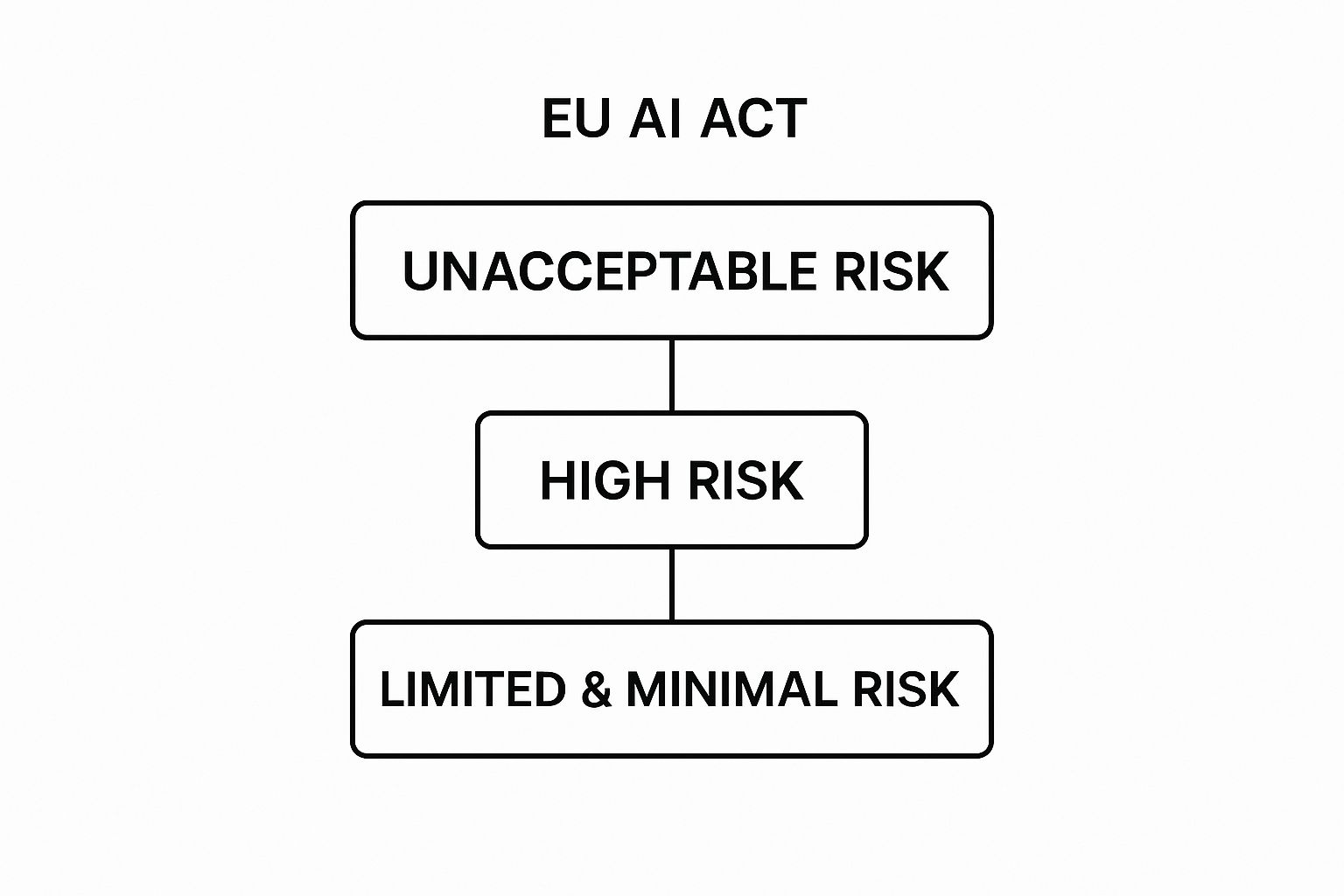

Instead of painting all AI with the same broad brush, the EU AI Act takes a much smarter, more practical approach. Think of it like a traffic light system. It applies the toughest, most restrictive rules only where the potential for real-world harm is highest, creating a tiered framework that forms the very heart of the legislation.

This structure is all about pragmatism. It focuses regulatory firepower on mitigating genuine dangers without stifling innovation in low-risk areas. It’s a sensible way to govern a technology as vast and varied as artificial intelligence.

At its core, the EU Artificial Intelligence Act classifies AI systems based on their potential to impact our safety and fundamental rights. For instance, any AI system designed to manipulate human behavior to cause harm or exploit vulnerabilities is simply banned. Then you have high-risk systems—those used in critical infrastructure, education, law enforcement, and hiring—which face a gauntlet of strict requirements covering data quality, documentation, human oversight, and transparency. You can get a deeper sense of these compliance hurdles from Cooley's analysis of the EU AI Act.

The diagram at the top of this section gives a visual breakdown of the different risk tiers.

As you can see, the Act stacks the categories logically. Unacceptable risks are at the very top, followed by the heavily regulated high-risk tier, and finally, the much larger categories of lower-risk systems at the bottom.

To make this crystal clear, here's a simple table summarizing the four risk levels.

EU AI Act Risk Levels at a Glance

| Risk Level | Description and Examples | Key Obligation |
|---|---|---|
| Unacceptable | AI systems considered a clear threat to safety and fundamental EU rights. Examples include social scoring by public or private actors, or AI that manipulates and exploits vulnerable people. | Banned entirely. These systems are not allowed on the EU market. |
| High | AI that could negatively impact safety or fundamental rights. Think AI in medical devices, critical infrastructure, hiring processes, or law enforcement. | Strict compliance. Must undergo conformity assessments, implement risk management, and maintain human oversight. |
| Limited | AI systems that require transparency so users know they are interacting with a machine. Chatbots and deepfakes are prime examples. | Transparency. Users must be clearly informed that they are dealing with an AI system or AI-generated content. |
| Minimal | The vast majority of AI systems, posing little to no risk. This includes things like AI-powered spam filters or video games. | No specific obligations. Voluntary codes of conduct are encouraged but not required. |

This tiered approach ensures that the rules are proportionate to the risk, letting developers of low-risk AI innovate freely while holding high-risk systems to a much higher standard.
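If you track AI systems in an internal compliance tool, the tiers and their headline obligations map naturally onto a small data model. The Python sketch below is hypothetical: `RiskTier`, `KEY_OBLIGATION`, `may_deploy`, and `stricter` are illustrative names, not anything defined by the Act, and the obligation strings paraphrase the table above.

```python
from enum import Enum


class RiskTier(Enum):
    """EU AI Act risk tiers; a higher value means stricter rules."""
    MINIMAL = 1
    LIMITED = 2
    HIGH = 3
    UNACCEPTABLE = 4


# Headline obligation per tier, paraphrased from the summary table (illustrative only).
KEY_OBLIGATION = {
    RiskTier.UNACCEPTABLE: "Banned: may not be placed on the EU market",
    RiskTier.HIGH: "Conformity assessment, risk management, human oversight",
    RiskTier.LIMITED: "Transparency: tell people they are dealing with AI",
    RiskTier.MINIMAL: "No specific obligations; voluntary codes of conduct",
}


def may_deploy(tier: RiskTier) -> bool:
    """Only the unacceptable tier is prohibited outright."""
    return tier is not RiskTier.UNACCEPTABLE


def stricter(a: RiskTier, b: RiskTier) -> RiskTier:
    """Return the more restrictive of two tiers, e.g. for a system with several uses."""
    return a if a.value >= b.value else b
```

Ranking the tiers lets a tool keep the most restrictive classification when one system serves several purposes, so `stricter(RiskTier.LIMITED, RiskTier.HIGH)` yields `RiskTier.HIGH`. That is a simplification: the tiers are not strictly exclusive, and a high-risk system that also interacts with people carries transparency duties on top of its high-risk obligations.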

Unacceptable Risk: Red Light

This category is a complete no-go. It covers AI systems seen as a direct threat to people's safety, livelihoods, and fundamental rights. Under the Act, these applications are banned outright within the EU, apart from a few tightly defined exceptions.

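A classic example is "social scoring": grading people based on their social behavior or personal characteristics in ways that lead to unfair treatment, a concept that runs completely contrary to EU values. The ban applies whether the scoring is run by a government or a private company. Other prohibited AI includes: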

- Manipulative AI: Systems built to subconsciously warp a person's behavior in a way that could lead to physical or psychological harm.

- Exploitative AI: Tools designed to take advantage of the vulnerabilities of specific groups, like children or individuals with disabilities.

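- Real-time Biometric Identification: The use of real-time remote biometric identification (like facial recognition) in publicly accessible spaces for law enforcement, except in a handful of narrowly defined situations, such as searching for abduction victims or preventing an imminent terrorist threat, which generally require prior authorization from a judicial or independent authority.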

High-Risk: Yellow Light

This next tier is for AI that, while not an "unacceptable" threat, could still seriously impact people's safety or fundamental rights. These systems aren't banned, but they have to proceed with extreme caution, meeting a long list of strict requirements both before and after they launch.

The guiding principle here is simple: the higher the risk, the stricter the rules. This ensures that powerful AI used in sensitive areas like healthcare or hiring is held accountable.

These systems must go through conformity assessments, implement robust risk management processes, and be trained on high-quality data. Solid information technology risk management is absolutely essential for any organization deploying these tools.

Examples of high-risk AI include:

- AI used as a safety component in products like self-driving cars or robotic surgery devices.

- Systems that determine access to education, like software used to score exams or screen university applications.

- AI used in employment for resume screening, performance reviews, or promotion decisions.

Limited and Minimal Risk: Green Light

The good news for most developers is that the vast majority of AI systems will fall into the bottom two tiers, which face far lighter rules. This is the "green light" category, designed to let innovation thrive with very little friction.

Limited Risk systems, like chatbots or tools that generate deepfakes, are mainly subject to transparency obligations. The crucial thing is that users must always know they're interacting with an AI or looking at synthetic content. This simple step prevents deception and helps people make informed decisions.
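In practice, the transparency duty often comes down to two small engineering habits: disclose at the start of a conversation, and label generated output. The sketch below is a hypothetical illustration. The Act requires that people be informed and that synthetic content be marked in a machine-readable way, but it does not prescribe this wording, these classes, or these metadata keys.

```python
from dataclasses import dataclass, field

# Hypothetical wording; the Act requires clear disclosure but does not prescribe text.
AI_DISCLOSURE = "You are chatting with an AI assistant, not a human."


@dataclass
class ChatSession:
    """Tracks whether this user has already been told they are talking to an AI."""
    disclosed: bool = False

    def reply(self, text: str) -> str:
        # Prepend the disclosure to the first message of the session only.
        if not self.disclosed:
            self.disclosed = True
            return f"{AI_DISCLOSURE}\n\n{text}"
        return text


@dataclass
class GeneratedContent:
    """Synthetic output carrying a machine-readable 'AI-generated' label."""
    body: bytes
    metadata: dict = field(default_factory=dict)


def label_synthetic(body: bytes, generator: str) -> GeneratedContent:
    # Hypothetical metadata keys; a real deployment might use a provenance standard instead.
    return GeneratedContent(body, {"ai_generated": True, "generator": generator})
```

Keeping the disclosure in one place means every channel that reuses `ChatSession` gets the same notice, rather than relying on each front end to remember it.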

Minimal Risk applications cover everything else, from AI-powered video games to the spam filter in your inbox. These systems are considered to pose little to no danger and face no specific obligations under the Act, beyond the general duty on all providers and deployers to ensure their staff have a sufficient level of AI literacy. While the EU encourages providers to adopt voluntary codes of conduct, it's not a requirement.

Navigating the Gauntlet of High-Risk AI Requirements

While the EU AI Act lays out several tiers of rules, the 'High-Risk' category is where the real work begins for most companies. This is where the regulation gets dense, the compliance burden is heaviest, and the stakes are sky-high.

Any AI system that lands in this bucket faces a tough set of obligations. These aren't just best practices; they are mandatory hoops you must jump through to ensure your system is safe, reliable, and fair from the drawing board to deployment.

For anyone building or using AI in sensitive fields, getting a firm grip on these rules is non-negotiable. Think of them as the blueprint for responsible innovation. Each requirement is a structural support meant to prevent harm, lock in accountability, and keep humans in the driver's seat.

What Puts an AI System in the High-Risk Category?

So, what exactly pushes an AI system into this high-stakes category? The Act has a two-pronged test.

First, a system is high-risk if it is a safety component of a product (or is itself a product) covered by existing EU safety laws, and that product has to pass a third-party conformity assessment before it can be sold. We're talking about things like medical devices, cars, elevators, or even children's toys, products where a failure could have immediate and severe consequences.

Second, and more broadly, the Act provides a specific list of use cases (Annex III) that are deemed high-risk by default. This is because of the serious potential for them to impact people's fundamental rights, safety, or livelihood.

This list covers a lot of ground, including AI systems used for:

- Biometric identification and classifying people.

- Managing critical infrastructure like our power grids and water supplies.

- Education, such as scoring exams or deciding who gets into a university.

- Employment, from resume-screening software to tools that decide who gets a promotion.

- Accessing essential services, like the credit-scoring algorithms that approve or deny loans.

- Law enforcement, including any tool that helps assess the reliability of evidence in a case.

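If your AI fits any of these descriptions, you're on the hook for the full set of high-risk compliance duties by default. The Act does let providers exempt an Annex III system that poses no significant risk, for example one that only performs a narrow procedural task, but the exemption never applies to systems that profile individuals, and you must document your assessment if you rely on it.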
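The two-pronged test can be roughed out as a triage function. This is a simplified, hypothetical sketch: `SystemProfile`, `ANNEX_III_AREAS`, and `is_presumptively_high_risk` are illustrative names, and the area labels paraphrase the list above rather than quoting Annex III. The first prong also requires that the product undergo a third-party conformity assessment. The function ignores the Act's exemption for Annex III systems that pose no significant risk, so treat a `True` result as a trigger for legal review, not a final classification.

```python
from dataclasses import dataclass
from typing import Optional

# Paraphrased Annex III areas from the list above (illustrative, not the legal text).
# The real Annex III also covers e.g. migration and border control and the administration of justice.
ANNEX_III_AREAS = {
    "biometrics",
    "critical_infrastructure",
    "education",
    "employment",
    "essential_services",
    "law_enforcement",
}


@dataclass
class SystemProfile:
    name: str
    # Prong 1: safety component of (or itself) a product covered by EU product-safety law...
    annex_i_safety_component: bool = False
    # ...where that product must pass a third-party conformity assessment.
    third_party_assessment_required: bool = False
    # Prong 2: the use-case area the system is deployed in, if any.
    use_case_area: Optional[str] = None


def is_presumptively_high_risk(profile: SystemProfile) -> bool:
    """Return True if either prong of the high-risk test is met."""
    prong_one = profile.annex_i_safety_component and profile.third_party_assessment_required
    prong_two = profile.use_case_area in ANNEX_III_AREAS
    return prong_one or prong_two
```

A resume screener tagged `use_case_area="employment"` comes back `True`, while a spam filter with no listed area and no regulated product comes back `False`.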

The Core Compliance Checklist for High-Risk AI

Fulfilling the obligations for high-risk AI isn't a simple "check-the-box" task. It's a deep, operational commitment that demands careful planning from day one. Here’s a rundown of the key pillars you'll need to build your compliance strategy on.

1. A Watertight Risk Management System: This is your foundation. You are required to establish, document, and maintain a continuous risk management process. That means you need to be constantly identifying, evaluating, and mitigating any potential risks your AI could pose to people's health, safety, or fundamental rights. This isn't a one-time audit; it's an ongoing cycle. Using dedicated risk control software can be a huge help in juggling this complex process.

2. High-Quality Data Governance: Garbage in, garbage out has never been more true, or more regulated. The data you use to train, validate, and test your AI models will be put under a microscope. The Act demands that your datasets are relevant, representative, and as free from errors and biases as you can make them. This means your data governance has to be top-notch.

3. Meticulous Technical Documentation: Get ready to document everything. Before your AI system can hit the market, you must create and maintain a comprehensive technical file. This is your proof of compliance. It needs to lay out the AI's purpose, capabilities, limitations, and the specific design choices you made along the way.

The big takeaway here is that you need to be proactive about record-keeping. Regulators can ask you to demonstrate compliance at any time, which makes thorough, living documentation an absolute must.

4. Automatic Record-Keeping (Logging): High-risk systems have to be able to log their activity automatically while in use. These logs provide a crucial audit trail, ensuring you have the traceability needed to monitor the system's performance, investigate any strange behavior, and figure out what went wrong if an incident occurs.

5. Total Transparency for Users: People need to know what they're dealing with. Your AI must be designed to be understandable and usable. That means providing crystal-clear instructions that explain its purpose, its accuracy levels, and any known risks. The goal is to give users enough information to interpret the system's output correctly and avoid misuse.

6. Meaningful Human Oversight: This is a deal-breaker for regulators. High-risk AI can't be a black box that operates with total autonomy. You must design the system so that a human can effectively supervise it. This could mean having the power to intervene, override a decision, or simply hit the "off" switch if things go haywire. The level of oversight must match the level of risk, ensuring a human is always ultimately accountable.
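Pillars 4 and 6 tend to meet in the same piece of code: each output is logged automatically, and a human reviewer can override it. The sketch below uses only Python's standard `logging`, `json`, `dataclasses`, and `datetime` modules. The Act requires logging and effective human oversight for high-risk systems but does not prescribe a log format, so the record fields and the `record_decision` helper are assumptions chosen for traceability.

```python
import json
import logging
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional

audit_logger = logging.getLogger("ai_audit")


@dataclass
class DecisionRecord:
    """One auditable event: what the model said and what a human did with it."""
    system_id: str
    model_version: str
    input_ref: str          # a reference to the input, not the raw personal data
    model_output: str
    final_output: str
    reviewer: Optional[str]
    overridden: bool
    timestamp: str


def record_decision(system_id: str, model_version: str, input_ref: str,
                    model_output: str, reviewer: Optional[str] = None,
                    human_decision: Optional[str] = None) -> DecisionRecord:
    """Log a model output, applying a human override when one is supplied."""
    final = human_decision if human_decision is not None else model_output
    record = DecisionRecord(
        system_id=system_id,
        model_version=model_version,
        input_ref=input_ref,
        model_output=model_output,
        final_output=final,
        reviewer=reviewer,
        overridden=final != model_output,
        timestamp=datetime.now(timezone.utc).isoformat(),
    )
    # One JSON object per line keeps the trail easy to search and replay.
    audit_logger.info(json.dumps(asdict(record)))
    return record
```

Storing a reference to the input rather than the raw data keeps the audit trail from becoming a second copy of personal data. Note that nothing is written until you attach a durable handler, such as a `logging.FileHandler`, to the `ai_audit` logger and set its level to `INFO`.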

Key Dates and Compliance Timelines

Understanding the EU AI Act is one thing, but knowing when its different rules actually start biting is a whole different ball game. This isn't a single, flip-the-switch event. The Act is being rolled out in carefully planned phases over several years, giving everyone time to get their house in order. But that also means you need a clear roadmap of the deadlines to know what to tackle first.

The journey from a proposal to a fully enforced law has been a long one. It all started when the European Commission first tabled the idea back on April 21, 2021. After a ton of debate and fine-tuning, the law was formally adopted in May 2024, published in the Official Journal of the EU on July 12, 2024, and entered into force on August 1, 2024. That date fired the starting pistol on a 24-month transition period for most of the Act's requirements. If you're curious about the whole legislative saga, you can dig into the full timeline and all its stages to see how we got here.

Think of this timeline less as a list of dates and more as your strategic guide. It’ll help you figure out where to put your resources and when, ensuring you're ready for each new wave of rules.

The First Wave of Enforcement

The first big deadline is coming up fast. Regulators are going after the most dangerous stuff first—the AI applications deemed to have an ‘Unacceptable Risk.’ This initial push shows just how serious the EU is about getting clear threats to our fundamental rights off the market as quickly as possible.

Here are the first key dates to circle on your calendar in bright red ink:

- Early 2025 (February 2, 2025, 6 months after entry into force): The outright ban on prohibited AI practices kicks in. Any systems used for things like government-led social scoring, manipulative "dark patterns," or exploiting the vulnerable have to be gone.

- Mid-2025 (August 2, 2025, 12 months after entry into force): The rules for General-Purpose AI (GPAI) models become mandatory. This is a big one, as it targets the powerful foundation models that are the engine for so many other AI tools.

These early deadlines send a crystal-clear message. The EU is tackling the highest-risk and most foundational parts of the AI world first, setting a firm tone for what’s to come.

Getting compliant with the EU AI Act is a marathon, not a sprint. The staggered deadlines are your chance to methodically bring your AI systems into line, starting with the most urgent stuff and working your way through the rest.

The Main Compliance Deadlines

As we move further down the timeline, the rules expand to cover the huge category of high-risk AI systems and the rest of the Act's general requirements. These later deadlines give companies the breathing room they need to put complex technical and organizational changes in place, like building proper risk management systems and ensuring meaningful human oversight.

To make this easier to track, we've put together a table summarizing the key enforcement dates.

EU AI Act Compliance Timeline

This table breaks down the key dates when different parts of the EU AI Act become enforceable, helping you build a clear and practical compliance roadmap.

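| Compliance Deadline | Applicable Rule | Who It Affects |
|---|---|---|
| August 2, 2026 (24 months after entry into force) | Most remaining provisions, including the obligations for high-risk systems listed in Annex III and the transparency rules for limited-risk AI. | Providers and deployers of high-risk AI in areas such as hiring, education, credit scoring, and law enforcement, plus providers of chatbots and synthetic-content tools. |
| August 2, 2027 (36 months after entry into force) | High-risk obligations for AI that serves as a safety component of products already covered by EU product-safety laws (Annex I). | Makers of regulated products that embed AI, such as medical devices, machinery, elevators, and toys. |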
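To keep the roadmap in one place, the phased dates can be encoded and queried. This sketch hard-codes the application dates set out in Article 113 of the adopted text (entry into force on August 1, 2024), assumes no later amendments to the timeline, and uses hypothetical names (`MILESTONES`, `rules_in_force`, `next_milestone`).

```python
from datetime import date
from typing import Optional

# Application dates from Article 113 of the adopted text (in force since 1 August 2024).
# Kept in chronological order so the helpers below can rely on it.
MILESTONES: list[tuple[date, str]] = [
    (date(2025, 2, 2), "Prohibited AI practices banned"),
    (date(2025, 8, 2), "General-purpose AI model obligations apply"),
    (date(2026, 8, 2), "Most remaining rules apply, incl. Annex III high-risk obligations"),
    (date(2027, 8, 2), "High-risk obligations for AI in Annex I regulated products"),
]


def rules_in_force(on: date) -> list[str]:
    """Labels of every milestone that has taken effect on the given date."""
    return [label for start, label in MILESTONES if on >= start]


def next_milestone(on: date) -> Optional[tuple[date, str]]:
    """The next milestone strictly after the given date, or None if all have passed."""
    for milestone in MILESTONES:
        if milestone[0] > on:
            return milestone
    return None
```

For example, `rules_in_force(date(2025, 9, 1))` returns the first two labels, and `next_milestone` for the same date points to August 2, 2026.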

The longer runway for high-risk systems is a practical acknowledgment of just how much work is involved. Meeting the strict standards for data governance, technical documentation, and official conformity assessments takes time and serious investment.

By mapping out this entire compliance journey, you can build a step-by-step plan that avoids that last-minute panic and helps your organization navigate this new regulatory world with confidence.

Understanding the Penalties for Non-Compliance

The EU AI Act isn't just a set of recommendations; it has real teeth. The penalties for getting it wrong are designed to make even the largest global companies sit up and take notice. Ignoring these rules isn't a minor slip-up—it's a major financial and reputational gamble.

Much like the GDPR, the Act’s fines are structured to hurt. They're often calculated as a percentage of a company's total worldwide annual turnover, ensuring the punishment scales with the size of the business. The message from Brussels is loud and clear: the cost of non-compliance will dwarf the investment needed to get it right from the start.

The Tiers of Financial Penalties

The fines aren't a one-size-fits-all punishment. Instead, they’re tied directly to the risk-based framework we've already covered. The most serious violations—those that pose the greatest threat to people's rights and safety—come with the most severe consequences.

Here's how the penalty brackets break down:

- Prohibited AI Practices: This is the top tier. If you violate the ban on unacceptable-risk AI systems (think social scoring or manipulative tech), you're looking at a massive fine. The penalty can go up to €35 million or 7% of your company's global annual turnover, whichever is higher.

- High-Risk System Violations: Failing to meet the strict obligations for high-risk AI is also a costly mistake. Forgetting to conduct conformity assessments or ensure proper human oversight can trigger fines of up to €15 million or 3% of global turnover.

- Providing Incorrect Information: Don't even think about misleading the authorities. Supplying false or incomplete information to regulators comes with its own hefty price tag: up to €7.5 million or 1% of global turnover.
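The "whichever is higher" rule makes the cap easy to compute. The sketch below encodes the three brackets from Article 99 of the adopted text; for SMEs and start-ups, the Act applies whichever amount is lower. These are maximums, not predictions, since authorities set actual fines case by case. The names `Violation` and `max_fine` are illustrative.

```python
from enum import Enum


class Violation(Enum):
    # (fixed cap in euros, share of worldwide annual turnover), per Article 99
    PROHIBITED_PRACTICE = (35_000_000, 0.07)
    OTHER_OBLIGATIONS = (15_000_000, 0.03)      # includes high-risk requirements
    INCORRECT_INFORMATION = (7_500_000, 0.01)


def max_fine(violation: Violation, worldwide_turnover_eur: float, is_sme: bool = False) -> float:
    """Upper bound of the fine: the higher of the two caps, or the lower one for SMEs."""
    fixed_cap, turnover_share = violation.value
    turnover_cap = turnover_share * worldwide_turnover_eur
    return min(fixed_cap, turnover_cap) if is_sme else max(fixed_cap, turnover_cap)
```

For a hypothetical company with €2 billion in worldwide turnover, a prohibited-practice violation is capped at about €140 million, because 7% of turnover exceeds the €35 million fixed amount. For an SME with €10 million in turnover, the same violation is capped at about €700,000, the lower of the two.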

This tiered system reinforces the core principle of the EU Artificial Intelligence Act: the bigger the potential for harm, the bigger the accountability.

The EU AI Act's penalty framework is a clear signal that regulators are moving beyond guidance and into enforcement. For any organization deploying AI, understanding these financial risks is the first step in building a strong business case for proactive governance and compliance.

Beyond the Financial Impact

While those multi-million euro fines are what grab the headlines, the damage from non-compliance goes much deeper. The blow to your reputation can be even more devastating than the financial hit.

Imagine being publicly named for violating a law designed to protect fundamental human rights. That kind of news can shatter customer trust overnight, scare off partners, and make it nearly impossible to attract top talent.

On top of the fines, national authorities can also force you to pull a non-compliant AI system from the market entirely or recall it from existing users. This could derail your operations, torpedo a business model you've spent years building, and turn a major investment into a sunk cost. The takeaway is simple: compliance isn't just about avoiding fines; it's about building a sustainable, trustworthy business.

Getting Your Business Ready for the EU AI Act

With the rules of the EU Artificial Intelligence Act now set in stone, the time for hypotheticals is over. It’s time to act. Getting ready for this regulation isn't just a job for the legal team—it demands a coordinated effort from every corner of your organization. The deadlines are closer than they appear, and the work you do now will save you from a major headache later.

Think of it like getting a ship ready for a long journey. You wouldn't just check the engine. You’d inspect every part of the vessel, chart your course, and make sure your crew knows exactly what to do. AI compliance is the same: you need to take stock of all your systems, understand the new regulatory waters, and have a skilled team ready to navigate.

It all starts with one simple, but fundamental, question: What AI are we actually using?

Start With a Full AI System Inventory

You can't manage what you don't know you have. Your first concrete step is to create a complete inventory of every single AI system your company develops, uses, or buys from a third-party vendor. This is the bedrock of your entire compliance strategy.

This can't be just a simple spreadsheet. For every system, you need to dig into the details to understand its purpose and potential impact.

Your AI inventory should capture:

- System Name and Purpose: What do you call it, and what business problem does it solve?

- Data Sources: What data feeds the system? Where does it come from, and how is it used for training and operation?

- Decision-Making Impact: Does the AI make or influence important decisions about people’s lives? Think hiring, credit approvals, or even medical diagnoses.

- Vendor Information: If you bought it off the shelf, who is the provider? What have they said about their own compliance?
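An inventory works best as structured records rather than free text, so it can be filtered and reviewed. The dataclass below mirrors the four fields above. `formally_approved` is an extra, hypothetical field for spotting tools that teams adopted without approval, and the review flags are an illustrative prioritization heuristic, not a requirement of the Act.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class AIInventoryEntry:
    """One row of an AI system inventory."""
    name: str
    purpose: str
    data_sources: list[str] = field(default_factory=list)
    affects_decisions_about_people: bool = False
    vendor: Optional[str] = None          # None for systems built in-house
    vendor_compliance_notes: str = ""
    formally_approved: bool = False       # hypothetical field for shadow-AI detection


def review_flags(entry: AIInventoryEntry) -> list[str]:
    """Reasons an entry should be reviewed first (illustrative heuristic)."""
    flags = []
    if entry.affects_decisions_about_people:
        flags.append("influences decisions about people")
    if entry.vendor is not None and not entry.vendor_compliance_notes:
        flags.append("no vendor compliance information")
    if not entry.formally_approved:
        flags.append("possible shadow AI")
    return flags
```

A bought-in resume ranker with no compliance notes from its vendor would collect the first two flags, which is exactly the kind of entry worth classifying first.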

This audit process often uncovers "shadow AI"—tools that teams started using on their own without any formal approval. Finding these hidden systems is absolutely critical to avoid major blind spots.

Classify Each System’s Risk Level

Once you know what you have, you need to figure out where each system fits within the EU AI Act’s risk framework. This is the classification step, where you’ll sort your AI into one of four buckets: unacceptable, high, limited, or minimal risk.

This is arguably the most important part of the process. If you misclassify a high-risk system as minimal, you could be setting yourself up for some of the law’s steepest penalties.

This isn't just a box-ticking exercise. It requires context. The same ranking algorithm might be minimal risk when it recommends products but high-risk when it screens job applicants, because AI used in recruitment is listed as high-risk in Annex III. The goal is to map every system you use against the specific definitions laid out in the regulation.

For any system that looks like it could be high-risk, you must kick off a formal risk assessment. This means identifying any potential harm it could cause—to fundamental rights, health, or safety—and documenting exactly how you plan to manage and reduce those risks.
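A formal risk assessment is often kept as a living risk register. The Act requires risks to be identified, evaluated, and mitigated, but it does not mandate a scoring method: the 1-to-5 severity and likelihood scales, the multiplication, and the default threshold of 8 below are assumptions borrowed from common risk-management practice, and `Risk` and `open_risks` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    description: str
    affected: str          # e.g. "fundamental rights", "health", "safety"
    severity: int          # 1 (negligible) to 5 (critical), assumed scale
    likelihood: int        # 1 (rare) to 5 (almost certain), assumed scale
    mitigation: str = ""   # documented mitigation, empty if none yet

    def __post_init__(self) -> None:
        # Reject scores outside the assumed 1-to-5 scales.
        for name in ("severity", "likelihood"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        return self.severity * self.likelihood


def open_risks(register: list[Risk], threshold: int = 8) -> list[Risk]:
    """Risks at or above the threshold with no documented mitigation, worst first."""
    return sorted(
        (r for r in register if r.score >= threshold and not r.mitigation),
        key=lambda r: r.score,
        reverse=True,
    )
```

Running `open_risks` regularly turns the register into the ongoing cycle the Act expects: anything it returns is a risk you have identified but not yet documented a response to.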

Build Your Governance Framework

Compliance with the EU AI Act is an ongoing commitment, not a one-off project. To handle it over the long term, you need a solid governance framework and a dedicated team to manage it.

This team should be cross-functional, pulling in people with different perspectives from across the business. A typical AI governance team includes leaders from:

- Legal and Compliance: To interpret the law and keep everyone on track.

- Technical and Data Science: The people who actually build and understand the systems.

- Business Operations: To see how these changes will affect products and customers.

- Cybersecurity: To keep the AI systems and their data secure.

This group will own the process: creating policies, managing documentation, and monitoring systems after they go live. Strong training and change management are vital to make sure everyone in the company knows what’s expected of them. You can learn more by reading our guide on implementing successful change management programs.

By taking these proactive steps, you can build a compliance program that doesn’t just avoid fines but also earns the trust of your customers and partners.

Your Questions About the EU AI Act, Answered

As the EU Artificial Intelligence Act gets closer to becoming fully enforceable, the questions are piling up. Let's tackle some of the most common ones to clear up the confusion around this landmark regulation.

Does the EU AI Act Apply to Companies Outside the EU?

Yes, it absolutely does. Just like the GDPR, the AI Act has a long reach. If you put an AI system on the market in the EU, or if its output is used there, you're on the hook—no matter where your company is headquartered.

Think of it this way: a US-based company selling a high-risk AI-powered hiring tool to a client in Germany must follow every rule. The moment your AI's impact crosses into the EU, the Act applies. This global scope is a deliberate move to ensure everyone playing in the EU market adheres to the same high standards.

What Is a General-Purpose AI Model Under the Act?

A General-Purpose AI (GPAI) model is exactly what it sounds like—an AI with wide-ranging abilities that can be adapted to many different jobs. We're talking about the big foundational models here, like the large language models (LLMs) behind chatbots or the systems that generate images from text.

The EU AI Act singles them out because so many other AI tools are built on top of them. Providers of these GPAI models have their own set of rules, mainly centered on transparency. They need to create detailed technical documentation and share clear summaries of the data they used for training. If a model is powerful enough to be deemed a "systemic risk," the obligations get even tougher.

The Act treats GPAI models as a special case because of their foundational role. A single flaw or hidden bias in a popular GPAI model could create a massive ripple effect, showing up in the hundreds or thousands of different applications that rely on it.
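One concrete trigger for the "systemic risk" label: the Act presumes a GPAI model has high-impact capabilities when the cumulative compute used to train it is greater than 10^25 floating-point operations (the Commission can also designate models on other criteria and may update the threshold). The sketch below estimates training compute with a widely used rule of thumb of roughly 6 FLOPs per parameter per training token for dense transformer models. That approximation is an assumption from the machine-learning literature, not a method the Act prescribes, and the function names are hypothetical.

```python
# Presumption threshold for systemic risk under Article 51(2): more than 1e25 FLOPs.
SYSTEMIC_RISK_FLOPS = 1e25


def estimated_training_flops(parameters: float, training_tokens: float) -> float:
    """Rough dense-transformer estimate: about 6 FLOPs per parameter per token (heuristic)."""
    return 6 * parameters * training_tokens


def presumed_systemic_risk(parameters: float, training_tokens: float) -> bool:
    """True if the estimated training compute exceeds the presumption threshold."""
    return estimated_training_flops(parameters, training_tokens) > SYSTEMIC_RISK_FLOPS
```

By this estimate, a hypothetical 70-billion-parameter model trained on 15 trillion tokens comes to about 6.3 × 10^24 FLOPs, under the threshold, while a 400-billion-parameter model on the same data (about 3.6 × 10^25 FLOPs) would cross it. Providers should rely on their actual compute accounting rather than this back-of-the-envelope figure.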

Are Open-Source AI Models Exempt?

It’s complicated, but the short answer is: not always. The EU AI Act does include some exemptions for open-source models, trying not to kill the spirit of collaborative innovation. But there are big exceptions to this exemption.

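The free pass disappears if an open-source system is placed on the market or put into service as a high-risk system, falls into the unacceptable-risk category, or is subject to the transparency rules for chatbots and deepfakes. Open-source general-purpose models also keep some duties, such as publishing a summary of their training data, and lose the lighter treatment entirely if they are deemed a systemic risk. For instance, a university research team could release an open-source AI component without much regulatory headache. But if a company takes that exact same component and builds it into a commercial medical device, that product has to meet all the demanding requirements for high-risk AI. It's all about the context.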

Trying to get your head around the EU Artificial Intelligence Act can feel overwhelming, but ComplyACT AI makes it straightforward. Our platform can automatically classify your AI systems, generate the necessary technical documentation, and get you audit-ready in about 30 minutes. You can avoid the stress of massive fines and keep up with regulatory demands. Visit the ComplyACT AI website to see how we can lock down your compliance.