EU Artificial Intelligence Act Summary Guide

The EU AI Act is, at its heart, the world's first comprehensive rulebook for artificial intelligence. It works by sorting AI systems based on the level of risk they might pose. Think of it like this: the rules for making a sandwich are a lot less strict than the rules for manufacturing a car engine. The AI Act applies that same common-sense logic to technology, all to keep people safe without stifling innovation.

What Is the EU AI Act?

The EU AI Act is a landmark regulation that sets the ground rules for how artificial intelligence is developed, sold, and used across the European Union. Its main purpose is to make sure AI technologies are safe, transparent, and don't trample on fundamental human rights. At the same time, the Act is designed to give businesses clear legal guidelines, which helps encourage investment and cement Europe's position as a hub for trustworthy AI.

Instead of a one-size-fits-all approach, the Act uses a practical, risk-based framework. This means the intensity of the rules an AI system must follow is directly proportional to the potential harm it could cause. This structure is key—it allows for creative freedom in low-risk applications while locking down systems that could impact people's safety or fundamental rights.

A Pioneering Legal Framework

The EU Artificial Intelligence Act (often just called the AI Act) is a true first. It's the first major legal framework on the planet built specifically to regulate AI. The goal is to create a single, harmonized set of rules for all 27 EU member states, putting human well-being at the center of AI governance.

The Act was formally adopted by the Council of the European Union on May 21, 2024, and it's not just a local affair. This regulation has a long reach: its rules apply to any company that puts an AI system on the EU market, even if that company isn't based there. If your AI's output is used in the EU, you need to pay attention. This global scope makes understanding the Act a business necessity for companies everywhere. For a deeper dive, you can track the official Artificial Intelligence Act developments.

At its core, the EU AI Act is all about building trust. By establishing clear standards and rules of the road, the legislation aims to give people confidence in AI technologies while giving developers the predictability they need to build responsibly.

To give you a clearer picture, here's a quick breakdown of the Act's foundational elements.

Key Pillars of the EU AI Act at a Glance

| Component | Brief Explanation |
|---|---|
| Primary Goal | To ensure AI systems are safe, transparent, and respect fundamental rights. |
| Main Mechanism | A risk-based approach, where rules are tied to an AI's potential for harm. |
| Geographic Scope | Applies to any AI system placed on the EU market, regardless of where the provider is based. |
| Risk Tiers | Unacceptable, High, Limited, and Minimal Risk. |
| Enforcement | Non-compliance can lead to significant fines, up to €35 million or 7% of global turnover. |

This table shows how the Act is structured to both protect citizens and create a stable environment for businesses to grow.

The Dual Goals of Safety and Innovation

The AI Act is a careful balancing act. On one side, it fiercely protects the safety and fundamental rights of individuals. On the other, it aims to strengthen the EU’s position as a tech leader and boost its economic competitiveness.

This dual focus is woven throughout the regulation by:

- Banning Unacceptable Risks: Certain AI applications are banned outright because they pose a clear threat to people. Think of things like government-run social scoring or manipulative systems that exploit vulnerabilities.

- Regulating High-Risk Systems: Strict, heavy-duty requirements apply to AI used in critical fields where the stakes are high, such as healthcare, employment decisions, and law enforcement.

- Ensuring Transparency: For other systems, the focus is on clarity. The law mandates that users must be told when they're interacting with an AI, like a chatbot, or viewing AI-generated content like a deepfake.

- Allowing Minimal Risk: The vast majority of AI applications—like AI-powered spam filters or the AI in video games—are considered minimal risk and can operate with few or no new rules.

Navigating the Four AI Risk Categories

The EU AI Act’s core idea isn't to wrap all artificial intelligence in the same red tape. Instead, it takes a much smarter, risk-based approach. Think of it like safety regulations for vehicles: the requirements for a passenger jet are worlds apart from those for a bicycle because the potential for harm is fundamentally different.

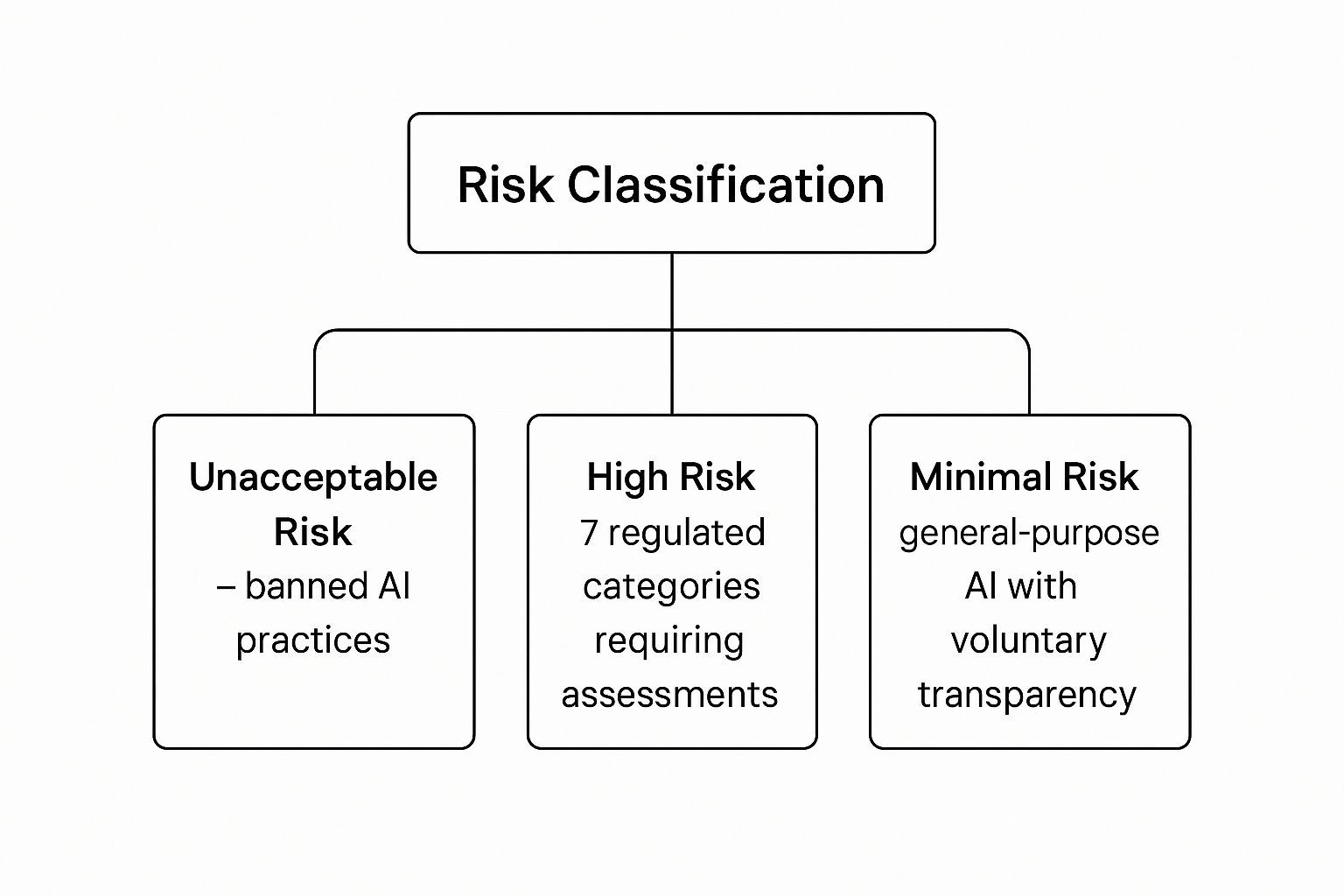

The Act applies this same logic, sorting AI systems into four distinct categories. Each tier is based on the potential risk a system poses to our health, safety, and fundamental rights. This lets the law be tough where it counts while giving innovation breathing room in lower-risk areas. Figuring out which category your AI falls into is the absolute first step toward compliance.

This visual gives you a great at-a-glance summary of the EU AI Act's risk pyramid, breaking down the relationship between the different tiers.

As you can see, the framework is built to put the tightest controls on the fewest applications—the ones with the highest potential for damage. This leaves the vast majority of AI systems free to grow and develop without heavy-handed oversight.

To make this clearer, the table below breaks down the four risk levels, showing what kinds of AI systems fit into each and what's expected of them.

EU AI Act Risk Levels and Requirements

| Risk Level | Examples of AI Systems | Primary Regulatory Obligation |
|---|---|---|
| Unacceptable | Social scoring by governments, real-time remote biometric identification in public spaces by law enforcement (narrow exceptions apply), manipulative or exploitative AI. | Outright Ban |
| High | AI in medical devices, recruitment software, critical infrastructure management, law enforcement tools. | Strict Compliance: Must meet rigorous requirements for risk management, data quality, transparency, human oversight, and cybersecurity before market entry. |
| Limited | Chatbots, deepfakes, AI-generated content. | Transparency: Users must be clearly informed that they are interacting with an AI system or viewing synthetic content. |
| Minimal | Spam filters, video game AI, inventory management systems, recommendation engines. | No new legal obligations. A voluntary code of conduct is encouraged. |

This tiered system ensures that the regulatory burden matches the actual risk, focusing intense scrutiny where it's most needed.
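As a rough illustration of the tiering described above, the four levels and their headline obligations can be written as a small lookup. This is only a sketch: the names `RiskTier`, `PRIMARY_OBLIGATION`, and `may_be_marketed`, along with the example inventory, are hypothetical, and deciding which tier a real system belongs to is a legal assessment that code cannot make for you.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk levels named in the table above."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Headline obligation per tier, paraphrased from the table (not legal text).
PRIMARY_OBLIGATION = {
    RiskTier.UNACCEPTABLE: "banned outright",
    RiskTier.HIGH: "strict compliance before market entry",
    RiskTier.LIMITED: "transparency toward users",
    RiskTier.MINIMAL: "no new legal obligations",
}


def may_be_marketed(tier: RiskTier) -> bool:
    """Only the unacceptable tier is excluded from the EU market entirely."""
    return tier is not RiskTier.UNACCEPTABLE


# Hypothetical inventory: the tier assignments here are assumptions
# made for illustration, not the result of a legal review.
inventory = {
    "spam-filter": RiskTier.MINIMAL,
    "support-chatbot": RiskTier.LIMITED,
    "cv-screening-tool": RiskTier.HIGH,
}

for name, tier in inventory.items():
    print(f"{name}: {tier.value} risk -> {PRIMARY_OBLIGATION[tier]}")
```

Keeping an inventory like this next to each system's documentation makes it easier to see at a glance which products need the heavier compliance work.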

Unacceptable Risk: The Banned AI Practices

At the very peak of the pyramid are AI systems that pose an unacceptable risk. These are applications the EU considers a direct threat to people's safety, livelihoods, and fundamental rights. As a result, they are banned outright.

There’s no gray area here. No compliance checklist can make these systems okay—they simply are not allowed in the EU.

This category includes AI that:

- Deploys manipulative techniques: Any system using subliminal tricks to distort behavior and cause physical or psychological harm.

- Exploits vulnerabilities: AI designed to take advantage of a person’s age, disability, or social or economic situation.

- Enables social scoring: Systems used by public authorities to assign a "social score" that leads to unfair treatment.

- Uses real-time biometric identification: The use of real-time remote biometric identification in public spaces by law enforcement is banned, with only a few very narrow exceptions for severe crimes.

High-Risk: Strict Rules for Critical Systems

The next level down is for high-risk AI systems. These aren't banned, but they face a mountain of strict legal requirements before they can ever hit the market and must be monitored throughout their entire lifecycle. These are systems where a mistake or failure could have a devastating impact on someone's life.

High-risk AI is all about context. The algorithm itself isn't inherently risky, but its application in a critical area like medical diagnostics or hiring makes it so. The Act focuses on the 'how' and 'where' an AI is used, not just the 'what'.

The Act spells out specific use cases that automatically fall into this category, including AI used in:

- Critical infrastructure: Think systems managing our water, gas, and electricity grids.

- Education and vocational training: AI used to score exams or decide who gets into a program.

- Employment and workforce management: Tools that screen résumés, monitor performance, or make promotion decisions.

- Law enforcement and justice: AI used to evaluate evidence or predict the likelihood of re-offending.

- Medical devices: Software that assists with a diagnosis or guides a surgeon’s hand.

Limited Risk: A Focus on Transparency

The limited risk category is all about one thing: transparency. The principle is simple—people have a right to know when they are dealing with a machine. The obligations here are straightforward and designed to prevent deception.

If your AI system fits into this category, you just need to make sure users know they aren't interacting with a human.

This covers common applications we see every day:

- Chatbots: Customer service bots must clearly state they are AI.

- Deepfakes: Any AI-generated audio, image, or video that looks like a real person or place must be labeled as artificially generated or manipulated.

- AI-generated content: Articles or posts about public interest matters generated by AI must be disclosed as such.

Minimal Risk: Freedom to Innovate

Finally, we have the largest category by a huge margin: minimal risk. The vast majority of AI systems in use today fall in here. These are applications where the risk to a person's rights or safety is basically zero.

We're talking about AI-powered spam filters, recommendation engines on Netflix, or the non-player characters in a video game. The EU AI Act imposes no new legal obligations on these systems. Companies are free to develop and use them without regulatory hurdles, allowing innovation to thrive where it poses little to no threat.

Getting to Grips with the EU AI Act's Timeline

Getting compliant with the EU AI Act isn't an overnight task. It’s a journey with a very deliberate, multi-year roadmap. The regulation rolls out in stages, giving everyone a chance to get their ducks in a row. This isn't just about delaying the inevitable; it’s a practical recognition that different rules require different levels of preparation.

Think of it like building a house. You don't start with the roof. You lay the foundation, put up the frame, and then worry about the paint colors. The AI Act is structured the same way, starting with outright bans on the most dangerous AI before layering on the more detailed compliance frameworks for high-risk systems.

A Phased Rollout Over Three Years

The full implementation timeline stretches over three years from the Act's entry into force. The Act was published in the EU's Official Journal in July 2024 and entered into force on August 1, 2024, with the first real changes kicking in six months later. That's when the prohibitions on 'unacceptable risk' AI systems (things like government-run social scoring or subliminal manipulation) become enforceable.

From there, other key pieces fall into place. Requirements for 'high-risk' AI and transparency rules for general-purpose AI will follow, with most rules active within 24 months. This staggered approach is explored in great detail in this in-depth mid-2025 round-up.

This isn't an arbitrary schedule. The idea is to tackle the biggest threats to our fundamental rights first, all while giving businesses a clear and predictable calendar to adapt their technology, update their processes, and build new governance structures without grinding everything to a halt.

Key Dates for Your Compliance Calendar

To stay ahead of the curve, you need to know the key deadlines. While the final deadline for high-risk systems is the big one, several other important dates come much sooner. These are the checkpoints on your road to full compliance.

Here’s a quick look at the major phases from the Act's entry into force:

- Early 2025 (6 months in): The outright bans on unacceptable-risk AI applications take effect. This is the first major enforcement date, targeting the most harmful uses of AI.

- Mid-2025 (12 months in): Rules for providers of general-purpose AI (GPAI) models kick in. This is where things like technical documentation and transparency for foundational models become mandatory.

- Mid-2026 (24 months in): Showtime. The majority of the Act's rules become fully applicable, marking the main deadline for high-risk AI systems to be compliant.

- Mid-2027 (36 months in): The final piece of the puzzle. Rules will apply to high-risk AI systems that are part of products already covered by other EU laws, like medical devices.

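- 2030 (6 years in): A long-tail deadline. High-risk AI systems already in use by public authorities must be brought into compliance by August 2030, and AI components of certain large-scale EU IT systems by the end of 2030.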

The staggered timeline isn't just a grace period; it's a strategic plan. It allows the regulatory infrastructure, like the AI Office and national authorities, to be established and prepared before the heaviest compliance burdens kick in.

This phased rollout ensures that by the time the toughest rules are enforced, the entire ecosystem—from developers to regulators—is ready to handle them.
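For planning purposes, the main checkpoints above can be turned into calendar dates with some simple month arithmetic. The sketch below assumes an entry-into-force date of August 1, 2024 and uses a hypothetical helper, `add_months`. The results are approximations: the Act's official application dates fall one day later (for example, February 2, 2025 for the bans), so always confirm against the legal text.

```python
from datetime import date

# Assumption: the Act entered into force on 1 August 2024.
ENTRY_INTO_FORCE = date(2024, 8, 1)

# Milestones from the list above, as months after entry into force.
MILESTONES = {
    "Bans on unacceptable-risk AI": 6,
    "General-purpose AI (GPAI) rules": 12,
    "Most rules, incl. high-risk systems": 24,
    "High-risk AI inside regulated products": 36,
}


def add_months(start: date, months: int) -> date:
    """Shift a date forward by whole months, keeping the day of month.

    Safe here because the start day is the 1st, which exists in every month.
    """
    total = start.month - 1 + months
    return date(start.year + total // 12, total % 12 + 1, start.day)


for label, offset in MILESTONES.items():
    print(f"{add_months(ENTRY_INTO_FORCE, offset).isoformat()}  {label}")
```

Dropping these dates into a shared compliance calendar is a cheap way to make sure nobody is surprised by the earlier deadlines.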

What Is the New EU AI Office?

A central player in this whole process is the newly formed EU AI Office. Housed within the European Commission, this body is the central coordinator and guide for the AI Act, and the direct supervisor of general-purpose AI models. Its creation is a huge milestone, signaling a shift toward centralized and consistent oversight.

The AI Office has a big job ahead of it. It’s tasked with:

- Overseeing GPAI Models: It will directly supervise the most powerful AI models to make sure they're playing by the rules.

- Coordinating Enforcement: It works hand-in-hand with national authorities in each EU country to ensure the law is applied the same way everywhere.

- Providing Guidance: It will develop practical guidelines and codes of practice to help businesses figure out exactly what they need to do to comply.

Essentially, the AI Office is the linchpin holding the entire regulatory structure together. Its job is to make sure the AI Act is more than just words on paper—it's a practical, harmonized reality that fosters a true single market for trustworthy AI. For any business navigating this new world, the guidance from this office will be an absolute lifeline.

Meeting Compliance for High-Risk AI Systems

If you're building or deploying a high-risk AI system, the EU AI Act turns abstract ethical principles into a concrete, non-negotiable checklist. These aren't just friendly suggestions—they're the mandatory steps you must take to ensure your systems are safe, transparent, and fair from the moment they go live. Consider it the price of entry for doing business in the European Union.

It's a lot like getting a new car approved for the road. Before a vehicle hits the showroom, it has to pass a whole battery of safety tests, come with detailed technical manuals, and include features that keep the driver in control. The Act is applying that same thinking to high-risk AI, demanding a series of checks and balances before these systems can start impacting people's lives.

The Foundation of Compliance: A Robust Risk Management System

At the very core of the AI Act is the demand for a robust risk management system. This isn't a "set it and forget it" task; it's a living, breathing process that you have to establish, document, and maintain for the entire lifespan of your AI system.

The whole point is to constantly identify, evaluate, and shut down potential risks to health, safety, or fundamental rights. This starts on day one, back in the design phase, and carries on through post-market monitoring. It ensures the system stays safe even as it learns or encounters new situations. For teams just getting started, looking into available risk control software can be a great way to build a compliant process from the ground up.

This ongoing cycle of risk assessment is absolutely fundamental. It forces developers to think ahead about what could go wrong and build the necessary safeguards right into the AI's architecture, instead of trying to patch up problems after the damage is done.

Key Obligations for High-Risk AI Providers

Beyond a solid risk management framework, the Act lays out several other crucial obligations. Each one is designed to plug a potential hole where an AI system could fail.

Data Governance: The data you use to train your AI is everything. You have to prove that your training, validation, and testing datasets are relevant, representative, and as free from errors and biases as you can make them. This is the only way to prevent skewed or discriminatory outcomes.

Technical Documentation: Get ready to keep detailed records. You must create and maintain a comprehensive technical file that acts as a blueprint for your AI. It needs to explain its purpose, its capabilities, its limits, and the logic that went into building it. Regulators will ask for this to verify you're compliant.

Record-Keeping: High-risk systems need the ability to automatically log events as they operate. Think of this as a black box for your AI. These logs are essential for tracing what happened after an incident, helping everyone understand why an AI made a certain call or produced a particular result.

Transparency and User Instructions: People need to understand what the AI is telling them. You are required to provide clear, easy-to-understand instructions that detail what the AI can do, its accuracy levels, and any risks users should be aware of. No black boxes allowed.

Human Oversight: This is a big one, and it's non-negotiable. Every high-risk system must be designed so a human can effectively watch over it. This means building in "human-in-the-loop" measures that allow a person to step in, shut the system down, or override a bad decision.

Accuracy, Robustness, and Cybersecurity: Finally, your AI has to be reliable. It needs to perform consistently and accurately, be tough enough to handle errors or unexpected situations, and be secure against hackers trying to manipulate it. This means cybersecurity can't be an afterthought.

The EU AI Act’s compliance requirements are designed to build a chain of trust. High-quality data leads to a more reliable model, which is explained by clear documentation, monitored by robust logging, controlled through human oversight, and protected by strong cybersecurity.
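To make the record-keeping and human-oversight obligations above a little more concrete, here is a minimal sketch of what an automatic event log entry might look like. The Act does not prescribe this format: the function `make_log_record`, the field names, and the example system are all hypothetical, and a real implementation would need retention, access control, and tamper protection on top.

```python
import hashlib
import json
from datetime import datetime, timezone


def make_log_record(system_id: str, model_input: str, model_output: str,
                    overridden_by_human: bool = False) -> str:
    """Build one JSON log line for a single decision made by an AI system.

    The input is stored as a SHA-256 hash so the log can later be matched
    to the original data without copying personal data into the log itself.
    """
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "system_id": system_id,
        "input_sha256": hashlib.sha256(model_input.encode("utf-8")).hexdigest(),
        "output": model_output,
        # Records whether a human reviewer stepped in and changed the outcome.
        "overridden_by_human": overridden_by_human,
    }
    return json.dumps(record, sort_keys=True)


# Hypothetical usage: a hiring tool's recommendation that a reviewer overrode.
print(make_log_record("cv-screening-tool", "candidate profile text",
                      "reject", overridden_by_human=True))
```

Writing one line like this per decision gives you the "black box" trail described above, and the override flag shows that human oversight is actually being exercised rather than just designed in.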

The Conformity Assessment: A Gateway to the Market

Before you can launch any high-risk AI system in the EU, it must pass a conformity assessment. This is the final exam—the official check to prove that your system meets every single legal requirement in the Act.

The assessment process is there to verify that your risk management system is active, your technical documentation is complete, and all your other duties have been met. For most high-risk systems, you'll conduct this as a self-assessment. However, for a few extremely critical applications (like remote biometric identification), you'll need a third-party "notified body" to sign off on it.

Once your system gets the green light, you can affix the CE marking to your product. This little symbol is a big deal. It's your declaration that the AI complies with EU law, giving you the legal right to sell and operate it across all 27 member states. It tells customers and regulators alike that your system has met some of the highest standards for AI safety in the world.

Enforcement, Penalties, and Governance

The EU AI Act isn’t just a friendly suggestion; it’s a legally binding framework with serious consequences for getting it wrong. The regulation backs up its rules with a strong governance structure and a tiered penalty system that has real financial bite. For any organization with a footprint in the EU market, understanding how this works is non-negotiable.

The penalties are deliberately steep, designed to make companies think twice before cutting corners. These aren't just slaps on the wrist. Fines can reach eye-watering sums, calculated as either a specific amount or a percentage of a company’s total worldwide annual turnover—whichever figure is higher.

This structure makes sure the punishment fits the crime, scaling from minor administrative mistakes all the way up to deploying prohibited, dangerous AI. The potential financial hit alone makes a proactive compliance strategy an absolute must.

A Breakdown of the Fines

The penalty system is broken down into clear tiers, so there’s no ambiguity about what’s at stake.

Violating Banned AI Practices: This is the most serious offense. Using an AI system from the "unacceptable risk" category will cost you. Companies face fines of up to €35 million or 7% of their global annual turnover.

Non-Compliance with Key Obligations: If you fail to meet the core requirements for high-risk systems—things like proper risk management, data governance, or human oversight—the fines can reach up to €15 million or 3% of global turnover.

Supplying Incorrect Information: Don't think you can mislead regulators. Providing false, incomplete, or deceptive information to authorities comes with its own penalty: up to €7.5 million or 1% of global turnover.

These fines are intentionally severe. It's the EU's way of signaling just how serious it is about building a trustworthy AI environment. The message is simple: the cost of ignoring the rules will far exceed the cost of building safe, transparent AI from day one.
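The "whichever is higher" rule is easy to misread, so here is a small worked sketch of the arithmetic for the two top tiers. The function `max_fine_eur` and the turnover figures are hypothetical, percentages are expressed in basis points to keep the math in whole euros, and the result is only the ceiling: actual fines are set case by case by the authorities.

```python
def max_fine_eur(fixed_cap_eur: int, turnover_share_bp: int,
                 annual_turnover_eur: int) -> int:
    """Return the fine ceiling: the higher of a fixed cap or a turnover share.

    turnover_share_bp is given in basis points (700 = 7%).
    """
    turnover_based = annual_turnover_eur * turnover_share_bp // 10_000
    return max(fixed_cap_eur, turnover_based)


# Banned practices: up to EUR 35 million or 7% of global annual turnover.
print(max_fine_eur(35_000_000, 700, 1_000_000_000))  # large firm: 7% dominates
print(max_fine_eur(35_000_000, 700, 100_000_000))    # smaller firm: fixed cap dominates

# High-risk obligations: up to EUR 15 million or 3% of global annual turnover.
print(max_fine_eur(15_000_000, 300, 1_000_000_000))
```

For a company with €1 billion in turnover, the ceiling for a banned practice is €70 million, double the fixed figure. For a company with €100 million in turnover, the €35 million fixed amount is the larger of the two.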

The Governance and Enforcement Structure

Enforcing this landmark regulation isn't a one-person job. It’s a coordinated effort between a central EU body and individual national authorities. This two-pronged approach ensures the law is applied consistently across all 27 member states while still tapping into local expertise.

At the center of it all is the European AI Office. This new body, housed within the European Commission, has a big job. It’s in charge of overseeing the most advanced general-purpose AI models, creating standards and guidelines, and making sure the national regulators are all on the same page.

Working alongside the AI Office, each EU member state will appoint its own national supervisory authorities. Think of these as the boots on the ground. They’re the ones conducting market surveillance, investigating potential breaches, and handing out penalties to companies in their jurisdiction.

Together, these bodies create a complete enforcement network. To see how these roles interconnect, you can check out our detailed guide on AI governance, compliance, and risk. This collaborative model ensures the AI Act is enforced effectively and consistently, giving businesses a predictable legal landscape to operate in.

Your Questions About the EU AI Act, Answered

As the world's first major rulebook for artificial intelligence, the EU AI Act is sparking a lot of questions. It's a complex piece of legislation, and figuring out what it means for your business, your development team, or your compliance strategy is the first step to getting prepared. Let's break down some of the most common questions we hear.

We’ll get straight to the point on big topics like the Act’s global reach, how it tackles the powerful foundation models behind tools like ChatGPT, and the big debate: is this a roadblock or a runway for innovation? Getting these answers right will help you navigate this new territory with confidence.

Does the EU AI Act Apply if My Company Isn’t Based in the EU?

Yes, it almost certainly can. This is one of the most important things to understand about the Act—its reach is global.

The regulation has what’s known as extraterritorial scope. In simple terms, this means its rules apply far beyond the physical borders of the European Union. The critical factor isn't where your company is located, but where your AI system is used.

If you sell an AI product on the EU market, provide an AI service to people in the EU, or even if the output of your AI is used in the EU, you're on the hook.

Imagine a US-based software company selling AI-powered hiring tools to a German corporation. That company must ensure its product fully complies with the AI Act’s high-risk rules. It doesn’t matter that the provider is in America; what matters is that the customer is in the EU.

This global reach effectively sets a new international standard. Any company wanting to do business in the massive EU single market will need to align their AI development and governance with these rules.

How Does the Act Handle General-Purpose AI Models?

General-purpose AI (GPAI) models—often called foundation models—get their own special set of rules. Think of the powerful, flexible models that power tools like ChatGPT or Midjourney. Because they can be adapted for thousands of different uses, they don't fit neatly into the standard risk categories.

The Act tackles this with a clever two-tier system.

Baseline Rules for All GPAI Models: Every GPAI model provider has to meet basic transparency requirements. This means creating detailed technical documentation, giving clear information to developers building on their model, and having a policy to respect EU copyright law.

Stricter Rules for GPAI Models with Systemic Risk: A small number of the most powerful models are labeled as posing "systemic risk." This happens if the model was trained using an enormous amount of computing power (more than 10^25 floating-point operations, or FLOPs, in total) or if the European Commission specifically designates it as such. These models face much tougher obligations, like performing model evaluations, assessing and mitigating potential risks, reporting serious incidents, and maintaining rock-solid cybersecurity.

This approach ensures the most powerful and potentially impactful models get the highest level of scrutiny, without bogging down smaller or more specialized ones.
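The two-tier logic described above boils down to a simple check, sketched below. The function name `has_systemic_risk` and the example compute figures are hypothetical. The sketch also assumes you already have a trustworthy estimate of total training compute, which is the hard part in practice.

```python
# Threshold from the text above: more than 10^25 floating-point operations
# of cumulative training compute.
SYSTEMIC_RISK_FLOP_THRESHOLD = 10**25


def has_systemic_risk(training_flop: float,
                      designated_by_commission: bool = False) -> bool:
    """Return True if a GPAI model lands in the stricter tier.

    Either route is sufficient: exceeding the compute threshold, or being
    specifically designated by the European Commission.
    """
    return designated_by_commission or training_flop > SYSTEMIC_RISK_FLOP_THRESHOLD


# Hypothetical models with made-up compute estimates.
print(has_systemic_risk(3e25))                                 # above the threshold
print(has_systemic_risk(5e23))                                 # well below it
print(has_systemic_risk(5e23, designated_by_commission=True))  # designated anyway
```

Note that the baseline transparency duties apply to every GPAI provider regardless of what this check returns. It only decides whether the additional obligations come into play.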

Will the EU AI Act Stifle Innovation?

This is a common worry—that strict rules will slow down progress and put European companies behind. But the EU's goal is actually the opposite: to foster trustworthy innovation.

By creating a single, clear set of rules for all 27 member states, the Act gives businesses legal certainty. Instead of trying to navigate a messy patchwork of different national laws, companies now have a predictable framework to work within. That clarity is a huge plus for anyone looking to make long-term investments in AI.

Plus, the risk-based approach is crucial here. The vast majority of AI systems will fall into the minimal risk category and face no new rules at all. This gives developers complete freedom to experiment and build in low-stakes areas. The heavy-duty requirements are laser-focused only on the few applications where people's health, safety, or fundamental rights could be at risk.

The thinking is that by building a reputation for safe, reliable AI, Europe can increase public trust, which ultimately drives wider adoption and creates a stable, thriving market for innovative AI.
